ChatGPT, an AI-driven chatbot built on a "generative pre-trained transformer" (the GPT in its name), is the newest star on social media thanks to its ability to respond to queries in a human-like manner.

The program was developed by OpenAI and released to the public on November 30, 2022. It uses machine learning algorithms to generate human-like replies to a wide variety of inputs.

While AI researchers have long known about highly capable language models, this is the first time such a powerful tool has been made available to the general public through a free, user-friendly online interface.

According to Varun Mayya, CEO of software development firm Avalon Labs, "ChatGPT seems like a human being."

ChatGPT has recently taken over social media, with viral videos showing it writing Shakespearean poetry, musing on philosophical questions, and finding bugs in computer code.

These examples are noteworthy because their quality is high enough that they could pass for human work. Furthermore, ChatGPT is not even OpenAI's most advanced model: the company is reportedly developing a more capable one that could be released as early as 2023.

“This is like the early era of the internet, right in the 1990s, where everyone is like, ‘Is this a thing? Is this not a thing?’” said Mayya, who has been building software for ten years. “But now, beyond a doubt, it is a thing. It’s very ground-breaking.”

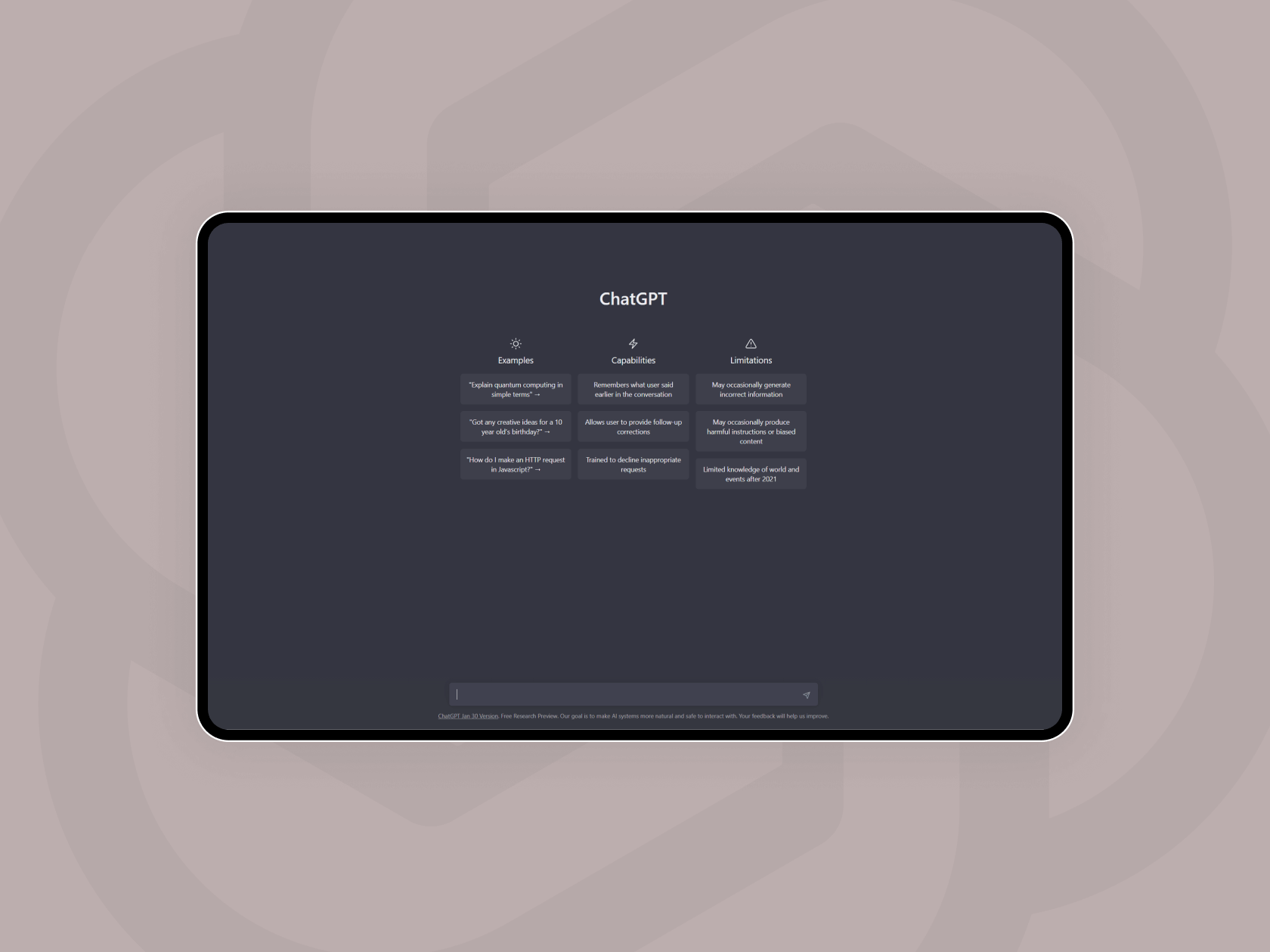

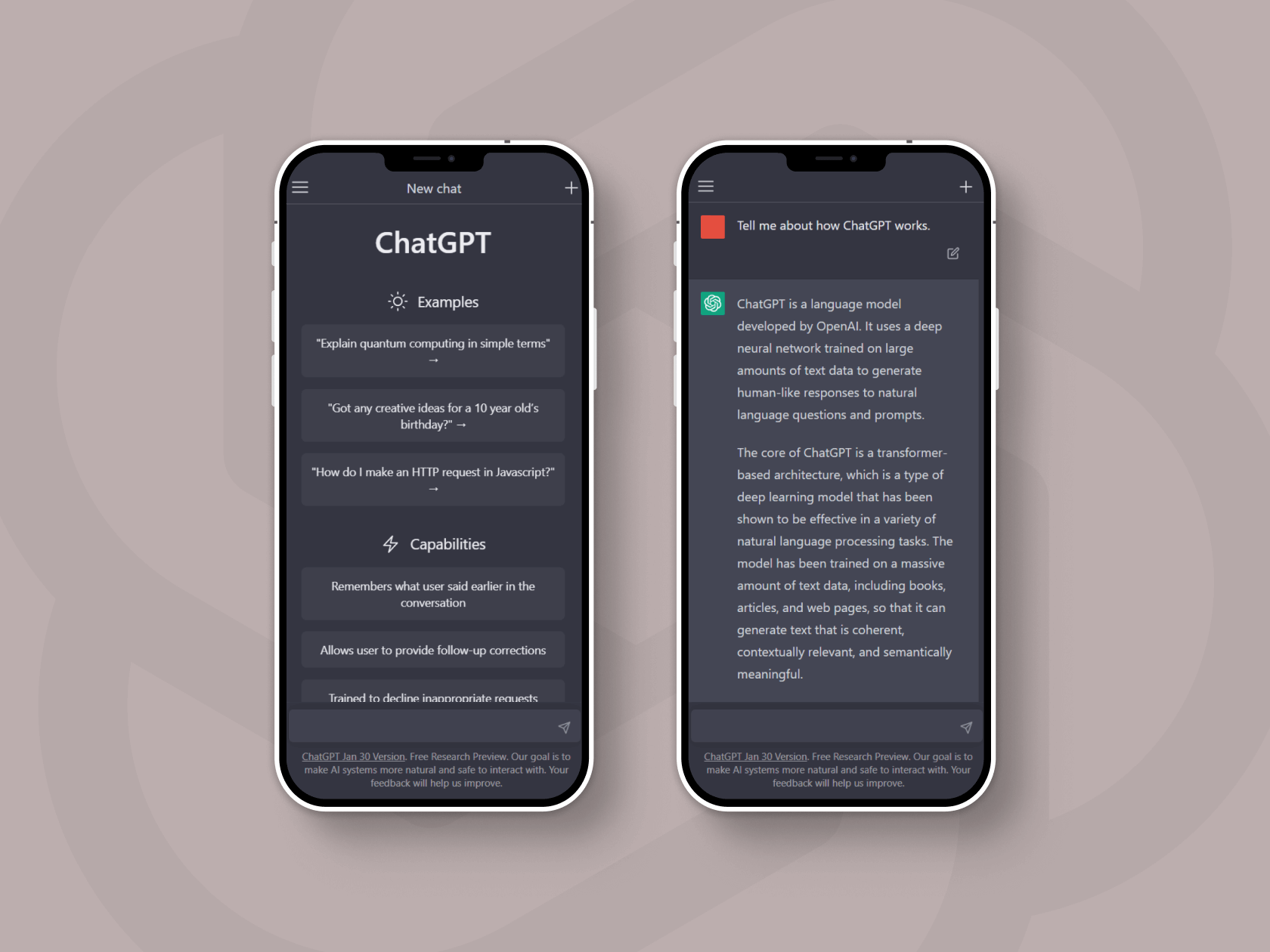

What features does ChatGPT have?

Since the software is still at an early stage, there are currently two types of users: those using it for practical work (like one product designer who used the bot to create a fully functional notes app) and those using it for fun (to do things like make the bot condemn itself in the style of Shakespeare).

Here are some of its features:

- ChatGPT is fine-tuned from a model in the GPT-3.5 series, which was trained on a large quantity of text data from many sources.

- ChatGPT interacts conversationally, a format that enables the bot to:

1. Answer follow-up questions

2. Admit its mistakes

3. Challenge incorrect premises

4. Reject inappropriate requests

- ChatGPT is a sister model of InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response.

- Most significantly, ChatGPT can generate complex Python code and write college-level essays in response to a prompt, raising concerns that such technology may eventually replace human workers such as journalists and programmers.

- It also has drawbacks: its knowledge ends in 2021, it often gives incorrect answers, it repeats itself, and it sometimes claims it cannot answer a question yet answers correctly when the question is slightly rephrased.

ChatGPT’s principle of operation

Like InstructGPT, this model was trained using Reinforcement Learning from Human Feedback (RLHF), with slight differences in the data collection setup. First, an initial model was trained with supervised fine-tuning: human AI trainers wrote conversations in which they played both sides, the user and the AI assistant. The trainers were given access to model-written suggestions to help them compose their replies.
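As a rough illustration of the supervised fine-tuning step, the sketch below turns one trainer-written conversation into training examples: for each assistant turn, the prompt is everything said so far and the target is the reply the trainer wrote. The `Turn` type, the `User:`/`Assistant:` prefixes, and `build_sft_examples` are hypothetical; OpenAI has not published the exact format it uses.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Turn:
    role: str  # "user" or "assistant" (hypothetical role labels)
    text: str


def build_sft_examples(dialogue: List[Turn]) -> List[Tuple[str, str]]:
    """Return one (prompt, target) pair per assistant turn.

    The prompt is the conversation before that turn, rendered with
    hypothetical "User:"/"Assistant:" prefixes and ending in "Assistant:";
    the target is the reply the trainer wrote while playing the assistant.
    """
    examples: List[Tuple[str, str]] = []
    history: List[str] = []
    for turn in dialogue:
        prefix = "User: " if turn.role == "user" else "Assistant: "
        if turn.role == "assistant":
            prompt = "\n".join(history + ["Assistant:"])
            examples.append((prompt, turn.text))
        # Every turn, user or assistant, becomes context for later turns.
        history.append(prefix + turn.text)
    return examples
```

Each pair would then be used to train the model to produce the target text after the prompt; the sketch covers only data preparation, not the training itself.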

To build a reward model for reinforcement learning, comparison data was needed, consisting of two or more model replies ranked by quality. To collect it, conversations that AI trainers had held with the chatbot were used: a model-written message was selected at random, several alternative completions were sampled, and AI trainers ranked them. Using these reward models, the model can then be fine-tuned with Proximal Policy Optimization (PPO). This process was repeated over several iterations.
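The reward-model and PPO steps can be sketched with toy numbers. A ranking of K completions is expanded into every (preferred, rejected) pair, and the reward model is penalized with the pairwise loss -log(sigmoid(r_preferred - r_rejected)), the form described in OpenAI's InstructGPT paper. The last function is PPO's clipped surrogate objective for a single sample. This is a minimal sketch under simplifying assumptions: the scalar rewards, probability ratios, and advantages stand in for neural network outputs, the function names are illustrative, and details such as batching, the per-token KL penalty InstructGPT adds, and the gradient updates themselves are omitted.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Expand a best-to-worst ranking of K completions into all
    K * (K - 1) / 2 (preferred, rejected) pairs."""
    # combinations() keeps list order, so the first item of each pair
    # is always the higher-ranked completion.
    return list(combinations(ranked, 2))


def pairwise_reward_loss(rewards: Dict[str, float], ranked: List[str]) -> float:
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all pairs.

    `rewards` maps each completion ID to the reward model's scalar score
    (toy numbers here). The loss shrinks as preferred completions are
    scored increasingly higher than rejected ones.
    """
    pairs = ranking_to_pairs(ranked)
    if not pairs:
        raise ValueError("need at least two ranked completions")
    total = 0.0
    for preferred, rejected in pairs:
        margin = rewards[preferred] - rewards[rejected]
        # -log(sigmoid(m)) equals log(1 + exp(-m))
        total += math.log1p(math.exp(-margin))
    return total / len(pairs)


def ppo_clipped_objective(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """PPO clipped surrogate objective for one sample (to be maximized).

    ratio = probability of the sampled response under the new policy
    divided by its probability under the policy that generated it.
    Clipping the ratio to [1 - epsilon, 1 + epsilon] limits how much a
    single update can gain by moving far from the old policy.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)
```

With rewards {"a": 2.0, "b": 1.0, "c": 0.0}, the ranking a > b > c yields a lower loss than the reversed ranking, and a ratio of 1.5 with an advantage of 1.0 is capped at 1.2 when epsilon is 0.2, so the policy gains nothing extra from drifting further.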

The limitations of ChatGPT

On the one hand, ChatGPT is capable of producing answers that look convincing. On the other hand, what counts as convincing depends on the context.

Limitations include:

- ChatGPT sometimes gives answers that sound plausible but are incorrect or nonsensical. Fixing this problem is difficult because: (1) during RL training, there is currently no source of truth; (2) training the model to be more cautious makes it decline questions it could answer correctly; and (3) supervised training misleads the model, because the ideal answer depends on what the model knows rather than what the human demonstrator knows.

- ChatGPT is sensitive to changes in how a question is phrased and to repeated attempts at the same question. For instance, when a question is asked one way, the model may claim not to know the answer, but when it is rephrased slightly, the model may answer correctly.

- The model is often excessively verbose and overuses certain phrases, such as restating that it is a language model trained by OpenAI. These problems stem from biases in the training data (trainers prefer longer replies that appear more thorough) and from well-known over-optimization issues.

- Ideally, the model would ask clarifying questions when a user's input is ambiguous. Instead, current models usually guess what the user meant.

- Although efforts have been made to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. OpenAI uses its Moderation API to warn about or block certain types of unsafe content, though it expects some false negatives and false positives for now.
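The last point mentions using the Moderation API to warn users about, or block, certain categories of content. The sketch below shows one way an application might act on a moderation result. It assumes the response shape of OpenAI's `/v1/moderations` endpoint (a `results` list whose entries include a `categories` mapping of category names to booleans); sending the HTTP request is left out, the sample response is made up and trimmed to three categories, and the warn-versus-block policy in the hypothetical `moderation_action` function is an application choice, not OpenAI's own behavior. The current category names should be checked against the official API reference.

```python
from typing import Any, Dict, Iterable, List


def flagged_categories(response: Dict[str, Any]) -> List[str]:
    """Return category names marked True in a moderation response.

    Assumes the documented shape of a /v1/moderations response:
    a "results" list whose entries hold a "categories" dict that maps
    category names to booleans.
    """
    names: List[str] = []
    for result in response.get("results", []):
        for name, hit in result.get("categories", {}).items():
            if hit and name not in names:
                names.append(name)
    return names


def moderation_action(response: Dict[str, Any], blocked: Iterable[str]) -> str:
    """Hypothetical application policy: "block" if any flagged category
    is on this app's blocklist, "warn" if something else was flagged,
    and "allow" otherwise."""
    blocked_set = set(blocked)
    hits = flagged_categories(response)
    if any(name in blocked_set for name in hits):
        return "block"
    if hits:
        return "warn"
    return "allow"


# Made-up response trimmed to three categories (not real API output).
sample_response = {
    "results": [
        {
            "flagged": True,
            "categories": {"hate": False, "violence": True, "self-harm": False},
        }
    ]
}
```

Here `moderation_action(sample_response, ["violence"])` returns "block", while `moderation_action(sample_response, ["hate"])` returns "warn", because violence was flagged but is not on that second blocklist.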

How it came about

Sam Altman, Elon Musk, and other Silicon Valley investors founded OpenAI in 2015 as a non-profit artificial intelligence research organization. In 2019, OpenAI changed its legal structure to become a "capped-profit" company, meaning that investors' returns are capped once they reach a certain threshold.

Musk resigned from OpenAI's board of directors in 2018, citing a potential conflict of interest with Tesla's AI work on autonomous driving. He nevertheless continues to invest, and he expressed his enthusiasm about ChatGPT's debut, commenting, "ChatGPT is scary good."

ChatGPT is not the first AI chatbot. Many companies, including Microsoft, have experimented with chatbots, with little success. When Microsoft launched its Tay bot in 2016, Twitter users allegedly taught it sexist and racist language in less than 24 hours, ultimately leading Microsoft to take it offline.

Meta joined the chatbot race when it released BlenderBot 3 in August 2022. According to Mashable, the bot, like Tay, came under fire for spreading racist, antisemitic, and misleading content, including the claim that Donald Trump won the 2020 presidential election.

To head off controversies like these, OpenAI has deployed the Moderation API, an AI-based moderation system that helps developers determine whether text violates the company's content policy, which prohibits harmful or illegal content. OpenAI acknowledges that its moderation is not perfect and still has issues.

In conclusion

Does all of this mean AI will rule humanity? Not yet, although OpenAI's Altman believes that human-like intelligence in AI is not that far off. To reach optimal performance, however, AI and machine learning models will require extensive training and fine-tuning, which can only be done through human effort.

OpenAI has also noted that the software is not always accurate: it can give answers that sound reasonable or factual but are untrue or illogical, which can contribute to the spread of misinformation.

Ultimately, ChatGPT cannot fully grasp the nuances of spoken and written human language. It is trained to generate words based on its input, but it lacks the capacity to truly understand what those words mean. As a result, compared with a real person's, its replies are likely to be superficial and lacking in depth and understanding.
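The idea of generating words based on the input can be illustrated with a deliberately tiny stand-in: a greedy generator over a hand-written table of next-word probabilities. This is an assumption-laden toy, not how ChatGPT is built. ChatGPT uses a large transformer network over subword tokens and conditions on the whole conversation, whereas this sketch looks only at the previous word, and the table, words, and `generate` function are invented for illustration. What it shares with the real system is the basic loop of repeatedly choosing a likely continuation, with nothing in the loop that represents what the words refer to.

```python
from typing import Dict, List

# Invented next-word probabilities standing in for what a real model learns.
NEXT_WORD: Dict[str, Dict[str, float]] = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"barked": 1.0},
    "sat": {"down": 0.9, "up": 0.1},
}


def generate(prompt: str, max_new_words: int = 5) -> str:
    """Greedily extend the prompt one word at a time.

    At each step, look up the last word and append its most probable
    successor; stop when the table has no entry or the budget is used up.
    """
    words: List[str] = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        options = NEXT_WORD.get(words[-1])
        if not options:
            break
        # Greedy decoding: pick the highest-probability next word.
        words.append(max(options, key=lambda w: options[w]))
    return " ".join(words)
```

For example, `generate("The")` returns "the cat sat down": each word is chosen only because it is the likeliest successor in the table.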