The global FinOps market was valued at $13.44 billion in 2024 and is expected to reach $38.33 billion by 2034, a CAGR of 11.05%. This growth is driven primarily by the widespread adoption of cloud technologies across industries and by the need for businesses to keep costs transparent as they scale. Among the most expensive resources cloud vendors offer are GPU instances, which are typically used for training AI models, real-time rendering, scientific simulations, and data analytics. Below, as a company that specializes in implementing cloud cost management practices, we'll discuss the practices that will help you reduce this expense and make it more predictable.

What Are GPU Workloads?

GPU workloads are computational tasks that depend on the specialized capabilities of graphics processing units, above all massive parallelism, as opposed to traditional CPUs, which are optimized for sequential processing. As noted above, these tasks primarily include training neural networks, high-performance computing, big data analysis, rendering, and simulations.

GPU instances are a scarce resource, and their cost is relatively high. This is primarily due to the high cost of manufacturing these chips and integrating them into cloud data centers, which in turn require high-performance supporting infrastructure. Vendors pass these costs on to end users through higher hourly or per-second pricing, and because many GPU workloads need instances to run continuously, monthly bills grow quickly.

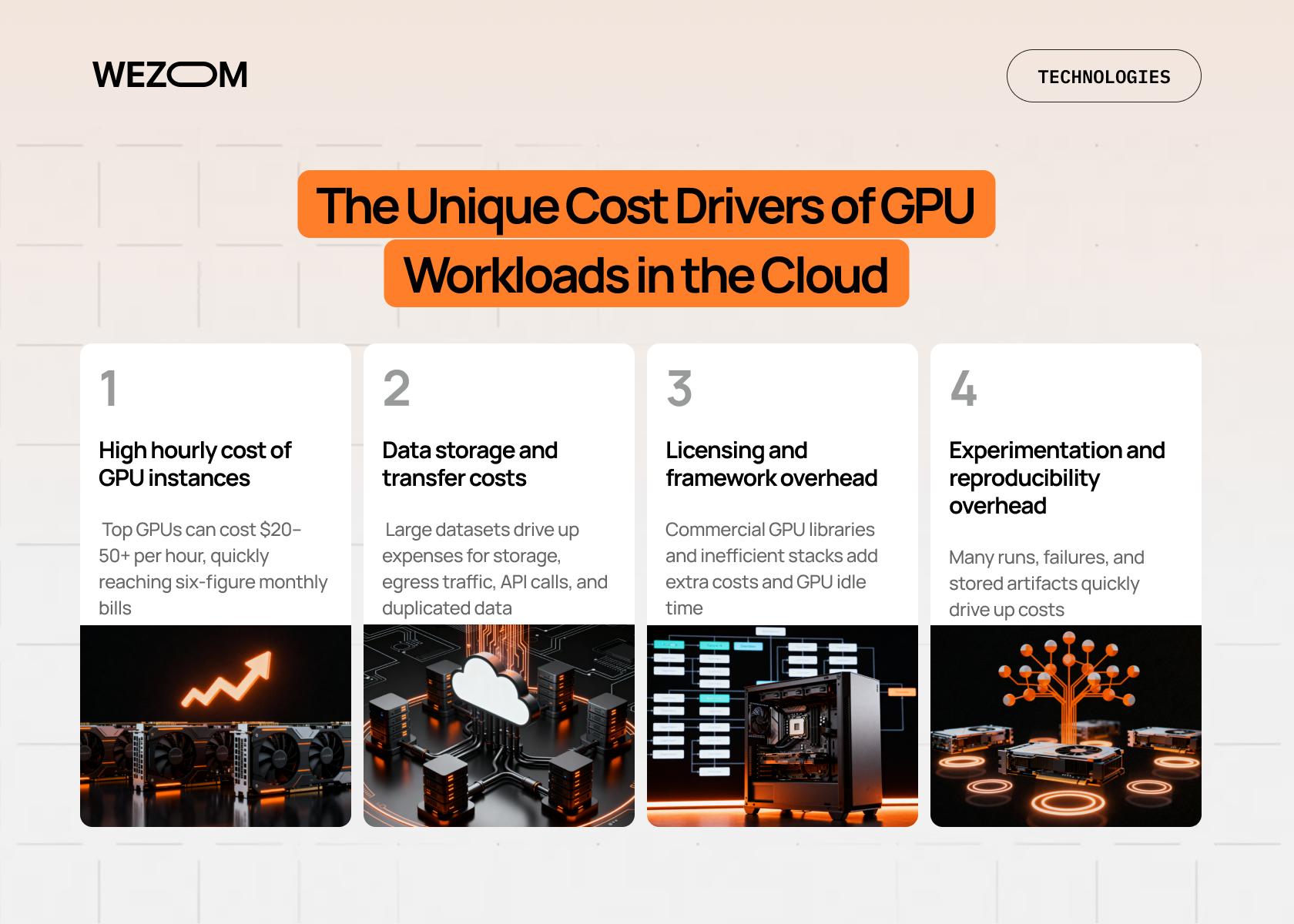

The Unique Cost Drivers of GPU Workloads in the Cloud

High costs for GPU workloads are typically driven by more than one factor:

- High hourly cost of GPU instances. Instances with top-end GPU models can cost tens of times more than CPU-only instances: for example, an instance with several modern GPUs can cost $20 to $50 or more per hour. Running 24/7 (roughly 730 hours a month), a single such instance costs about $15,000 to $36,500 a month, and a small cluster of them can easily reach six figures.

- Data storage and transfer costs. Deep learning training often involves processing terabytes of data, which increases costs in several ways: egress fees when data is transferred out of the cloud, charges for storage API requests, and the storage of multiple copies of the same data (for example, for different experiments).

- Licensing and framework overhead. GPU-optimized commercial libraries or enterprise MLOps platforms can add significant licensing costs. Inefficient configurations or an outdated software stack also add cost, because the GPU sits idle while waiting for data or for CPU-bound operations.

- Experimentation and reproducibility overhead. Failed experiments, as well as A/B testing of hyperparameters or models, require launching multiple instances, either simultaneously or sequentially. Without automatic termination of instances once a task is complete, these runs quickly burn through your budget. Add the need to store all artifacts for reproducibility, and storage alone can significantly inflate your costs as well.
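To see how quickly hourly GPU rates turn into a monthly bill, here is a minimal Python sketch. It assumes an average of 730 hours per month (8,760 hours in a year divided by 12) and flat on-demand pricing; the `monthly_cost` helper and the $40/h rate are illustrative, not any provider's actual price.

```python
HOURS_PER_MONTH = 730  # average hours in a month: 8,760 hours per year / 12


def monthly_cost(hourly_price: float, instances: int = 1, uptime: float = 1.0) -> float:
    """Estimate a monthly on-demand bill for identical GPU instances.

    uptime is the fraction of the month the instances are running
    (1.0 means 24/7). Storage, egress, and licensing are not included.
    """
    if not 0.0 <= uptime <= 1.0:
        raise ValueError("uptime must be between 0 and 1")
    return hourly_price * HOURS_PER_MONTH * instances * uptime


for price in (20, 50):
    print(f"${price}/h, 1 instance, 24/7: ${monthly_cost(price):,.0f}")
print(f"$40/h, 4 instances, 24/7: ${monthly_cost(40, instances=4):,.0f}")
```

At $20 to $50 per hour, one instance running 24/7 comes to $14,600 to $36,500 a month; reaching six figures takes a handful of such instances, for example four hypothetical $40/h instances at $116,800. Lowering `uptime` shows how much scheduling and idle shutdown can save.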

However, all these cost drivers can be reduced, particularly with a well-thought-out FinOps strategy.

Why Is Cloud Cost Optimization Important?

Optimizing the cost of cloud GPU workloads is essential because it directly affects both development and the financial sustainability of the business. Here's why:

- Uncontrolled cloud costs limit your business’s scalability. When the cost of training a single model skyrockets, this creates a financial barrier to launching new projects or increasing the frequency of retraining. Meanwhile, FinOps ensures that financial constraints don't become a bottleneck for your technical growth.

- Cost awareness drives engineering efficiency. When MLOps engineers have visibility into cost dynamics, they can more easily identify efficient algorithms and optimized architectures, which leads to shorter model training times, better-informed instance selection, and less idle GPU time.

- Better forecasting and budgeting. Cloud pricing changes constantly, and inaccurate forecasts can lead to budget shortfalls or, conversely, to expensive unused reserves. A well-thought-out FinOps practice provides precise data on cost per model or per inference, allowing you to set realistic budgets and calculate a more accurate ROI for each ML project.

- Compliance and budget governance become easier. In highly regulated sectors, cost transparency by department and by individual specialist is crucial. With a proper FinOps implementation, you can quickly attribute costs to specific business units in line with your financial policies, which makes unauthorized resource use easier to detect and prevent.
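Cost per model and cost per inference come down to simple ratios once GPU-hours are attributed to a project. The Python sketch below assumes you already know the GPU-hours a project consumed and the price per GPU-hour; the helper names and all figures are hypothetical.

```python
def training_cost(gpus: int, hours: float, price_per_gpu_hour: float) -> float:
    """GPU cost of a single training run."""
    return gpus * hours * price_per_gpu_hour


def cost_per_1k_inferences(gpu_hours: float, hourly_price: float, requests: int) -> float:
    """GPU serving cost per 1,000 inference requests."""
    if requests <= 0:
        raise ValueError("requests must be positive")
    return gpu_hours * hourly_price / requests * 1000


def roi(value_generated: float, total_cost: float) -> float:
    """Return on investment as a fraction: (value - cost) / cost."""
    return (value_generated - total_cost) / total_cost


SERVING_GPU_HOURS = 720  # hypothetical: one GPU serving around the clock for 30 days
SERVING_PRICE = 2.5      # hypothetical $ per GPU-hour
REQUESTS = 3_600_000     # hypothetical monthly requests

train = training_cost(gpus=8, hours=50, price_per_gpu_hour=4.0)
serving = SERVING_GPU_HOURS * SERVING_PRICE
per_1k = cost_per_1k_inferences(SERVING_GPU_HOURS, SERVING_PRICE, REQUESTS)

print(f"training run: ${train:,.0f}")
print(f"serving: ${per_1k:.2f} per 1,000 requests")
print(f"ROI: {roi(value_generated=10_000, total_cost=train + serving):.0%}")
```

Tracking these numbers per release makes it visible when a retrained model or a new instance type changes the unit economics, not just the total bill.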

These are not the only reasons to adopt FinOps cloud cost management for GPU workloads, but they are among the most immediate, and the first positive changes typically become noticeable within a month of optimization.

FinOps Strategy for MLOps: Aligning Cost & Value

FinOps is an approach that integrates people, processes, and tools to achieve maximum financial efficiency in cloud solutions. In the context of MLOps, it ensures that every dollar spent on GPU resources delivers maximum business value. FinOps typically consists of three phases:

- Inform. The primary goal of this phase is to ensure complete cost visibility. This is achieved through detailed accounting, resource tagging by projects, teams, and owners, and the creation of unit cost reports using cloud cost management dashboards and BI systems.

- Optimize. This phase is focused on cost reduction and includes practices such as GPU rightsizing, the use of spot instances, reserved instances, and savings plans.

- Operate. The goal of this phase is to maintain the achieved financial efficiency through automation. This includes, in particular, the creation of automated utilization rules (including the automatic shutdown of idle GPUs), regular cost reviews, and ongoing training for teams in financial discipline.
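The Inform phase depends on consistent tagging. As a minimal Python sketch, assuming billing line items have already been exported together with their tags, the following groups spend by a tag key and reports the share that cannot be attributed to anyone. The data and the simplified schema are hypothetical.

```python
from collections import defaultdict

# Hypothetical, simplified billing line items; real exports (for example,
# AWS Cost and Usage Reports) use different schemas and column names.
LINE_ITEMS = [
    {"cost": 1200.0, "tags": {"team": "research", "project": "llm-finetune"}},
    {"cost": 800.0, "tags": {"team": "serving", "project": "recsys"}},
    {"cost": 300.0, "tags": {"project": "recsys"}},  # missing "team" tag
    {"cost": 150.0, "tags": {}},                     # no tags at all
]


def allocate_by_tag(items, tag_key):
    """Sum costs per value of tag_key; items without the tag go to 'UNTAGGED'."""
    totals = defaultdict(float)
    for item in items:
        totals[item["tags"].get(tag_key, "UNTAGGED")] += item["cost"]
    return dict(totals)


by_team = allocate_by_tag(LINE_ITEMS, "team")
untagged_share = by_team.get("UNTAGGED", 0.0) / sum(by_team.values())
print(by_team)
print(f"untagged spend: {untagged_share:.1%}")
```

The untagged share is a useful first metric for the Inform phase: until it is close to zero, any per-team or per-model report understates someone's spend.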

In the context of MLOps, FinOps brings the following benefits to businesses:

- Accelerated time-to-market due to a clear understanding of optimal costs (meaning you can more quickly select instances for specific tasks);

- Increased ROI due to reduced operational expenses;

- Closer collaboration between engineering, finance, and product teams.

Ultimately, the main change FinOps brings to cloud cost management is a shift from the question "How much are we spending?" to the question "What value are we getting for this spend?" A model with 95% accuracy trained for $100 can turn out to be far more valuable to the business than a model with 96% accuracy trained for $1,000.

Cloud Cost Optimization Strategies & Best Practices for GPU Workloads

Effective cost control for GPU workloads requires a combination of financial tooling and in-depth technical optimizations. This primarily involves:

- Rightsizing GPU instances. Instead of defaulting to the most powerful GPU available, analyze actual utilization. For example, if your AI model uses only 50% of VRAM and doesn't use all the available compute power, you should move to an instance with a smaller or less expensive GPU type. This process should be ongoing and based on VRAM utilization and streaming multiprocessor (SM) activity metrics.

- Using spot/preemptible GPU instances. Spot Instances (AWS), Spot or Preemptible VMs (Google Cloud), and Spot VMs (Microsoft Azure, which previously also offered Low-Priority VMs) offer discounts of up to 90% off the on-demand price because they run on excess cloud capacity. Since they can be interrupted on short notice, they are best suited to fault-tolerant MLOps tasks such as large-scale hyperparameter search, non-urgent pretraining with regular checkpointing, or batch inference.

- Autoscaling workloads. Managed services can automatically scale the number of GPU instances up during peak loads and down to zero during idle periods. This is especially important for inference that serves end users, where the load varies throughout the day.

- Scheduling and workload batching. Scheduling means running training only when it is actually needed and automatically shutting down idle instances during off-peak hours, which can save up to 50% of your budget. Batching means packing multiple small experiments onto the same GPU to maximize its utilization, instead of launching a separate instance for each one. This is more cost-effective because you avoid paying for repeated instance initialization.

- Optimizing ML models. Reducing the computational requirements of the model itself directly reduces the need for expensive GPU resources. This is achievable through quantization (switching from 32-bit or 16-bit precision to 8-bit for inference), pruning (removing redundant weights or neurons to make the model lighter), and distillation (training a small, fast model on the predictions of a larger, slower one).
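Rightsizing and idle shutdown both start from the same utilization metrics. The Python sketch below assumes you already collect per-GPU samples of SM activity and VRAM usage (for example, from NVIDIA DCGM or nvidia-smi) and turns a series of samples into a recommendation. The `GpuSample` type, the `recommend` function, and every threshold are hypothetical and should be tuned to your workloads.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    sm_active: float     # fraction of time streaming multiprocessors were busy (0..1)
    vram_used_gb: float  # GPU memory in use at sample time


def recommend(samples, vram_capacity_gb, idle_threshold=0.05, idle_window=30,
              vram_headroom=1.2, busy_threshold=0.5):
    """Return 'shut down', 'rightsize', or 'keep' for one GPU.

    All thresholds are illustrative defaults, not vendor guidance.
    """
    if not samples:
        raise ValueError("need at least one sample")
    recent = samples[-idle_window:]
    # Idle: every sample in the most recent window is below the activity threshold.
    if len(recent) == idle_window and all(s.sm_active < idle_threshold for s in recent):
        return "shut down"
    peak_vram = max(s.vram_used_gb for s in samples)
    mean_sm = sum(s.sm_active for s in samples) / len(samples)
    # Rightsize: peak memory plus headroom fits in half the card, and compute is underused.
    if peak_vram * vram_headroom <= 0.5 * vram_capacity_gb and mean_sm < busy_threshold:
        return "rightsize"
    return "keep"


samples = [GpuSample(sm_active=0.3, vram_used_gb=20.0) for _ in range(60)]
print(recommend(samples, vram_capacity_gb=80))  # rightsize
```

The headroom factor guards against sizing a GPU to its observed peak and then running out of memory on a slightly larger batch; in practice the idle rule would trigger an automated stop, while rightsizing is usually a recommendation reviewed by an engineer.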

If you are interested in implementing these and other, more specific FinOps practices for MLOps, feel free to contact us.

Best Cloud Cost Management & Optimization Tools

Implementing cloud cost management best practices is impossible without tools that provide cost visibility and automate instance selection. Below are the cloud cost management tools we consider the most useful for this.

Kubecost

Kubecost is one of the best FinOps tools for teams that use Kubernetes to orchestrate GPU workloads. Kubernetes alone cannot answer a question like "How much exactly does Team 1 spend on training their new model, and how much does Team 2 spend on inference?", because the cloud invoice shows only the total cost of the entire cluster. Kubecost, on the other hand, analyzes the whole cluster and allocates costs to individual workloads, accounting not only for GPUs but also for the associated memory, network, and storage costs.
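To illustrate the kind of allocation Kubecost automates, here is a deliberately simplified Python sketch: it splits one GPU node's cost across namespaces in proportion to the GPU-hours their pods requested and reports unrequested capacity as idle. This is not Kubecost's actual allocation model, which also covers CPU, memory, network, and storage; the node price and namespace names are hypothetical.

```python
from collections import defaultdict


def allocate_gpu_node_cost(node_hourly_cost, gpus_per_node, usage, window_hours):
    """Split a GPU node's cost across namespaces by requested GPU-hours.

    usage is a list of (namespace, gpus_requested, hours) tuples for pods that
    ran on the node during the window. Capacity no pod requested is reported
    under the "__idle__" key.
    """
    price_per_gpu_hour = node_hourly_cost / gpus_per_node
    allocated = defaultdict(float)
    for namespace, gpus, hours in usage:
        allocated[namespace] += gpus * hours * price_per_gpu_hour
    idle = node_hourly_cost * window_hours - sum(allocated.values())
    if idle < -1e-9:
        raise ValueError("requested GPU-hours exceed node capacity")
    allocated["__idle__"] = max(idle, 0.0)
    return dict(allocated)


costs = allocate_gpu_node_cost(
    node_hourly_cost=32.0,  # hypothetical 8-GPU node at $32/h, i.e. $4 per GPU-hour
    gpus_per_node=8,
    usage=[("team-1-training", 4, 24), ("team-2-inference", 2, 12)],
    window_hours=24,
)
print(costs)
```

Here Team 1 is charged $384, Team 2 $96, and $288 of the day's $768 is idle capacity, a figure that a single per-cluster invoice line would not reveal.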

CloudHealth by VMware

In a nutshell, it's a cost management platform designed for businesses that follow a multi-cloud approach. Since managing Reserved Instances or Savings Plans across multiple clouds is a complex task that requires manual effort, CloudHealth aggregates and normalizes cost data from all clouds into a single report. For example, it can analyze current GPU usage and give instant recommendations for buying or selling reservations to maximize discounts. It also offers rightsizing, so you can promptly switch to a cheaper GPU instance that is sufficient for a specific task.
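Reservation recommendations rest on a break-even calculation: a commitment is billed for every hour whether or not it is used, so it only pays off if it is in use for more than `reserved_rate / on_demand_rate` of the time. The Python sketch below is a simplified illustration, not CloudHealth's algorithm. It applies that rule to an hourly history of concurrently running GPU instances: the k-th reserved instance is busy only in hours when at least k instances ran, so it is worth buying only if that fraction of hours exceeds the break-even point. Prices and usage are hypothetical, and real offerings add terms (upfront payments, commitment length) that this ignores.

```python
def break_even_utilization(on_demand_hourly: float, reserved_effective_hourly: float) -> float:
    """Fraction of hours a reservation must be in use to beat on-demand pricing."""
    return reserved_effective_hourly / on_demand_hourly


def recommended_reservations(hourly_usage, on_demand_hourly, reserved_effective_hourly):
    """Number of instances worth reserving, given concurrent instance counts per hour."""
    threshold = break_even_utilization(on_demand_hourly, reserved_effective_hourly)
    hours = len(hourly_usage)
    count = 0
    for k in range(1, max(hourly_usage, default=0) + 1):
        # The k-th reserved instance is used only in hours with at least k instances running.
        utilization_k = sum(1 for used in hourly_usage if used >= k) / hours
        if utilization_k <= threshold:
            break  # utilization can only fall as k grows, so stop here
        count = k
    return count


usage = [2, 2, 3, 3, 4, 4, 2, 2, 3, 6]  # hypothetical concurrent GPU instances per hour
print(recommended_reservations(usage, on_demand_hourly=40.0, reserved_effective_hourly=26.0))  # 2
```

With a break-even point of 65%, the first two instances run every hour and are worth reserving, while the third runs only 60% of the time and is cheaper to keep on demand (or on spot).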

Finout

Finout was created to turn raw cloud costs into understandable metrics. If you often find yourself wondering why your cloud bill suddenly grew by several thousand dollars last month, Finout can help: it extracts the necessary data from the cloud, combines it with data from internal systems, and automatically builds a virtual cost map that explains where the increase came from. Ultimately, its main value is the ability to quickly calculate GPU unit costs, for example per user or per model.
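At its core, explaining a bill increase is a per-dimension comparison between two periods. The Python sketch below, which is not Finout's implementation, assumes monthly costs have already been grouped by a key such as project and service; the keys and amounts are hypothetical.

```python
def explain_change(previous: dict, current: dict, top_n: int = 3):
    """Return the total change and the top_n keys that increased the most."""
    keys = previous.keys() | current.keys()
    deltas = {key: current.get(key, 0.0) - previous.get(key, 0.0) for key in keys}
    total = sum(deltas.values())
    top = sorted(deltas.items(), key=lambda item: item[1], reverse=True)[:top_n]
    return total, top


previous = {"llm-training/gpu": 20_000, "recsys-inference/gpu": 8_000, "storage": 3_000}
current = {"llm-training/gpu": 26_000, "recsys-inference/gpu": 7_500, "storage": 3_400, "egress": 900}

total, top = explain_change(previous, current)
print(f"total change: {total:+,.0f} USD")
for key, delta in top:
    print(f"  {key}: {delta:+,.0f} USD")
```

The quality of the answer depends entirely on the grouping key: with good tags, a $6,800 jump resolves into "LLM training GPUs, plus new egress"; without them, it stays a single opaque number.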

Infracost

Infracost is a platform that builds financial discipline directly into the development process through integration with Infrastructure as Code (IaC) tools such as Terraform. The problem it solves is this: typically, an MLOps engineer first writes code to launch a new GPU instance and deploys it, and only days or weeks later does the FinOps team discover that an unreasonably expensive GPU type was selected. Infracost, on the other hand, automatically comments on each pull request, showing engineers how much a given configuration will cost and suggesting other, more cost-effective instance options.
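The shift-left idea behind Infracost can be illustrated with a small Python sketch: given the instance type before and after a change, it reports the monthly cost difference and any cheaper types that still meet a memory requirement. This is not Infracost's API or output format; the instance catalog, type names, and prices are hypothetical, and 730 hours per month is assumed.

```python
HOURS_PER_MONTH = 730

# Hypothetical catalog: on-demand price per hour and total GPU memory per instance.
CATALOG = {
    "gpu-small": {"hourly": 4.0, "vram_gb": 24},
    "gpu-medium": {"hourly": 12.0, "vram_gb": 80},
    "gpu-8x": {"hourly": 40.0, "vram_gb": 640},
}


def review_change(old_type: str, new_type: str, required_vram_gb: float) -> str:
    """Build a review comment describing the cost impact of an instance-type change."""
    old_monthly = CATALOG[old_type]["hourly"] * HOURS_PER_MONTH
    new_monthly = CATALOG[new_type]["hourly"] * HOURS_PER_MONTH
    lines = [f"Monthly cost change: {new_monthly - old_monthly:+,.0f} USD ({old_type} -> {new_type})"]
    cheaper = sorted(
        (name for name, spec in CATALOG.items()
         if spec["vram_gb"] >= required_vram_gb
         and spec["hourly"] < CATALOG[new_type]["hourly"]),
        key=lambda name: CATALOG[name]["hourly"],
    )
    if cheaper:
        lines.append("Cheaper types that meet the memory requirement: " + ", ".join(cheaper))
    return "\n".join(lines)


print(review_change("gpu-medium", "gpu-8x", required_vram_gb=60))
```

Posting this kind of message at review time means the $20,440-per-month jump in the example is discussed before the instance exists, not weeks after it appears on the bill.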

Benefits of Cloud Cost Optimization for GPU Workloads

A comprehensive FinOps strategy for GPU workloads offers several benefits, including:

- Wise cloud resource allocation, thanks to eliminating idle time on expensive instances, making greater use of spot instances, and rightsizing GPU resources;

- Improved cloud utilization, achieved by ensuring that each expensive GPU operates at maximum efficiency with minimal idle time;

- Predictable budgeting and forecasting, enabled by detailed cost-per-unit reporting and the ability to accurately forecast the cost of launching the next MLOps project;

- Faster deployment and scaling, thanks to eliminating delays caused by cloud budget freezes that stem from misunderstandings about the cost of a particular instance;

- Enhanced cross-team collaboration and accountability, achieved by fostering a unified culture of financial responsibility between engineering teams and management.

If you'd like to implement FinOps best practices for cloud cost optimization into your IT infrastructure, write or call us, and we guarantee that you'll begin saving on GPU workloads within the first few months of adopting the new culture and policies.

FAQ

What is cloud cost control for GPU workloads?

Essentially, it’s a set of processes and tools for managing, monitoring, and optimizing financial costs for expensive GPU instances, as well as the storage and networks that ensure their operation.

How does FinOps apply to MLOps and GPU infrastructure?

FinOps in this context means introducing cloud cost control into the development and operation of ML models, ensuring financial transparency at every stage. This includes tagging GPU resources, automating the shutdown of idle instances, and providing engineers with data on the cost of model training.

Why is cloud cost optimization important for GPU workloads?

Because GPU instances are expensive, running them inefficiently (for example, leaving them idle for long periods) can quickly deplete a company's budget and prevent it from scaling further in the cloud.

How often should organizations review and optimize GPU workloads' costs?

Cost analysis should be performed daily or weekly as part of the FinOps Operate phase, so you can address issues before they become a significant drain on your cloud budget. Optimization (usually reviewing Reserved Instances and Savings Plans and evaluating new GPU architectures) should be performed quarterly, as well as whenever workloads change or more cost-effective instance types become available.
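For the daily check, a simple statistical threshold is often enough to catch a runaway job early. The Python sketch below flags a day whose spend exceeds the trailing mean by more than k standard deviations; the window, the value of k, and the spend figures are hypothetical starting points, not a standard.

```python
import statistics


def is_spend_anomaly(history, today, window=14, k=3.0):
    """Flag today's spend if it exceeds the trailing mean by more than k standard deviations."""
    recent = history[-window:]
    if len(recent) < 2:
        return False  # not enough history to estimate variability
    mean = statistics.mean(recent)
    stdev = statistics.stdev(recent)
    return today > mean + k * stdev


daily_gpu_spend = [1000, 1050, 980, 1020, 1010, 990, 1000]  # hypothetical, USD per day
print(is_spend_anomaly(daily_gpu_spend, today=1040))  # False
print(is_spend_anomaly(daily_gpu_spend, today=1400))  # True
```

A forgotten multi-GPU instance shows up as exactly this kind of step change, so alerting on it daily limits the damage to a day or two of spend rather than a full billing cycle.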

What are common mistakes teams make when managing GPU cloud costs?

The most common mistakes include not automatically shutting down idle instances, always using the most powerful GPU regardless of workload intensity, underusing spot instances, and poor tagging across projects and/or teams.