1 million users in just 5 days after launch. Science fiction? No, it’s a real case of ChatGPT.

ChatGPT reached its first 100 million monthly users in just 2 months. For comparison: Instagram needed 2.5 years to do the same. Breakthrough? Phenomenon? Absolutely.

Statistics show us that this technology doesn’t just work — it has already become a standard. Still, some businesses avoid implementing ChatGPT and similar AI solutions. Their arguments: it's complicated/it's expensive/it's not serious/we prefer "human decisions"/we don't want to be dependent, etc.

At Wezom, we actively use different neural networks to build intelligent solutions: from content generation in marketing to automating routine tasks. And we want to share our experience — how to use the OpenAI API, step by step and in plain language.

Presenting our OpenAI GPT integration tutorial. Here's what you’ll get:

- A simple step-by-step explanation of what the OpenAI API is and how to connect to it

- Code examples + OpenAI API key setup + common use cases

- Tips for avoiding pitfalls

- Security and optimization best practices

Let’s start with this: OpenAI use is not limited to chat-based Q&A. It also includes working with images (DALL·E), audio (Whisper), data vectorization (Embeddings), model customization (Fine-tuning), and content moderation (Moderation API).

Understanding this allows you to build smarter, cross-media products. In short, our guide will be useful to developers, marketers, designers, bloggers, managers, business owners, and startup founders.

OpenAI API Architecture Explained Simply

Everyone wants to know how to use OpenAI as effectively as possible. We believe it's important to first understand what happens when you send a request and how the model "understands" what is being asked.

In this section, we explain how the OpenAI API works in practice.

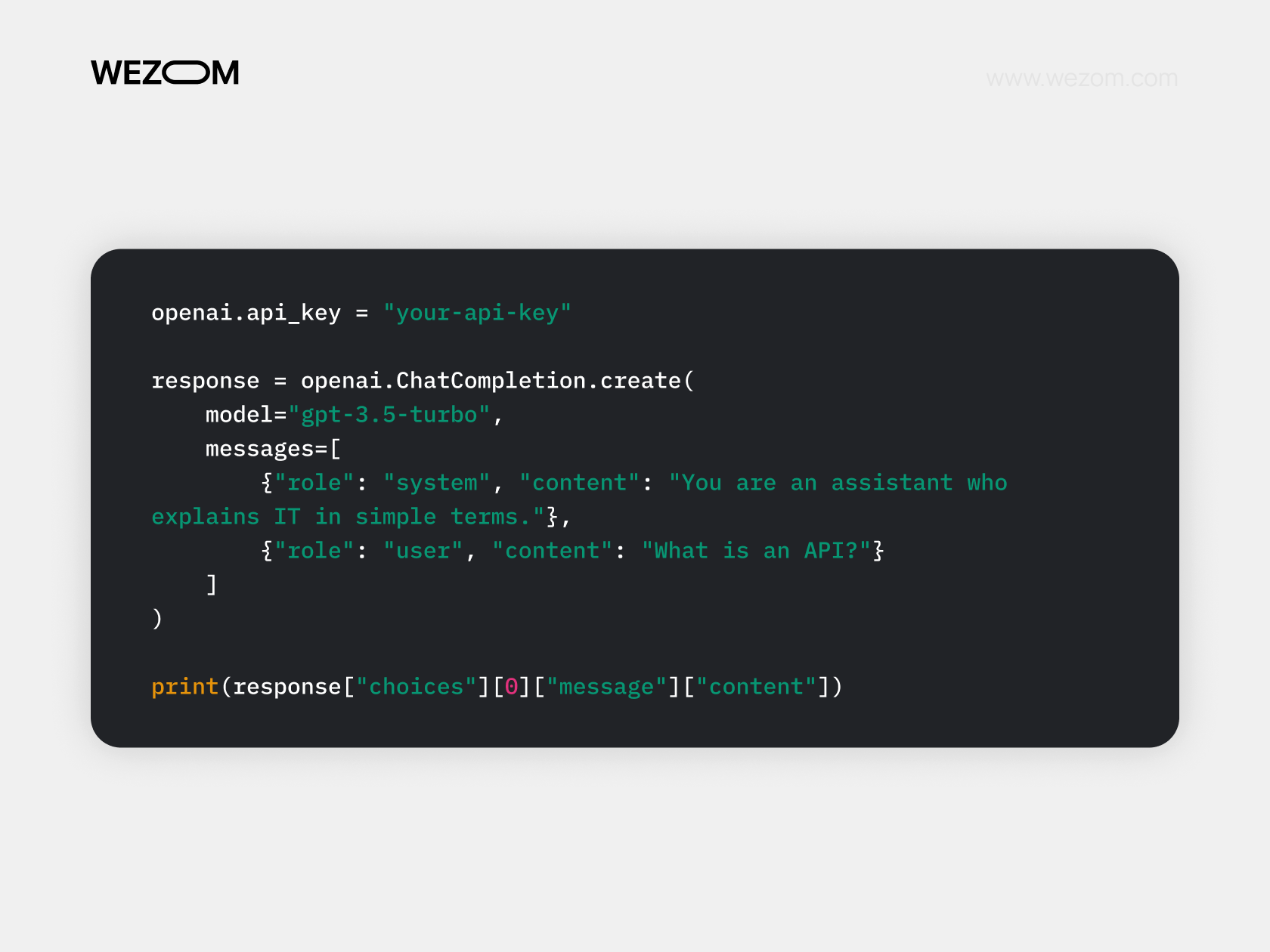

Each time you send a request, let’s say through the OpenAI GPT-4 API console, you’re essentially "talking" to the model: you define its role, context, and a question. The model processes this text, analyzes it, and based on its training, generates a response.

A request contains:

- model

- array of messages

- generation settings such as temperature (creativity) and max_tokens (maximum length of the response)

The API returns a JSON response containing the generated text (for chat models, in choices[0].message.content).
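To make this concrete, here is a minimal sketch of such a request in Python, using only the standard library. It builds the JSON body for the Chat Completions endpoint (https://api.openai.com/v1/chat/completions) and pulls the text out of a response. The model name, prompt, and settings are placeholders, and the sample response is hand-written and abbreviated, not real API output.

```python
import json

def build_chat_request(user_text: str, model: str = "gpt-4o") -> str:
    """Return the JSON body for a Chat Completions request."""
    body = {
        "model": model,  # which model to use
        "messages": [  # the conversation: a role plus content for each message
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_text},
        ],
        "temperature": 0.7,  # creativity (lower = more deterministic)
        "max_tokens": 200,   # upper bound on the length of the reply
    }
    return json.dumps(body)

def extract_text(response_json: str) -> str:
    """Pull the generated text out of a Chat Completions response."""
    data = json.loads(response_json)
    return data["choices"][0]["message"]["content"]

# Hand-written, abbreviated example of the response shape (illustrative only).
sample_response = json.dumps({
    "choices": [{"message": {"role": "assistant", "content": "Hello!"}, "finish_reason": "stop"}],
    "usage": {"prompt_tokens": 20, "completion_tokens": 2, "total_tokens": 22},
})

print(build_chat_request("Say hello"))
print(extract_text(sample_response))  # Hello!
```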

When your request reaches OpenAI’s server:

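- The tokenizer splits the text into tokens. Tokens are not always whole words: common words are often a single token, while rarer ones are split into parts, for example developer → de, velop, er (an illustration; the actual split depends on the tokenizer).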

- The model (GPT-4 or GPT-3.5) processes the tokens with a transformer, a neural network architecture; the model itself was trained on terabytes of text. Its attention mechanism "weighs" each token against the others to grasp meaning and context.

- The output is generated token by token. The model "predicts" the next token based on the previous ones.

The generated tokens are then converted back into text and returned to you.
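The token-by-token loop can be illustrated with a deliberately tiny toy. The "model" below is a hand-written table of next-token probabilities (an assumption for illustration only; a real GPT model computes these probabilities with a transformer over the whole context and a vocabulary of many thousands of tokens). Generation greedily appends the most likely next token until an end marker appears.

```python
# Toy next-token table: previous token -> {candidate next token: probability}.
# Purely illustrative; real models condition on the whole context, not just the last token.
NEXT_TOKEN_PROBS = {
    "<start>": {"de": 0.6, "the": 0.4},
    "de": {"velop": 0.9, "sign": 0.1},
    "velop": {"er": 0.7, "ment": 0.3},
    "er": {"<end>": 1.0},
    "ment": {"<end>": 1.0},
    "the": {"<end>": 1.0},
    "sign": {"<end>": 1.0},
}

def generate(max_tokens: int = 10) -> list[str]:
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker

tokens = generate()
print(tokens)           # ['de', 'velop', 'er']
print("".join(tokens))  # developer
```

With a temperature above 0, the API samples from the probability distribution instead of always taking the top candidate, which is why the same prompt can produce different answers.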

Supported Models and Their Use Cases

GPT-4, GPT-3.5-turbo, gpt-4-vision, text-embedding, whisper… At first glance, it seems like you need to read dozens of OpenAI tutorials to understand it all. But we believe this one table will be enough.

| Category | Model Name | Features/Capabilities | Use Cases |
|---|---|---|---|
| Text (LLM) | gpt-3.5-turbo | Fast, inexpensive chat model (Chat Completions API) | MVPs, bots, content generation |
| | gpt-4 | More accurate and smarter; handles more complex tasks | Data analysis, legal assistants |
| | gpt-4o | Multimodal (text, voice, image), the most flexible assistant | Assistants, customer support, RAG |
| Images | dall-e-2 | Image generation from prompts; image editing (inpainting) | Social media, marketing |
| | dall-e-3 | Higher-quality images; follows complex prompts more closely | Covers, design, illustrations |
| Embeddings | text-embedding-ada-002 | Turns text into vectors for search, clustering, matching | Semantic search, RAG, personalization |
| Speech → Text | whisper-1 | Multilingual speech recognition, audio transcription | Subtitles, interviews |
| Text → Speech | tts-1, tts-1-hd | Speech from text, realistic voices and intonation | Voiceovers, podcasts, dubbing, accessibility |
| Input: Images | gpt-4-vision-preview | Analyzes images (photos, graphs, UI) with text | Visual input, PDF analysis, UX checks |

Getting Started with OpenAI API

No matter your use case, using OpenAI always starts the same way — creating an account and obtaining your key.

We won’t go deep into the first steps. You’ll figure out the official website without trouble. You can register via email, Google, or Microsoft account. Phone number verification is required (use a VPN if your region isn’t supported).

Once you're in:

- In the top right corner, go to “API Keys”

- Click “Create new secret key”

- Name your key (e.g., MyFirstProject)

- Copy the key (you'll only see it once!)

The key will look like this: sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX (newer project keys start with sk-proj-).

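To use the OpenAI API in your projects, make the key available through environment variables or your dev environment's settings rather than hard-coding it in source code. The official Python library, for example, reads the OPENAI_API_KEY environment variable by default.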

OpenAI API Authentication and Key Management

To avoid key leaks, unauthorized access, and unexpected charges, it's crucial to set up proper authentication, access control, and monitoring.

In raw HTTP requests, send the key in the Authorization header (Authorization: Bearer <your key>); the official libraries, such as the Python one, add this header for you.
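For raw HTTP calls, a minimal standard-library sketch looks like this. It reads the key from the OPENAI_API_KEY environment variable and builds, but does not send, a request carrying the Authorization: Bearer header. The model name and prompt are placeholders.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_request(prompt: str) -> urllib.request.Request:
    """Build an authenticated Chat Completions request without sending it."""
    api_key = os.environ.get("OPENAI_API_KEY")
    if not api_key:
        # Fail loudly instead of sending a request that would get HTTP 401.
        raise RuntimeError("OPENAI_API_KEY is not set")
    body = json.dumps({
        "model": "gpt-4o-mini",  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # the key travels only in this header
            "Content-Type": "application/json",
        },
        method="POST",
    )

# To actually send it: urllib.request.urlopen(build_request("Hello"), timeout=30)
```

Because the key is read at call time from the environment, the same code works locally (with a .env file) and in production (with the platform's secret store), and the key never appears in the repository.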

For safe API key storage, we recommend using .env files, kept out of version control (add .env to .gitignore).
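In Python projects, the python-dotenv package's load_dotenv() is the usual way to load a .env file. To show what it does, here is a simplified standard-library stand-in. It is an assumption-laden sketch: it handles only KEY=value lines, # comments, and optional quotes, not the full .env syntax.

```python
import os
from pathlib import Path

def load_env_file(path: str = ".env") -> dict[str, str]:
    """Simplified .env loader: KEY=value lines, '#' comments, optional quotes.

    Existing environment variables are not overwritten (python-dotenv's default too).
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip().strip('"').strip("'")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded
```

A typical .env contains a single line such as OPENAI_API_KEY=sk-..., and the file itself is listed in .gitignore so the key never reaches the repository.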


You can also use your cloud provider’s tools (e.g., AWS Secrets Manager / Google Cloud Secret Manager) or simply store the info in a password manager.

When it comes to using OpenAI in a team, our advice is simple: use separate keys for separate projects. Also, use OpenAI Organizations (in your settings) and assign roles to team members:

- Owner (full access)

- Member (API only)

- Read-only (analytics only)

Important! Set rate limits at the app level or via a proxy/firewall to avoid hitting quotas and being blocked.
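An app-level limit can be as simple as a token bucket: each outgoing API call spends one token, and tokens refill at a fixed rate. This is a generic technique, not an OpenAI feature; the rate and capacity values are placeholders to tune against your account's actual limits, and the clock is injectable so the behavior can be tested.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow at most `rate` calls per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start with a full bucket
        self.last = clock()

    def try_acquire(self) -> bool:
        """Spend one token if available; return False if the caller should wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# Usage with a fake clock: 1 call/second, bursts of 2.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
print([bucket.try_acquire() for _ in range(3)])  # [True, True, False]
fake_now[0] = 1.0
print(bucket.try_acquire())  # True (one token refilled)
```

In a real app, a call that gets False would wait briefly (or be queued) instead of hitting the API and receiving a 429 error.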

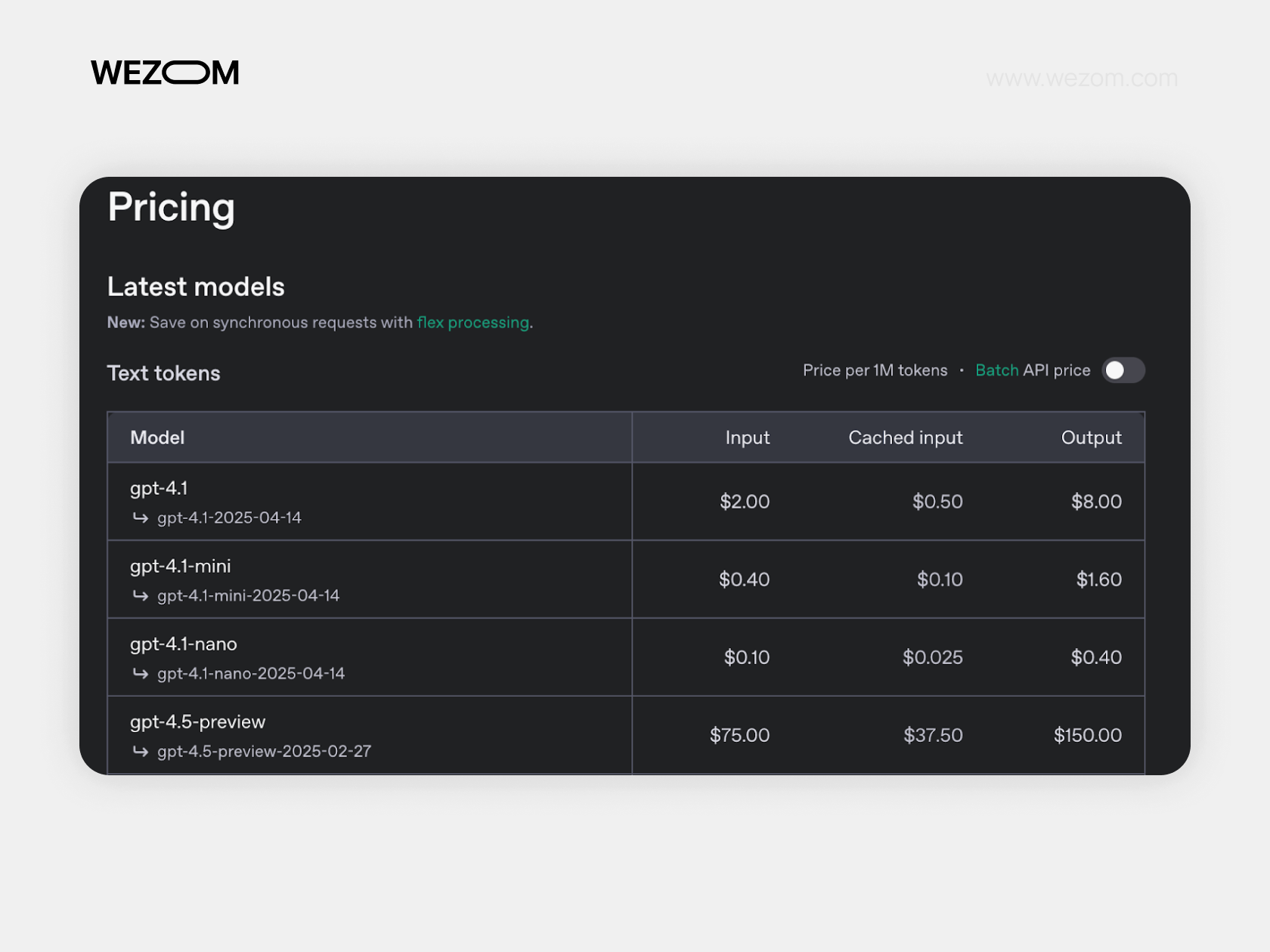

Understanding OpenAI Pricing and Limits

Before going further, we recommend exploring the OpenAI pricing plans.

Usually at this point, you'll be wondering how to buy OpenAI API access at the best rate for your goals. But there are no traditional plans like Free/Standard/Pro: you pay as you go, for the tokens you use. Let's unpack the pricing model and what tokens mean for your bill.

Input — tokens you send to the model: your prompt, chat history, system instructions, context. Example:

“Write a description of a startup that uses AI to generate presentations.” These are billed as input tokens; for GPT-4 Turbo, the price is $0.01 per 1,000 input tokens.

Output — tokens you receive in response. The longer the text, the more output tokens. GPT-4 Turbo costs $0.03 per 1,000 output tokens.

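Cached input: reused tokens. This is a clever feature: if a new request starts with the same content as a recent one (for example, identical system instructions), OpenAI can reuse the cached portion and bill those tokens at a discount instead of the full input price.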
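To turn these rates into a budget, multiply each token count by its price per 1,000 tokens. The sketch below uses the $0.01 (input) and $0.03 (output) per 1,000 tokens figures quoted above as defaults. Prices change and differ by model, so treat them as placeholders, and note that cached-input discounts are left out for simplicity.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1k: float = 0.01,
                  output_price_per_1k: float = 0.03) -> float:
    """Estimated cost in USD of one request, given token counts and per-1K prices."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost

# A 1,500-token prompt with an 800-token answer:
print(round(estimate_cost(1500, 800), 4))  # 0.039
```

At these rates, 10,000 such requests a month would come to about $390, which shows why trimming prompts and capping max_tokens pays off.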

On one hand, this lets you use the OpenAI API more flexibly while keeping a close eye on costs. On the other hand, the system can seem complicated and confusing the first time you encounter it. In any case, the OpenAI API documentation and pricing page are publicly available, so you can always check current rates and find answers to your questions.

Overview of OpenAI Use Cases

Now it's time to talk about how to use the OpenAI API from a practical perspective: through examples and real-world tasks you might face. We didn’t turn this into a strict classification. So what follows is a kind of mix of possible use cases, based on the “author’s choice” principle.

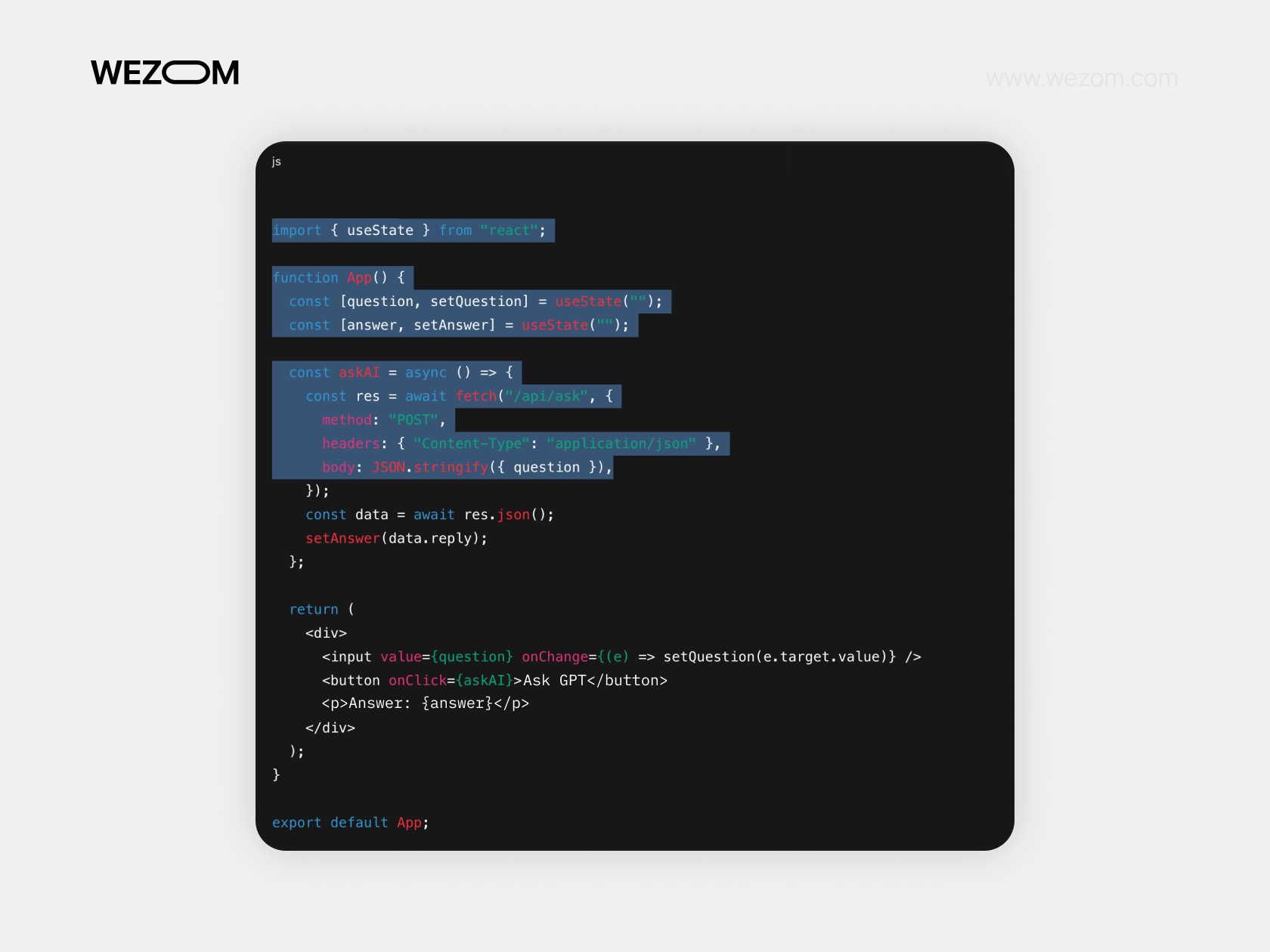

Integrating OpenAI API into Web Applications

It’s a way to teach your product (a website or a service) to communicate with artificial intelligence: generate text, analyze messages, suggest solutions to users, or even write code. Simply put, this is when a button on your website “summons” the power of AI.

You already know what the OpenAI API is and what it’s capable of. So you can probably guess how this integration might be useful:

- to enrich functionality: from chatbots to auto-replies for emails;

- to test ideas without major investments: MVPs, customer support, AI interfaces;

- for content personalization, data summarization, and report generation;

- to generate content on demand, directly in a CMS or on a landing page.

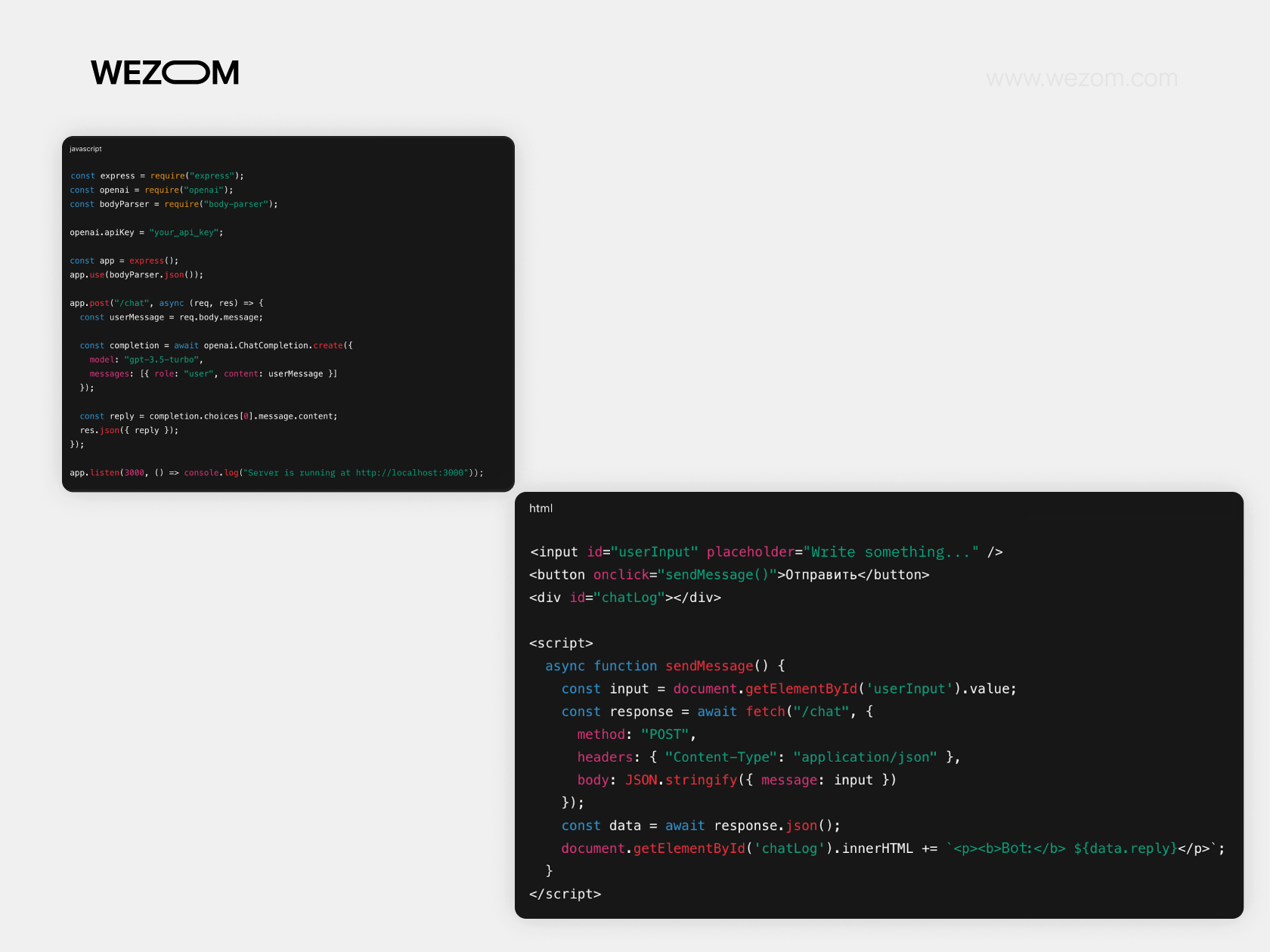

Remember: never embed the API key in the frontend, where anyone can read it from the page source or network requests. The solution is to route requests through your backend, which keeps the key on the server.
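The backend's job can be sketched as a small, framework-agnostic handler (an assumption: you would wire it into Express, FastAPI, Flask, or similar). It validates the browser's input and delegates the actual OpenAI call to a call_openai function you supply, so the API key exists only on the server. Both handle_chat and call_openai are hypothetical names, and the length limit is a placeholder.

```python
from typing import Callable

MAX_MESSAGE_CHARS = 2000  # hypothetical limit to keep prompts (and costs) bounded

def handle_chat(request_body: dict, call_openai: Callable[[list[dict]], str]) -> dict:
    """Validate a browser request and return only the model's reply.

    `call_openai` runs server-side and is the only place that uses the API key.
    """
    message = request_body.get("message")
    if not isinstance(message, str) or not message.strip():
        return {"status": 400, "error": "message must be a non-empty string"}
    if len(message) > MAX_MESSAGE_CHARS:
        return {"status": 413, "error": "message too long"}
    messages = [
        # The system prompt is set on the server, so the browser cannot replace it.
        {"role": "system", "content": "You are a helpful support assistant."},
        {"role": "user", "content": message.strip()},
    ]
    reply = call_openai(messages)
    return {"status": 200, "reply": reply}

# Example with a stand-in for the real API call:
fake_call = lambda msgs: f"echo: {msgs[-1]['content']}"
print(handle_chat({"message": "Hi"}, fake_call))  # {'status': 200, 'reply': 'echo: Hi'}
```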

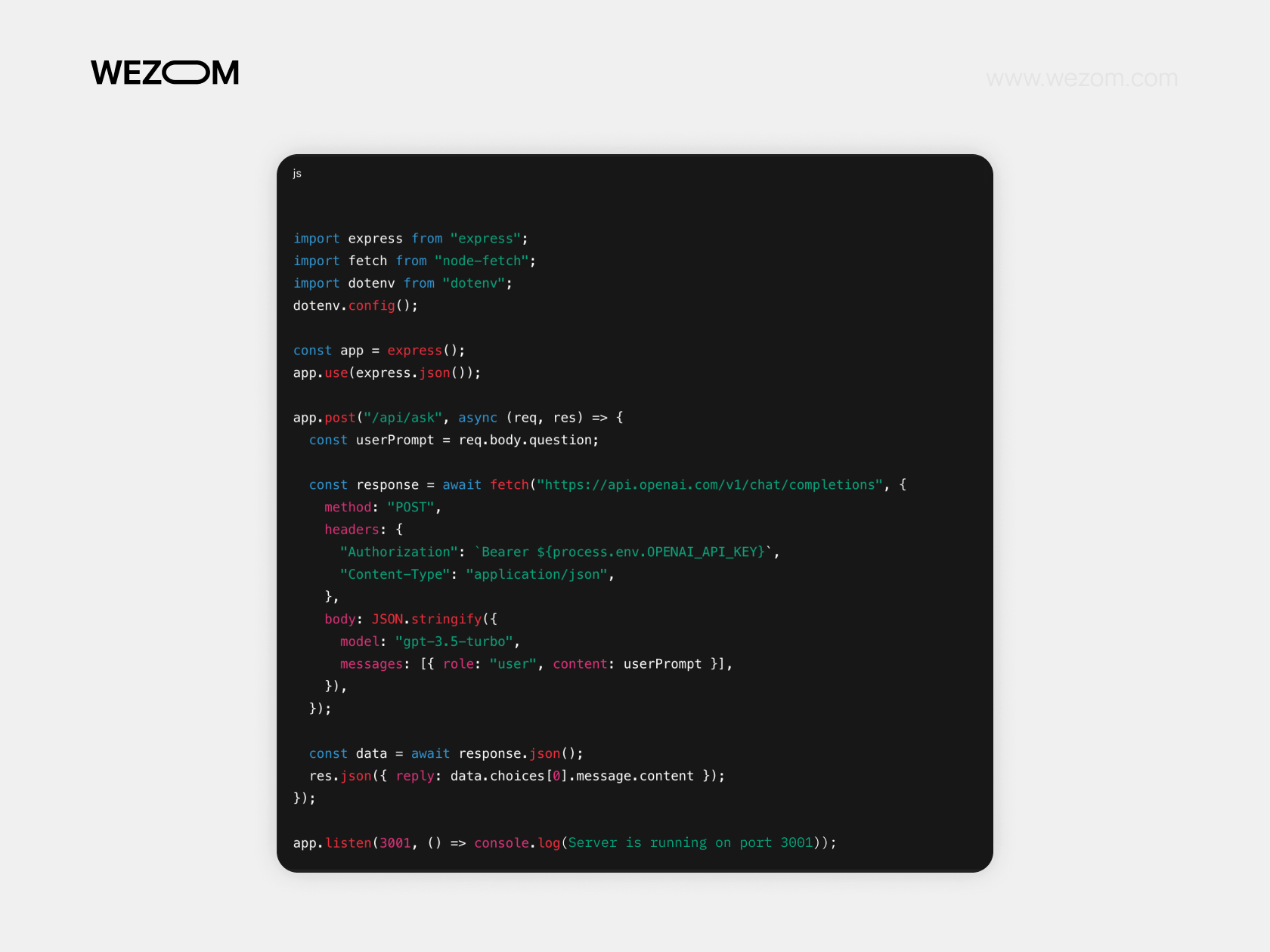

Example: A Chat with GPT via React + Node.js

If you want responses to appear “live,” as in ChatGPT, use streaming (stream: true in the request): the data arrives in chunks, and you display each chunk as it is generated. This is possible both in Node.js and in Python (using FastAPI, Flask, etc.).
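With streaming enabled, the Chat Completions endpoint sends server-sent events: lines of the form data: {...}, where each JSON chunk carries a piece of text in choices[0].delta.content, and the stream ends with data: [DONE]. Here is a minimal sketch of turning those lines into text; the sample lines are hand-written and abbreviated.

```python
import json
from typing import Iterable, Iterator

def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from Chat Completions streaming lines (SSE format)."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end of stream
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:  # the first and last chunks may carry no content
                yield text

sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    '',
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo!"}}]}',
    'data: [DONE]',
]
for fragment in iter_stream_text(sample):
    print(fragment, end="", flush=True)  # display each piece as it arrives
```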

Using OpenAI for SaaS, Automation, and DevTools

The OpenAI API offers powerful tools for building Software-as-a-Service (SaaS) products, automating business processes, and developing tools for developers (DevTools).

Key OpenAI use cases for software development:

- automated customer support (chatbots, helpdesks);

- content generation and marketing personalization;

- analysis and classification of requests, reviews, tickets;

- translation and localization of content;

- intelligent search and recommendation systems;

- code writing and validation automation;

- generation of documentation and test cases for developers.

For such tasks, GPT-4 is a great fit. It can even be integrated into a code editor or CI pipeline.

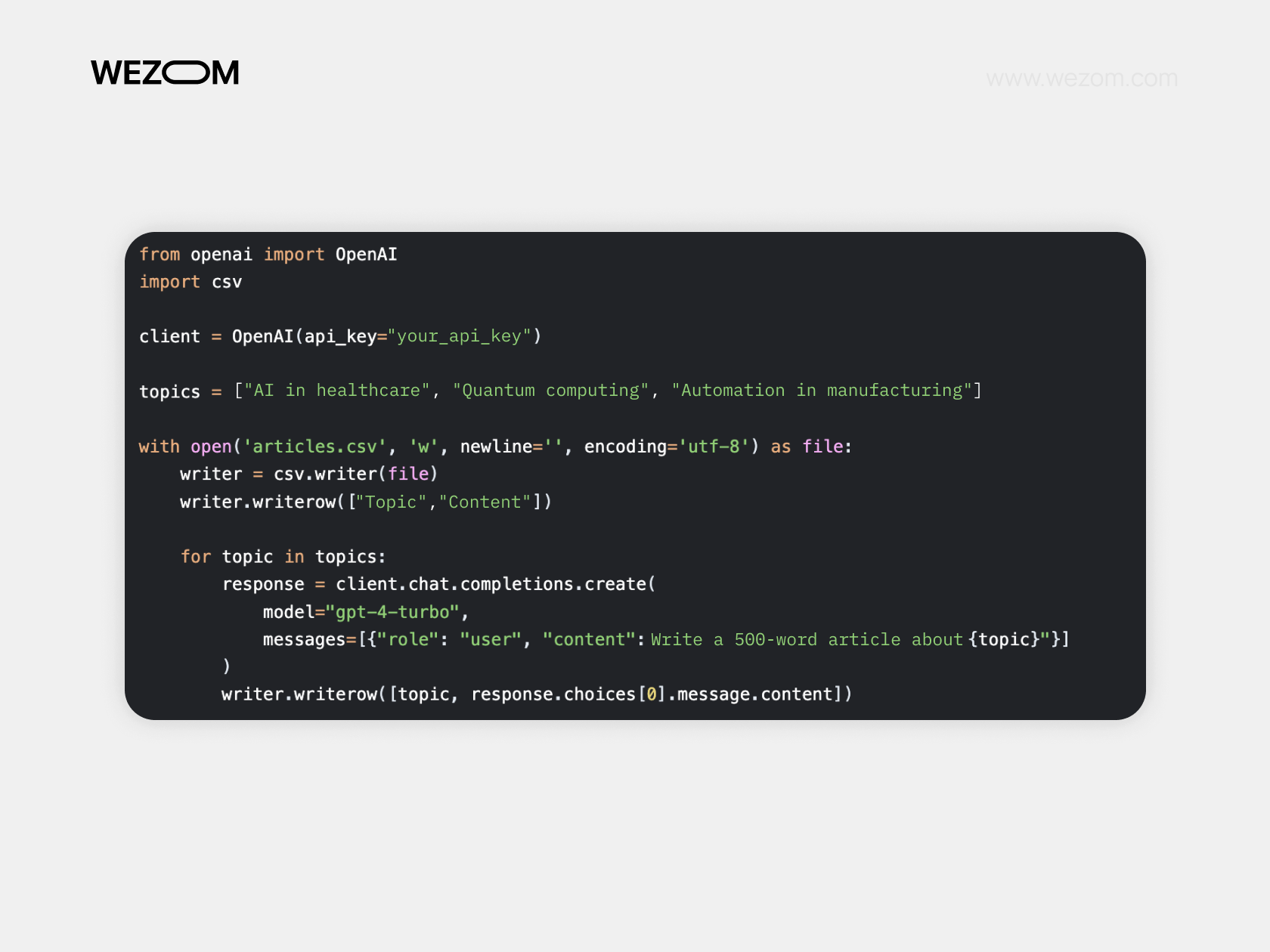

Text generation via OpenAI API

This is one of the most powerful and in-demand OpenAI use cases. All it takes is sending an instruction (a prompt) via the API, and the model generates a relevant piece of text. Here are our top 5 tips for getting the most relevant, high-quality responses:

1. Send structured prompts using roles (system, user):

```python
messages = [
    {"role": "system", "content": "You're a marketing expert. Keep your answers short and to the point."},
    {"role": "user", "content": "How can I increase landing page conversions?"},
]
```

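2. Adjust max_tokens to match your task:

As a rule of thumb, 1 token ≈ 4 characters ≈ ¾ of a word in English text; Cyrillic and other non-Latin scripts usually need more tokens for the same amount of text.

For longer texts, request the response in parts.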

3. Control temperature:

   - 0.2 for accurate, technical answers;
   - 0.7 for creativity (storytelling, ideas).

4. Use streaming for chat interfaces.

5. For complex tasks (document generation, CRM integration), combine the API with other tools (Zapier, Make).
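Tips 2 and 3 can be combined in code. The sketch below assumes a complete(messages, max_tokens, temperature) function (hypothetical; it would wrap the actual API call and return the reply text plus the response's finish_reason field). When finish_reason is "length", the answer was cut off by max_tokens, so the sketch asks the model to continue and stitches the parts together. The temperature presets are the starting points from tip 3, not fixed rules.

```python
from typing import Callable

# Temperature presets from tip 3.
TEMPERATURE = {"technical": 0.2, "creative": 0.7}

# Hypothetical signature: (messages, max_tokens, temperature) -> (text, finish_reason)
Completion = Callable[[list[dict], int, float], tuple[str, str]]

def generate_long_text(prompt: str, complete: Completion, task: str = "technical",
                       max_tokens: int = 500, max_parts: int = 5) -> str:
    """Request a long answer in parts while the model stops because of the token limit."""
    messages = [{"role": "user", "content": prompt}]
    parts = []
    for _ in range(max_parts):
        text, finish_reason = complete(messages, max_tokens, TEMPERATURE[task])
        parts.append(text)
        if finish_reason != "length":  # "stop" means the model finished on its own
            break
        # Feed the partial answer back and ask for the rest.
        messages.append({"role": "assistant", "content": text})
        messages.append({"role": "user", "content": "Continue exactly where you stopped."})
    return "".join(parts)

# Stand-in for the real API call: one truncated part, then a finished one.
_replies = iter([("Part one, ", "length"), ("part two.", "stop")])
fake_complete = lambda messages, max_tokens, temperature: next(_replies)
print(generate_long_text("Write a long article", fake_complete))  # Part one, part two.
```

The max_parts cap is a safety net so a misbehaving prompt cannot trigger an unbounded (and costly) chain of requests.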

Also possible: AI Content Generation at Scale.

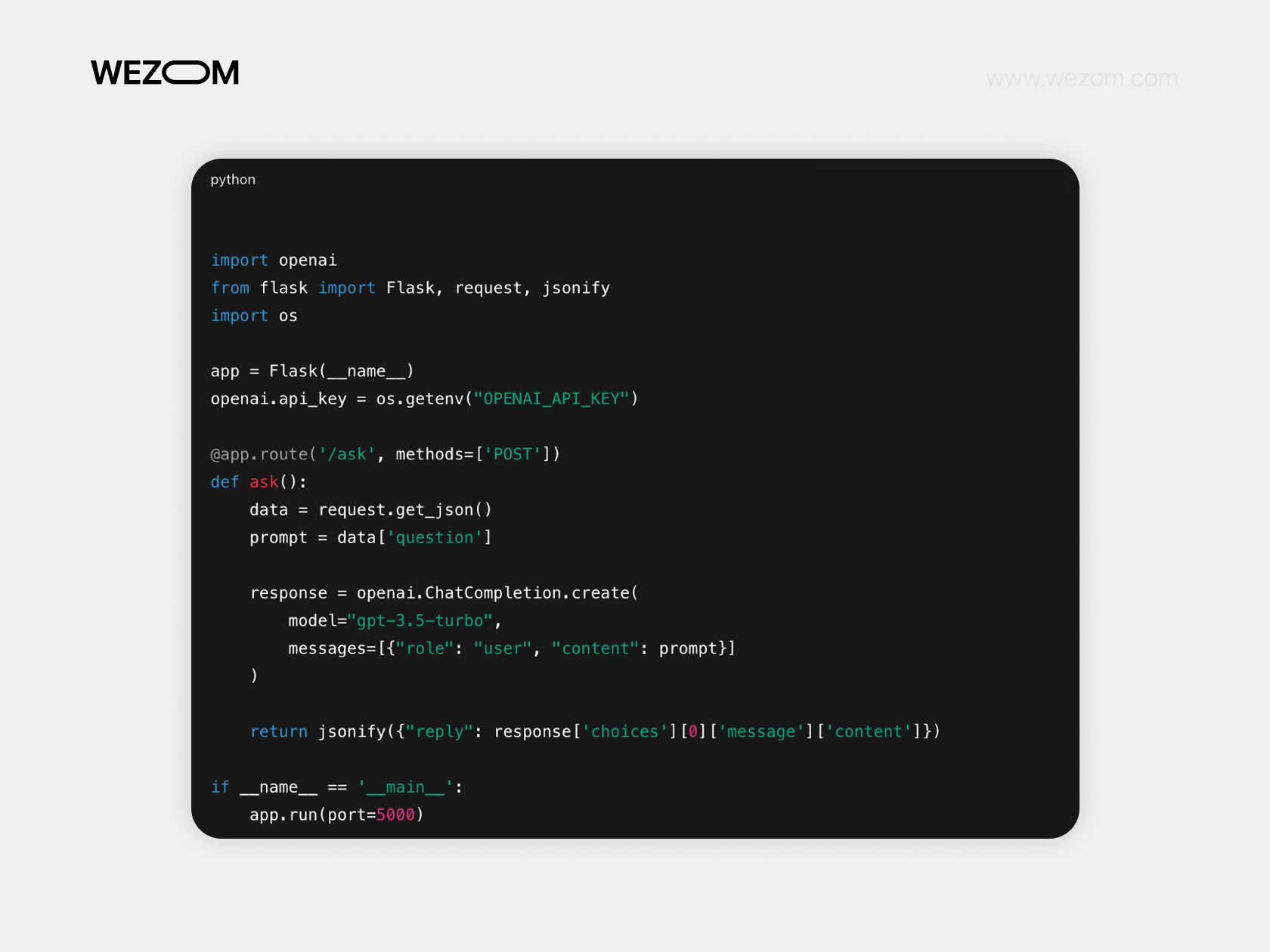

Chatbot Integration

Building your own chatbot is a great way to automate user interaction and create an interactive interface without complex logic. With GPT-3.5 or GPT-4, your bot can engage in meaningful dialogue, answer questions, help users navigate your site, assist customers, and even generate text on the fly. A quick OpenAI tutorial for this:

- Set up a backend (Node.js/Python) that sends requests to OpenAI

- Build a frontend (React/Telegram/WhatsApp) for user interaction

Tips for improvement! Keep the message history in the messages array (role: "user" and role: "assistant") so the conversation stays coherent. Use max_tokens and temperature to control response length and creativity.

Add a “bot is typing” indicator to enhance the user experience.
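Keeping the full history makes every request longer (and more expensive), so chatbots usually trim it. A minimal sketch, assuming a crude budget measured in characters (a real implementation would count tokens, for example with OpenAI's tiktoken library): the system message is always kept, and the oldest user/assistant turns are dropped first.

```python
def trim_history(messages: list[dict], max_chars: int = 4000) -> list[dict]:
    """Keep the system prompt plus the most recent messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    dialogue = [m for m in messages if m["role"] != "system"]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest message, keeping as many as fit.
    for message in reversed(dialogue):
        cost = len(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))

history = [
    {"role": "system", "content": "You are a support bot."},
    {"role": "user", "content": "A" * 3600},
    {"role": "assistant", "content": "B" * 500},
    {"role": "user", "content": "Where is my order?"},
]
print([m["role"] for m in trim_history(history)])  # ['system', 'assistant', 'user']
```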

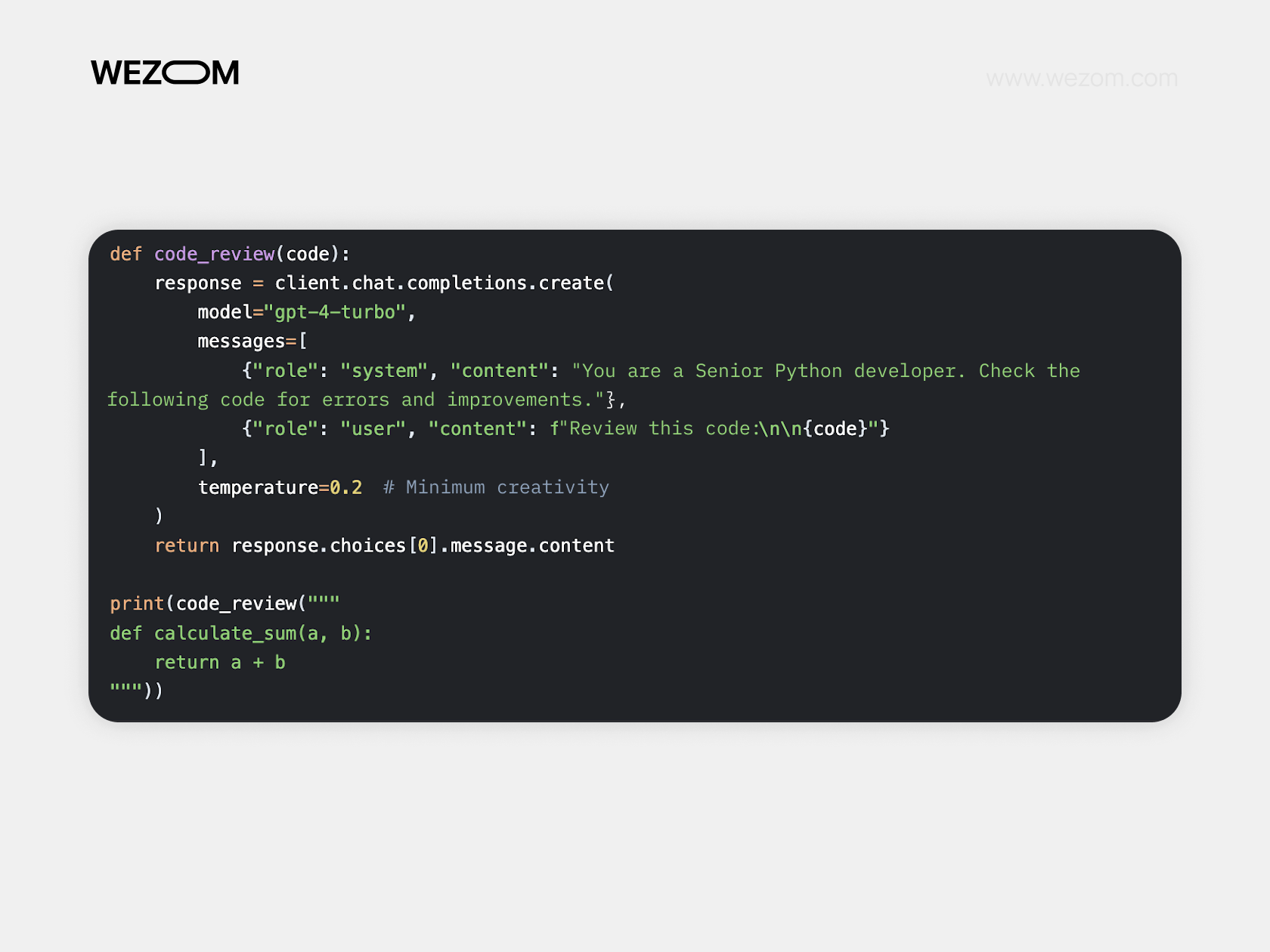

Code Completion and AI Pair Programming

Another way technical professionals can use the OpenAI API. Code reviews and vulnerability detection have always taken time, but with AI, much of the routine checking can be automated, and you get real-time suggestions for solutions.
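One common setup is a CI step that sends a pull request's diff to the model along with reviewer instructions. The sketch below only prepares the messages; the reviewer prompt, the character cap, and the function name are assumptions, and the result would be passed to a Chat Completions call like any other request. Capping the diff keeps the request within the model's context window and your budget.

```python
REVIEW_INSTRUCTIONS = (
    "You are a senior code reviewer. Point out bugs, security issues "
    "(e.g. injection, hard-coded secrets) and unclear code. "
    "Reference file names and lines from the diff. Be concise."
)

def build_review_messages(diff: str, max_diff_chars: int = 12000) -> list[dict]:
    """Build Chat Completions messages asking the model to review a unified diff."""
    truncated = len(diff) > max_diff_chars
    body = diff[:max_diff_chars]
    note = "\n\n[Diff truncated; review only the part shown.]" if truncated else ""
    return [
        {"role": "system", "content": REVIEW_INSTRUCTIONS},
        {"role": "user", "content": f"Review this diff:\n\n{body}{note}"},
    ]

# In CI, the diff could come from a command such as `git diff origin/main...HEAD`.
sample_diff = '+API_KEY = "sk-123"\n+query = "SELECT * FROM users WHERE id=" + user_id'
for m in build_review_messages(sample_diff):
    print(m["role"], "->", m["content"][:60])
```

The model's comments are suggestions: a human reviewer should still confirm them before merging.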

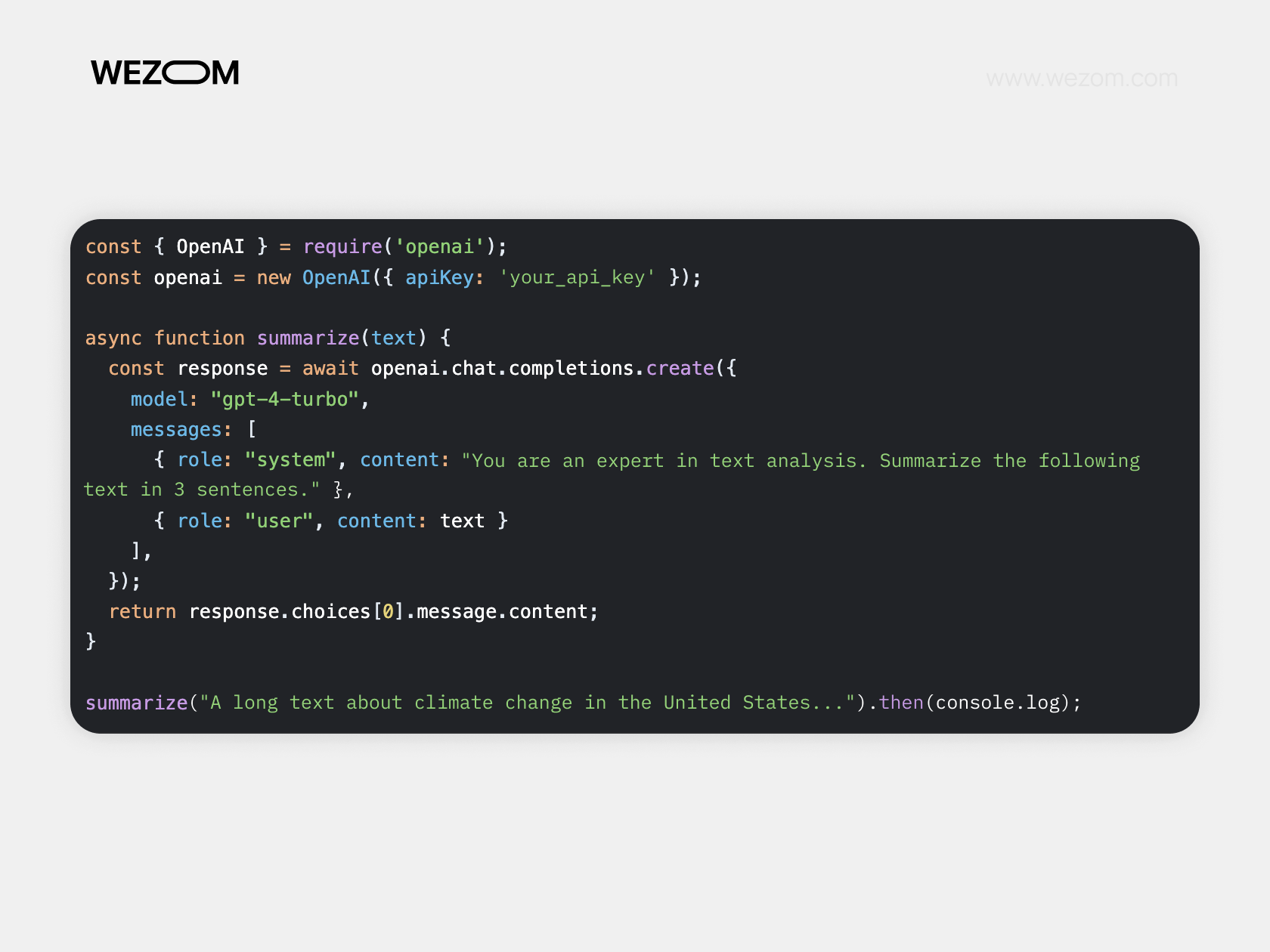

AI-Powered Search and Summarization

A simple solution when large documents (PDFs, articles, letters) need to be analyzed — cases where manual search is not only inefficient but practically impossible. AI can extract key information, generate summaries, and perform vector searches (via the Embeddings API) for semantic analysis. It can be especially useful for:

- law firms: analyzing court decisions and contracts

- financial companies: processing news, reports, transactions

- HR departments: summarizing resumes, finding suitable candidates

- medical institutions

- education sector
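Under the hood, semantic search with embeddings compares vectors: each document chunk and the query are converted into vectors (for example with text-embedding-ada-002), and the chunks whose vectors point in the most similar direction are the most relevant. The sketch below shows only the ranking step, using cosine similarity; the three-dimensional vectors are made up for illustration (real ada-002 embeddings have 1,536 dimensions).

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the IDs of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda d: cosine_similarity(query_vec, docs[d]), reverse=True)
    return ranked[:k]

# Made-up vectors; in practice they come from the Embeddings API.
docs = {
    "contract_termination_clause": [0.9, 0.1, 0.0],
    "quarterly_revenue_report": [0.1, 0.9, 0.2],
    "employee_resume": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # e.g. "How can this agreement be ended?"
print(top_k(query, docs, k=1))  # ['contract_termination_clause']
```

In a RAG setup, the top-ranked chunks are then pasted into the prompt so the model can summarize or answer questions about them.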

Let us emphasize once again: what’s described in this section is not intended as a full-fledged OpenAI API tutorial. The range of AI and ML applications is much broader than what we could cover here. Still, the use cases mentioned are among the most common in our experience.

Using OpenAI with Other APIs and Platforms

OpenAI can be integrated with other platforms via Zapier or direct API calls. Zapier allows no-code connections between OpenAI and Gmail, Slack, Notion, Google Sheets, CRM systems, and more, for example: to generate emails, summarize notes, send auto-replies, or create content from templates.

How to set it up:

- Create a Zap (automation script):

  - Trigger: e.g., a new message in Slack
  - Action: send data to OpenAI (via “OpenAI” or “Webhooks by Zapier”)
  - Output: the result goes to the desired tool (Google Docs, CRM, email, etc.)

Tip! For more complex logic, use Webhooks by Zapier + OpenAI API.

With Slack, Notion, or CRM systems (like HubSpot or Salesforce), OpenAI helps automate routine tasks: composing replies, analyzing messages, enriching notes, writing follow-ups. Through Zapier, this works visually, without code. Developers can also build bots and workflows using Python and official APIs.

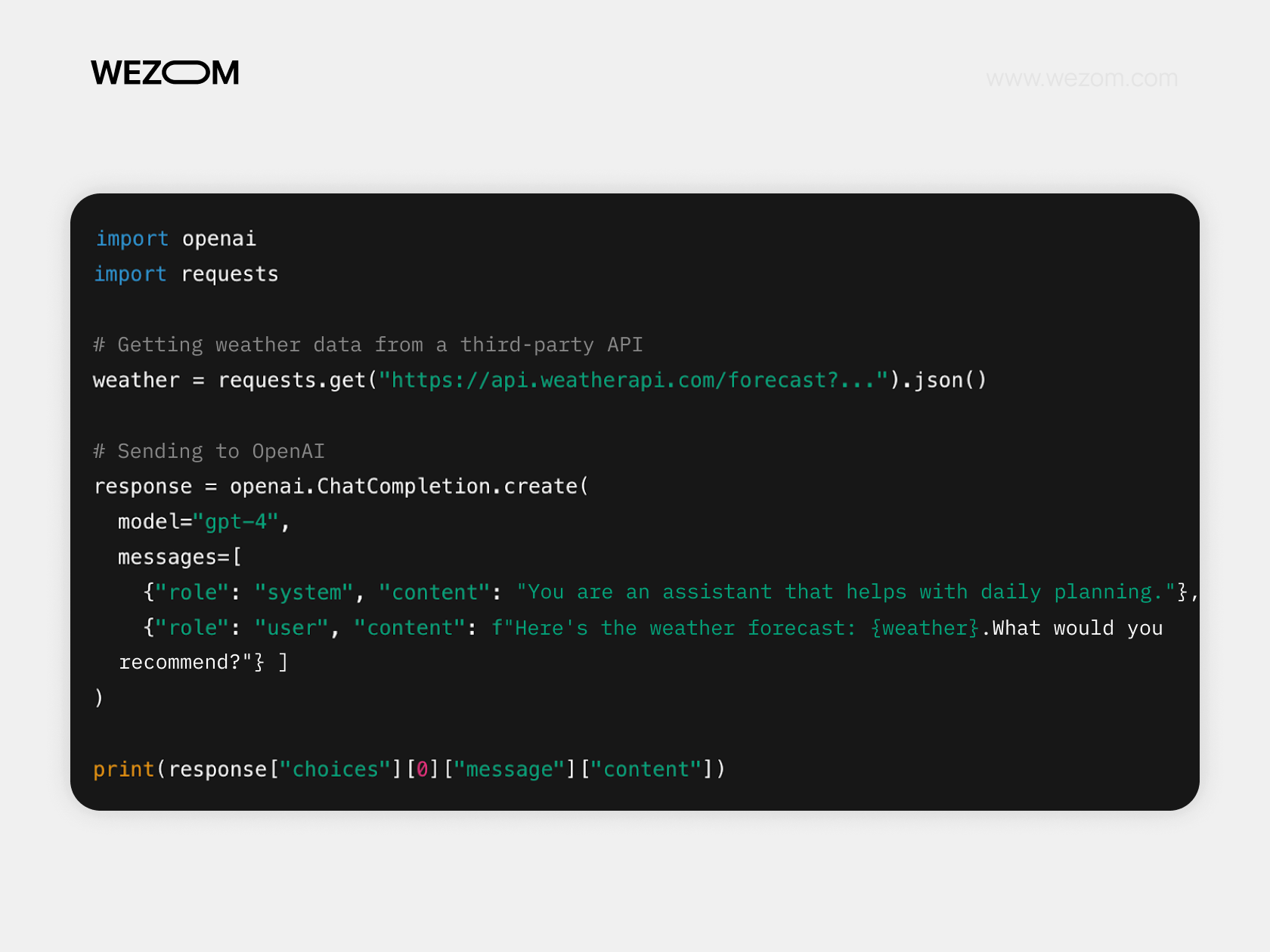

For advanced use cases, connect OpenAI directly with any third-party API — for example, to fetch weather, news, or client data, process it via GPT, and output the result to the desired app. Tools like LangChain, Retool, and Make are also useful for flexible logic and visual workflows.
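The fetch, process, output pattern can be sketched as three pluggable steps. All three functions passed in below are hypothetical stand-ins (a weather API client, a GPT call, a Slack or email sender); the point is only how they are wired together.

```python
from typing import Callable

def run_pipeline(fetch: Callable[[], dict],
                 process: Callable[[str], str],
                 deliver: Callable[[str], None]) -> str:
    """Fetch data from a third-party API, let GPT process it, and send the result on."""
    data = fetch()  # e.g. weather, news, or client data
    # Turn the structured data into a prompt the model can work with.
    prompt = "Write a one-sentence update for a team channel:\n" + "\n".join(
        f"{key}: {value}" for key, value in sorted(data.items())
    )
    result = process(prompt)  # e.g. a Chat Completions call
    deliver(result)  # e.g. post to Slack or append to a CRM note
    return result

# Stand-ins for the real integrations:
outbox = []
run_pipeline(
    fetch=lambda: {"city": "Kyiv", "temp_c": 21, "conditions": "sunny"},
    process=lambda prompt: "Sunny and 21°C in Kyiv today.",
    deliver=outbox.append,
)
print(outbox)  # ['Sunny and 21°C in Kyiv today.']
```

Keeping each step behind a plain function makes it easy to swap the data source or destination, or to replace the custom code with LangChain or Make once the logic grows.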

Security, Compliance, and Ethical Use of OpenAI

If you're looking to integrate AI into your product or automate workflows, start with the official OpenAI integration guide. Beyond the technical side, it makes clear that using AI is not just about innovation but also about responsibility.

This section explains how to work with OpenAI in a secure, legal, and ethical way — minimizing risks for both users and businesses.

Privacy issue #1: When you send a request to OpenAI, the model processes your text. But what if it includes personal data, trade secrets, or other sensitive info?

Solution: Don’t pass personal data (like phone numbers, passport details, medical records) via the API. For example, if you're automating complaint processing, strip names and contacts before submission.

Use anonymization. Say you have a review: “Hannah N. from New York said order #12345 arrived damaged.” Before sending, change it to: “A customer from a major city reported that product X was damaged.”
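Simple cases of this can be automated with regular expressions before the text leaves your server. The patterns below are illustrative assumptions covering emails, phone numbers, and order numbers in one format. Real PII detection (especially of names, like "Hannah N." above) needs more robust tooling, so treat this as a first filter, not a guarantee.

```python
import re

# Illustrative patterns only; tune them to your data. Order matters: emails first.
PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    (re.compile(r"\+?\d[\d\s()-]{7,}\d"), "[PHONE]"),
    (re.compile(r"#\d+"), "[ORDER]"),
]

def redact(text: str) -> str:
    """Replace matches of each pattern with a placeholder before sending text to the API."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text

review = "Order #12345 arrived damaged. Call me at +1 (555) 123-4567 or write to hannah@example.com"
print(redact(review))
# Order [ORDER] arrived damaged. Call me at [PHONE] or write to [EMAIL]
```

The same function can be applied before writing requests to your logs, which also helps with the "remove personal data from logs" rule below.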

Tip! Review OpenAI's API data usage policies (by default, data sent via the API is not used to train OpenAI's models), and for corporate use, consider the Enterprise plan, which includes strict confidentiality terms.

Three golden rules for responsible API use:

- Transparency: users should know they're interacting with AI. Include a disclaimer like: “This chatbot is AI-powered. Responses may be inaccurate.”

- Quality control: regularly check that the model does not generate harmful or false content.

- Legal compliance: follow the privacy laws that apply to you, such as GDPR in the EU or, in the US, COPPA (for children's data) and state privacy laws like the CCPA. Remove personal data from logs.

OpenAI's guidance stresses that developers (not the platform) are responsible for how AI is used, including any potential harm. So, whatever tools you build on top of the OpenAI API, remember: AI should benefit society and must not reinforce bias or injustice.

Troubleshooting Common OpenAI API Issues

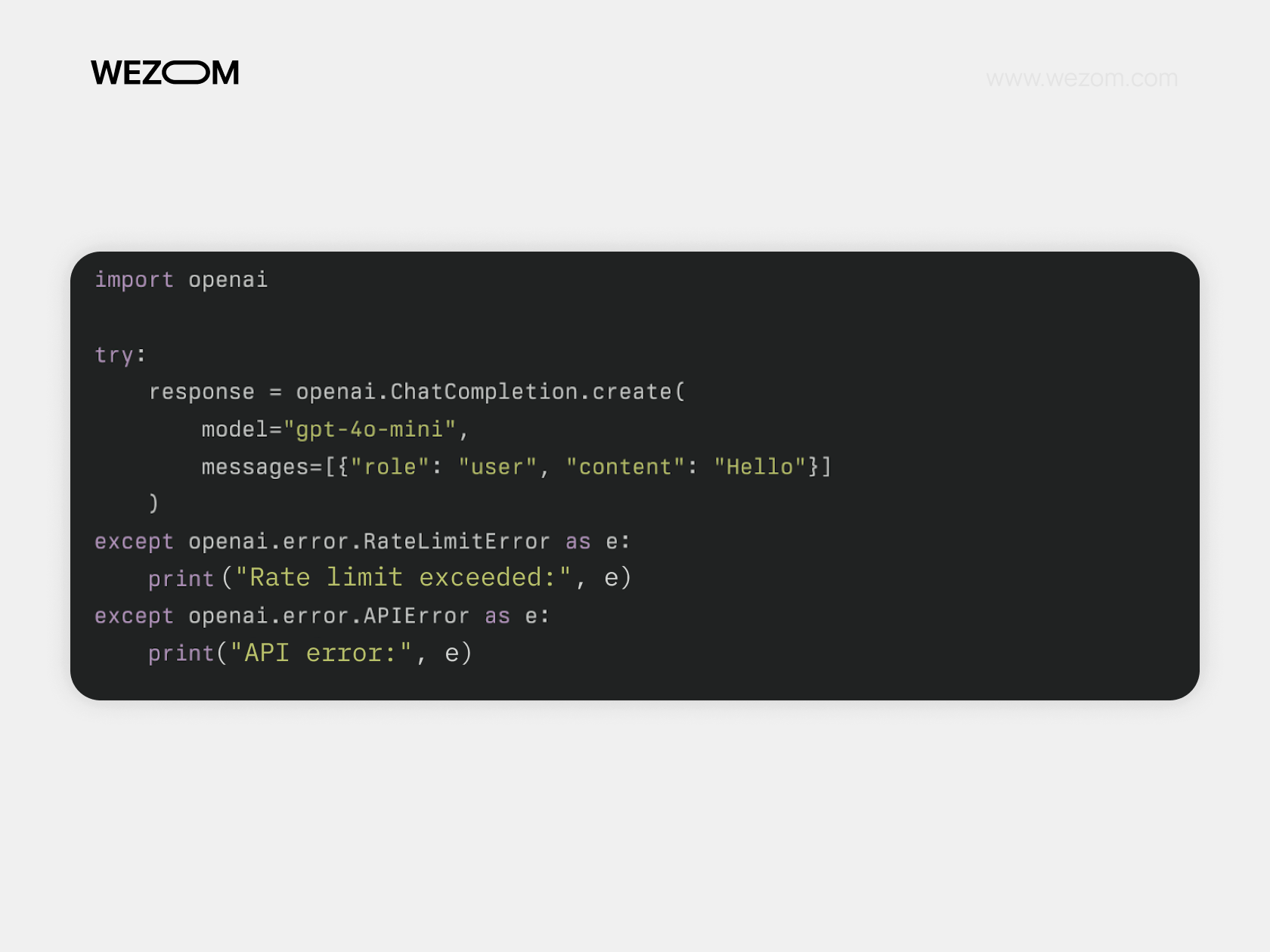

Most OpenAI API usage examples show only the “working” side of things, overlooking the fact that implementation and operation can come with their own set of issues. Here are key tips:

- Check error codes: the API provides detailed messages, for instance, 401 (invalid or missing API key), 400 (incorrect parameters), 429 (rate limit exceeded).

- If no response is received within the timeout period, check the OpenAI status page and make sure your network is stable.

- Use try-except blocks to catch and handle errors, for instance, retry a request during temporary failures or rate limits.
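A retry loop with exponential backoff is the standard way to handle 429 and temporary 5xx errors. The sketch below is library-agnostic: ApiError is a hypothetical exception carrying the HTTP status (with the official Python library you would catch its own exception types, such as RateLimitError, instead), and sleep is injectable for testing.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class ApiError(Exception):
    """Hypothetical error type carrying the HTTP status code of a failed request."""
    def __init__(self, status: int, message: str = ""):
        super().__init__(message or f"HTTP {status}")
        self.status = status

RETRYABLE = {429, 500, 502, 503}  # rate limits and temporary server errors

def with_retries(call: Callable[[], T], max_attempts: int = 5, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying retryable errors with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except ApiError as err:
            if err.status not in RETRYABLE or attempt == max_attempts - 1:
                raise  # 400/401 errors will not fix themselves; give up immediately
            # Wait 1s, 2s, 4s, ... plus a little randomness so clients don't retry in sync.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.5))

attempts = []
def flaky_call():
    attempts.append(1)
    if len(attempts) < 3:
        raise ApiError(429)  # rate limited twice...
    return "ok"  # ...then succeeds

print(with_retries(flaky_call, sleep=lambda seconds: None))  # ok
```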

Common invalid request issues and solutions:

- Invalid API key: ensure it’s current and correctly formatted.

- Parameter errors: e.g., incorrect message array structure, values out of range (like temperature > 2.0), token limit exceeded, or invalid JSON.

- Data validation: make sure all required fields are present and correctly filled in. Use JSON validators to check the request body.
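Catching parameter errors before the request is sent saves a round trip and a 400 response. The checks below mirror the rules in this list (message structure, roles, temperature between 0 and 2, positive max_tokens). They are a hand-written, simplified sketch, not an official schema: it accepts only the three roles used in this article (the API also supports others, such as "tool") and only plain-string content.

```python
VALID_ROLES = {"system", "user", "assistant"}

def validate_chat_request(body: dict) -> list[str]:
    """Return a list of problems found in a Chat Completions request body (empty if OK)."""
    errors = []
    if not isinstance(body.get("model"), str) or not body["model"]:
        errors.append("model is required")
    messages = body.get("messages")
    if not isinstance(messages, list) or not messages:
        errors.append("messages must be a non-empty list")
    else:
        for i, m in enumerate(messages):
            if not isinstance(m, dict) or m.get("role") not in VALID_ROLES:
                errors.append(f"messages[{i}] has an invalid role")
            elif not isinstance(m.get("content"), str):
                errors.append(f"messages[{i}] content must be a string")
    temperature = body.get("temperature", 1)
    if not isinstance(temperature, (int, float)) or not 0 <= temperature <= 2:
        errors.append("temperature must be between 0 and 2")
    max_tokens = body.get("max_tokens")
    if max_tokens is not None and (not isinstance(max_tokens, int) or max_tokens <= 0):
        errors.append("max_tokens must be a positive integer")
    return errors

print(validate_chat_request({"model": "gpt-4o",
                             "messages": [{"role": "user", "content": "Hi"}],
                             "temperature": 2.5}))
# ['temperature must be between 0 and 2']
```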

Use Postman or curl to test requests outside your application; this helps isolate issues.

If the issue persists and the OpenAI API developer guide no longer helps:

- Contact official support and include the model name, error code, request body (with the API key removed), time, and time zone.

- Ask questions and share experiences on the Community Forum (avoid posting sensitive data).

- Submit a request to the OpenAI Support Center and check the docs.

Future of OpenAI API and Ongoing Updates

OpenAI continues to evolve its API. In 2025, it released new models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. These are faster, cheaper, and better at handling long texts and programming tasks. The Responses API now includes background processing for complex tasks, auto-summarization of model reasoning, and the ability to safely reuse reasoning objects without storing data on OpenAI servers. Image generation/editing tools have expanded, and fine-tuning models for business needs has become even more flexible.

What to expect in the near future?

- GPT-5: even more accurate and “intelligent” responses

- Better multimodality: e.g., processing text, images, and video simultaneously

- Lower API costs for broader adoption. P.S.: That’s not a forecast: just a hopeful wish!

To stay current and make the most of the OpenAI API, keep up with updates and announcements — follow our blog and stay in the loop!