Once upon a time, software testing was like detective work: an engineer manually searched for every clue that could lead them to the culprit - bugs in the code. It was a slow but necessary process.

Then came automation: tools like Selenium and JUnit allowed engineers to write test scripts that ran automatically. However, even automated testing required manual test creation, updates, and result analysis.

Eventually, we realized that traditional QA tools were no longer keeping up with the growing scale and complexity of modern digital products. The next necessary step in the evolution of testing processes was AI testing.

What is AI Testing?

AI in software testing refers to the use of machine learning, big data, and analytics to create, execute, and improve tests without constant human intervention.

In other words, artificial intelligence does more than just follow commands—it learns and adapts. AI testing tools analyze data, predict vulnerabilities, and independently create test scenarios. They can process unstructured data (such as logs or user actions) and detect patterns that humans or regular scripts might overlook.

This approach has revolutionized the QA industry, allowing teams to focus on strategy rather than endless bug hunting.

How We Use AI to Transform Software Testing

At Wezom, we’ve been closely following the evolution of AI and DevOps services in software testing. And let’s be honest: AI-based testing is no longer just a buzzword. AI is making a real impact, changing how we create, execute, and manage test cases. Through our experience integrating AI testing tools like AIO Tests and leveraging OpenAI’s API, we’ve seen firsthand how AI enhances the QA process. Let’s dive into the practical applications, challenges, and best practices we’ve discovered along the way.

Key Takeaways: What We’ve Learned from AI in QA

Through hands-on experience, we’ve identified some critical insights about Artificial Intelligence in software testing:

- AI-driven test case generation reduces manual effort and speeds up test planning.

- Smart automation tools improve test coverage, finding issues that manual testers might miss.

- AI for QA testing works best when combined with human oversight – it’s a powerful assistant, not a replacement.

- The right integration can streamline workflows without adding unnecessary complexity.

Now, let’s explore how AI fits into different aspects of software testing and how you can apply it in your QA strategy.

How AI Improves Testing Processes

Software testing using AI is like replacing a stone axe with a chainsaw. There are numerous advantages, unique features, and implicit benefits. Let's take a closer look at each aspect.

Boosting Efficiency and Productivity with AI

One of the biggest challenges in QA is the time-consuming nature of manual test case creation. Traditionally, testers spend hours writing detailed test scenarios, reviewing requirements, and mapping out edge cases.

When we integrated AIO Tests in Jira, we immediately saw a shift. The AI-powered engine analyzes user stories, extracts key details, and automatically generates structured test cases. The benefits were clear:

✔️ Test case creation time reduced by 50% – from hours to minutes.

✔️ More comprehensive coverage – AI identifies missing test scenarios.

✔️ Less human error – AI ensures consistency across test cases.

Of course, we still review and fine-tune the AI-generated tests. The key is finding the right balance between automation and manual QA expertise.

Additionally, a well-chosen AI testing framework can "read" and analyze user stories, technical requirements, and documentation to automatically create test scenarios. This is especially useful in Agile environments, where requirements often change.

Optimizing Test Coverage and Reducing Gaps in Testing

The second key challenge that AI software testing addresses effectively is ensuring full test coverage. This is particularly important in complex systems with many scenarios. Often, traditional approaches leave gaps, leading to critical bugs being discovered only in production.

After implementing AI for testing, significant improvements can be seen:

✔️ Up to 40% more coverage – Artificial Intelligence in testing can analyze the codebase and automatically suggest tests for missed scenarios.

✔️ Optimizing regression testing – AI determines which tests need to be rerun and which are no longer relevant.

✔️ Minimizing redundant processes – redundant checks are eliminated, reducing the time required to execute tests.
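To make the regression point concrete, here is a minimal sketch of test selection. It is not how any particular AI tool works internally: it swaps the learned model for a plain rule (rerun a test only if it touches a changed file), and the `coverage_map` data, test names, and file names are all hypothetical.

```python
from typing import Dict, List, Set


def select_tests(coverage_map: Dict[str, Set[str]], changed_files: Set[str]) -> List[str]:
    """Return the tests whose covered files overlap the changed files."""
    selected = [
        test_name
        for test_name, covered_files in coverage_map.items()
        if covered_files & changed_files  # non-empty intersection means the test is affected
    ]
    return sorted(selected)


# Hypothetical data: which source files each test exercises.
coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}

changed = {"auth.py"}
print(select_tests(coverage_map, changed))  # ['test_login', 'test_profile']
```

An AI-assisted tool would typically go further, for example by ranking the selected tests by historical failure likelihood, but the basic idea of skipping tests unrelated to a change is the same.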

AI in QA testing can, in many cases, take on work that would otherwise need an experienced analyst: the system independently finds hidden risks and helps the team focus on the most important issues.

Minimizing False Alarms and Accelerating Results Analysis

AI testing automation eliminates the shortcomings of "old-generation methods." One of the most annoying problems was the huge number of false alarms (when the system reports an error that doesn’t actually exist).

With Artificial Intelligence in test automation, it’s possible to reduce the number of false alarms by up to 60%. The algorithm learns from the history of tests and filters out unstable checks.
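As a rough illustration of learning from test history, the sketch below flags unstable checks with a simple heuristic rather than a trained model: it measures how often a test's outcome flips between consecutive runs. The `histories` data and the `0.3` threshold are assumptions chosen for the example.

```python
from typing import Dict, List


def flip_rate(history: List[bool]) -> float:
    """Fraction of consecutive runs where the outcome changed (pass <-> fail)."""
    if len(history) < 2:
        return 0.0
    flips = sum(1 for prev, curr in zip(history, history[1:]) if prev != curr)
    return flips / (len(history) - 1)


def find_flaky_tests(histories: Dict[str, List[bool]], threshold: float = 0.3) -> List[str]:
    """Return tests whose flip rate meets or exceeds the threshold."""
    return sorted(name for name, runs in histories.items() if flip_rate(runs) >= threshold)


# Hypothetical run history: True = passed, False = failed.
histories = {
    "test_search": [True, True, True, True, True, True],        # stable pass
    "test_upload": [True, False, True, True, False, True],      # keeps flipping
    "test_export": [False, False, False, False, False, False],  # consistent failure, likely a real bug
}
print(find_flaky_tests(histories))  # ['test_upload']
```

Note that a test which fails every time is not treated as a false alarm here: a consistent failure is more likely to be a real defect than an unstable check.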

Moreover, automatic bug prioritization becomes available – the system understands which errors are critical and which can wait.

Because AI QA tools analyze logs, code, and even past fixes to suggest possible causes of issues, fault diagnosis is now faster. At the very least, developers no longer waste time determining whether something is a bug and can immediately begin fixing real issues.

Security and Compliance: AI as a Safety Net

AI-generated test cases don’t just increase efficiency – they enhance test accuracy and help meet compliance requirements. In industries like healthcare, fintech, and e-commerce, security is a top priority.

Using AI in software testing, we:

✔️ Automatically identify security vulnerabilities through intelligent pattern detection.

✔️ Support compliance with standards and regulations such as ISO 9001 and GDPR, following ISTQB testing practices.

✔️ Reduce the risk of human oversight in critical workflows.

This means fewer missed test cases and a higher level of confidence in our testing strategy.

Powerful Predictive Analysis, Failure Forecasting, and Boundary Case Detection

The usual testing process is simple: you find a bug first, then fix it. But what if you could detect potential failures before they become problems?

AI-based test automation tools can be used even at the code writing stage: the system analyzes commits and finds patterns that led to failures in the past.

Additionally, trained algorithms can identify unstable modules, i.e., assess the risk level in different parts of the project (and based on this, determine which areas require more attention).
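The idea of scoring risk per module can be sketched without any machine learning at all. The example below is a simplified stand-in, assuming a commit history is available as (message, touched modules) pairs: it treats the share of bug-fix commits per module as a risk score. The keyword list and the sample commits are hypothetical, and the keyword match is deliberately naive.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Naive assumption: a commit is a bug fix if its message contains one of these words.
FIX_KEYWORDS = ("fix", "bug", "hotfix")


def module_risk(commits: List[Tuple[str, List[str]]]) -> Dict[str, float]:
    """Share of each module's commits whose message looks like a bug fix."""
    total = Counter()
    fixes = Counter()
    for message, modules in commits:
        is_fix = any(word in message.lower() for word in FIX_KEYWORDS)
        for module in set(modules):  # count each module once per commit
            total[module] += 1
            if is_fix:
                fixes[module] += 1
    return {module: fixes[module] / total[module] for module in total}


# Hypothetical commit history.
commits = [
    ("Fix null pointer in payment retry", ["payment"]),
    ("Add coupon field", ["cart"]),
    ("Hotfix: payment timeout", ["payment", "cart"]),
    ("Refactor cart totals", ["cart"]),
    ("Add refund endpoint", ["payment"]),
]

risk = module_risk(commits)
for module, score in sorted(risk.items(), key=lambda item: item[1], reverse=True):
    print(f"{module}: {score:.2f}")
```

Modules with a higher score have historically needed more fixes, so they are reasonable candidates for extra test attention. A trained model would add more signals, such as code churn or author experience.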

By our estimates, AI-driven testing allows us to reduce the number of bugs in production by at least 30% through predictive analysis. This lets the QA team act proactively rather than reactively.

A New Approach to Quality Assurance

There are several other areas where AI testing shows strong results. One of these is improving collaboration between teams: analytics in an understandable format enhances communication between developers, testers, and managers.

AI in test automation helps reduce costs for repetitive tasks. Fewer resources are spent on creating and maintaining tests, which leads to fewer errors and lower costs for fixing them. As a result, teams can release products faster, increasing profitability.

"Don’t forget about adaptability to change. AI-driven test automation allows for quick adaptation to changes in requirements or code, which is especially important in Agile and DevOps environments. As a result, tests are automatically updated when functionality changes. This gives us the ability to quickly test new features without delays. Teams can react faster to user feedback."

© Lead QA Engineer, Wezom

These benefits demonstrate how AI testing software not only improves testing processes but also transforms the approach to quality assurance, making it more intelligent, faster, and more reliable.

Best Practices for Integrating AI into Your Testing Strategy

Before diving into the vast pool of AI automation testing tools - purchasing, installing, and configuring all available options - it’s crucial to define the key objectives. Three basic questions can help guide you:

- Where does testing consume the most time?

- What types of bugs most frequently slip past testers?

- Which processes can be automated without losing control?

For example, our goal was to gain the following capabilities:

- Code analysis and predicting potential defects before testing.

- Test case generation and automatic coverage of new features.

- Optimizing regression testing to avoid running unnecessary tests.

- Quick analysis of test results and identification of false alarms.

How We Use OpenAI’s API for Smarter Test Cases

At Wezom, we don’t just rely on built-in AI features – we take it a step further. By integrating OpenAI’s API into our testing framework, we’ve enhanced our ability to:

- Analyze user stories and extract key functional requirements.

- Generate test case steps based on real-world user behaviors.

- Predict potential failure points by learning from past defect patterns.

The result? Smarter, context-aware test cases that align closely with business goals and user expectations.
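For readers who want to try something similar, here is a minimal sketch of generating test cases from a user story with the OpenAI Python SDK. It is an illustration under stated assumptions, not our production integration: the prompt wording, the JSON shape (`title`, `steps`, `expected`), the helper names, and the default model name are all hypothetical choices.

```python
import json
from typing import Dict, List


def build_messages(user_story: str) -> List[Dict[str, str]]:
    """Build a chat prompt asking for test cases as a JSON array."""
    system = (
        "You are a QA engineer. Return only a JSON array of test cases. "
        'Each test case is an object with "title", "steps" (list of strings), '
        'and "expected" (string).'
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"User story:\n{user_story}"},
    ]


def parse_test_cases(raw: str) -> List[dict]:
    """Parse the model reply and keep only well-formed test cases."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    required = {"title", "steps", "expected"}
    return [case for case in data if isinstance(case, dict) and required <= case.keys()]


def generate_test_cases(user_story: str, model: str = "gpt-4o-mini") -> List[dict]:
    """Call the API. Requires the `openai` package and an OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so the helpers above work offline

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,  # assumed model name; use whichever model your account offers
        messages=build_messages(user_story),
    )
    return parse_test_cases(response.choices[0].message.content or "")


# Offline demo of the parsing step with a hypothetical model reply.
sample_reply = json.dumps([
    {
        "title": "Login with valid credentials",
        "steps": ["Open the login page", "Submit a valid email and password"],
        "expected": "The dashboard is shown",
    },
    {"title": "Incomplete case without steps"},
])
print(len(parse_test_cases(sample_reply)))  # 1
```

Validating the reply before using it matters: a model can return malformed or incomplete output, so the sketch discards anything that does not match the expected shape and leaves the final review to a QA engineer.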

How to Choose the Right AI Testing Tools?

The market offers dozens of AI test automation solutions, but not all of them are equally useful. It's important to consider the scale of the project, the technology stack, and the testing goals.

To start, we recommend evaluating the tool’s functionality. Specifically, consider whether it supports the types of testing you need (functional, regression, load, security testing, etc.) and how well it scales.

The tool should also be user-friendly for your team. Pay attention to:

- Interface: Is it intuitive? Does it require extensive training?

- Documentation and Support: Are there detailed guides, video tutorials, and responsive support?

- Flexibility: Can the tool be customized to suit the specific needs of your project?


Tip! The key isn’t the tool itself, but how it’s adapted to business processes. If the chosen solution doesn’t integrate with CI/CD or requires complex setup, your team simply won’t use it. Choose a solution that not only addresses your current needs but also helps your team grow and adapt to future challenges.


If you need test case generation, Applitools, Test.ai, or Functionize are good choices.

For bug prediction, Mabl or Diffblue Analyze will be more suitable.

For automated log analysis and anomaly detection, consider Selenium AI or Testim.

For visual testing and UX control, Applitools Eyes is the right tool.

Team Training: The Key to Effectively Using Automation Tools for QA

Any technology operates at its full potential only when people understand how to use it. One common mistake companies make is implementing AI for software testing without proper team training.

For AI testing to deliver real benefits, you need to:

✔️ Train QA engineers to work with algorithms (analyze AI-generated suggestions, adjust automatically generated tests).

✔️ Teach developers to consider reports during code reviews.

✔️ Create guidelines for using tools to lower the entry threshold for new team members.

Top companies conduct workshops, test sprints, and demos so that the team can see the real benefits of AI in QA in action.

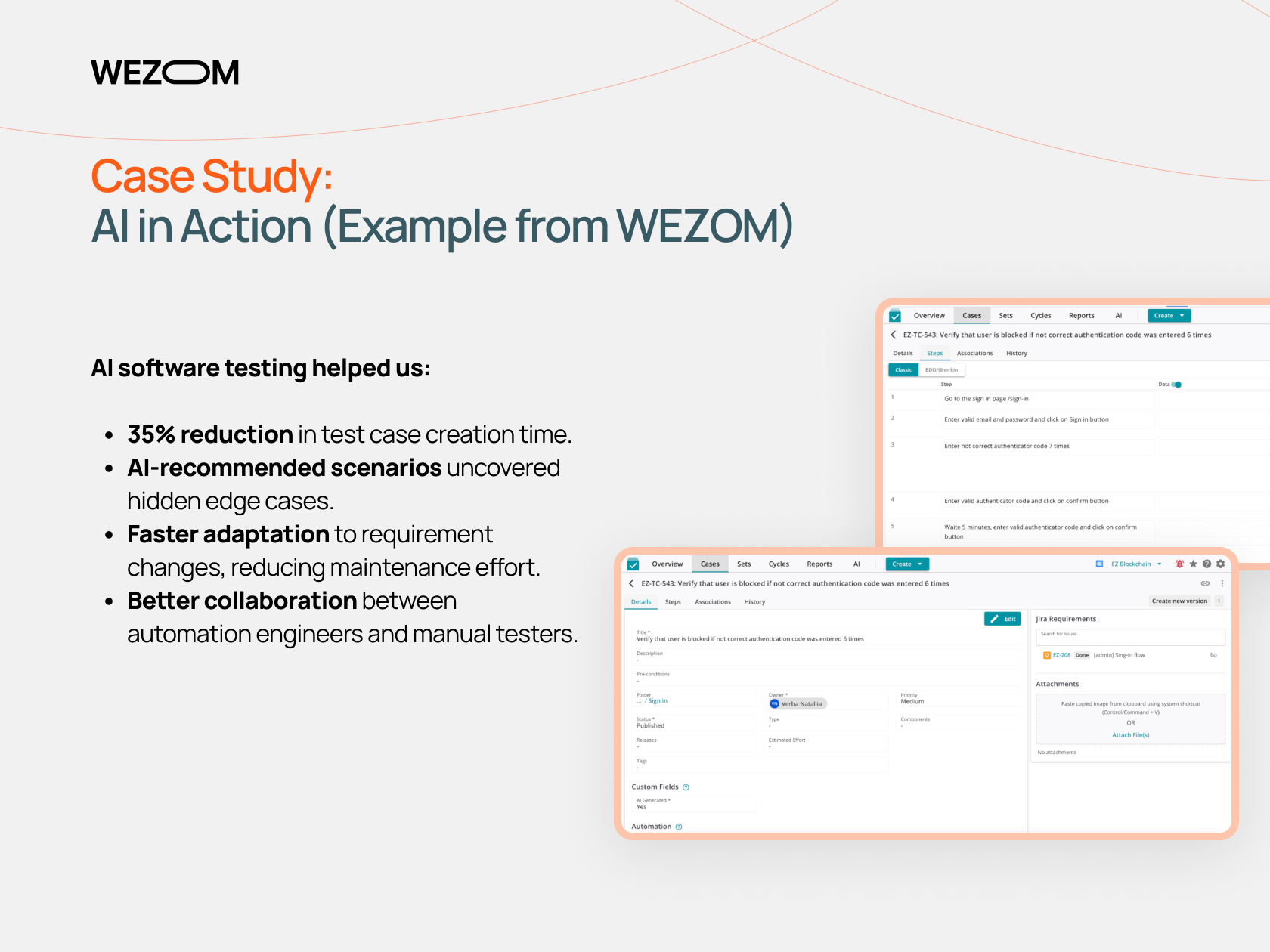

Case Study: AI in Action (Example from WEZOM)

Project: AI-Powered Web Platform Testing

Task: Our goal was to accelerate the testing of a large web platform, improve the accuracy of test cases, and reduce maintenance costs.

Manual testing was taking too much time, and automation required constant updates due to frequent requirement changes. We needed a solution that would not only automate the process but also make it more intelligent.

Solution: We integrated AIO Tests – an AI-based solution capable of analyzing requirements, automatically generating test cases, and identifying weak spots in test coverage.

With AI software testing, we achieved:

- A 35% reduction in test case creation time.

- Discovery of hidden edge cases through AI-recommended scenarios.

- Faster adaptation to requirement changes, reducing maintenance effort.

- Better collaboration between automation engineers and manual testers.

Results

- Less routine, more efficiency – testers focused on bug analysis, not test case creation.

- Reduced maintenance time – tests didn’t "break" after updates because AI systems automatically adapted them to changes.

- Higher product quality – thanks to smart scenario generation, we added about 20% more test cases, covering scenarios we hadn’t considered before.

Addressing Common Misconceptions About AI in Testing

According to Capgemini, 85% of software developers will switch to AI testing tools within the next two years. Even so, many myths surround this technology and hinder its full adoption. Let's address the most common misconceptions.

AI Augments, Not Replaces, Test Engineers

A common misconception we’ve encountered is the fear that AI will replace test engineers. The reality? AI is a tool, not a substitute for human expertise.

Here’s how we use AI to enhance rather than replace our QA teams:

✔️ AI handles repetitive tasks like test case generation and maintenance.

✔️ Testers focus on exploratory testing, user experience validation, and risk assessment.

✔️ AI and human testers work together to improve test coverage and accuracy.

By adopting this approach, we’ve increased our team’s productivity without reducing the value of human insight in testing.

Is implementing artificial intelligence difficult and expensive?

Previously, developing and configuring AI solutions required significant resources. Today, many tools (such as Testim, Applitools, Mabl) work "out of the box" and integrate easily into CI/CD pipelines. You can start with simple features (like automatic test case generation, log analysis) and gradually expand their usage.

Moreover, research shows that the implementation of smart testing quickly pays off through reduced regression time and improved product quality.

Algorithms Can Make Mistakes, but Less Often Than Humans

The reality is that artificial intelligence reduces the number of errors but still requires oversight.

Yes, algorithms can produce false alarms or suggest inappropriate test cases. But compared to human errors, AI offers much greater accuracy and test coverage. The key is to properly configure the tools and verify their performance, rather than relying on them blindly.

Working with AI Testing Tools Doesn’t Require Being a Data Scientist

Many people believe that working with AI in testing requires deep knowledge of machine learning. However, new AI solutions are designed for regular QA teams and have intuitive interfaces. Our experience shows that training to use such tools takes just a few days, not months.

FAQs: AI Testing Questions Answered

How is AI applied in automated testing?

AI analyzes user story text, generates structured test cases, and predicts potential failure points. It can also optimize test execution, prioritize scenarios, and suggest missing test coverage.

Can AI replace QA engineers?

No. AI enhances test automation but does not eliminate the need for human oversight. Engineers are still essential for test validation, exploratory testing, and complex scenario handling.

AI is excellent at handling routine tasks: regression testing, log analysis, and bug prediction. However, there are aspects that require manual verification: exploratory testing, user experience testing, and handling non-standard scenarios.

Even in the most advanced projects, AI testing tools work in tandem with QA engineers, helping to optimize testing but not replacing them completely.

How can we integrate AI-powered testing tools into our workflow?

- Identify areas where AI can reduce manual effort (e.g., test case creation, bug prediction).

- Choose AI-enhanced tools like AIO Tests, Applitools, or Functionize.

- Train QA teams to leverage AI effectively while maintaining quality control.

Is AI testing always necessary for software?

Not always. Sometimes manual testing is indispensable, for example when evaluating how user-friendly and intuitive an application is. AI cannot fully understand human emotions and preferences.

Artificial intelligence might miss visual defects, such as improper element placement, color errors, or font issues. Manual testing is more reliable in these cases.

What are the advantages of AI testing?

- Speed: Automation speeds up test execution.

- Accuracy: AI reduces human errors.

- Resource savings: Cuts costs on routine tasks.

- Proactivity: Predicts issues before they arise.

- Scalability: Suitable for large projects and teams.

What are the limitations of AI testing?

- Algorithms require high-quality data for training.

- Tool implementation can be labor-intensive.

- Some technologies may be extremely expensive.

Final Thoughts: AI is Here to Stay

AI is no longer a futuristic concept in QA – it’s already transforming how we approach software testing. From smart test case generation to predictive analysis, AI-powered tools help teams work faster, smarter, and more efficiently.

At Wezom, we’re embracing AI to improve our QA workflows while ensuring human testers remain at the center of the process. If you’re considering AI for your testing strategy, start small, experiment, and adapt – because AI in QA is only going to get better from here!

💡 Want to explore AI-powered testing solutions? Let’s chat about how AI can optimize your QA strategy! 🚀