According to IDC, the volume of generated data will reach 175 zettabytes by 2025. That's equivalent to every person on Earth continuously streaming video in 4K quality for four months straight.

According to a study by NewVantage Partners, 92% of Fortune 1000 companies are increasing their investments in big data and artificial intelligence. However, here's the paradox: only 24% of them have succeeded in becoming truly data-driven organizations.

Why does this happen? Because collecting data is one thing, but turning it into real business results is something entirely different. It's like having the most advanced navigation system but not knowing how to use it—you can still lose your way.

In this article, we will explore how to build an effective big data strategy, avoid common mistakes, and transform the data deluge into a source of competitive advantage. Whether you're managing a small startup or an international corporation, understanding these principles could be the decisive factor in the race for market leadership.

Understanding the Core of Big Data Strategy

Big Data refers to datasets characterized by their volume, variety, and high velocity of generation. However, the sheer amount of data is meaningless if it’s not put to use.

Imagine a massive Amazon warehouse. Each box represents a piece of information. Every minute, transformations occur: one box is taken for delivery, another replaces it with a new product. A shipment arrives. A bulk order needs to be processed. It’s an overwhelming amount of data, far too much to manage with traditional methods.

Millions of data points without a system are just useless noise. That’s why we need a strategy to structure the information and uncover valuable insights within it.

Big Data finds applications in nearly every aspect of our lives: from medicine to finance, from the entertainment industry to decision-making in public policy.

Objectives of Big Data Strategy Consulting Services

According to a Gartner report, companies that implement Big Data strategies adapt to market changes 23% faster than their competitors. But this is far from the only reason why every company should consider adopting a Big Data strategy.

- Improvement of data-driven decision making through more accurate forecasting.

- Reduction of production, operational, and financial risks. For example, General Electric uses sensor data to predict equipment failures, which reduced unplanned downtime by 45% and saved $50 million annually.

- Ability to respond more quickly to market changes and adapt to new conditions. For example, implementing dynamic pricing or adjusting a content strategy.

- Automation of processes. Example from logistics: DHL uses Big Data to optimize delivery routes, reducing fuel costs by 15% and delivery times by 25%.

- Cost reduction and efficiency improvement. Example from agriculture: John Deere uses IoT sensors and Big Data for precision farming, which increases yields by 30% while reducing fertilizer usage by 20%.

- Improvement of customer experience: personalization of products and services, predictive maintenance, and enhanced quality of support. Example from the automotive industry: Tesla uses telemetry data to warn owners about necessary maintenance before issues arise, reducing unplanned repairs by 35%.

- Creation of new revenue streams: entering new markets and monetizing data. Example from media: Bloomberg monetizes financial data through an API, generating more than $10 billion in annual revenue.

- Acceleration of innovation and optimization of Research and Development (R&D). Example from consumer goods: P&G analyzes social media data to identify trends and develop new products, increasing the success rate of launches by 25%.

According to a Deloitte study, 49% of companies state that business intelligence with Big Data helps them gain a competitive advantage. Big Data offers truly countless opportunities, but without professional assistance, its potential may remain unrealized.

Crucial Elements in Building a Robust Big Data Strategy

A data strategy is a clearly structured plan that allows a business to collect, integrate, analyze, and use data to achieve specific goals: for example, predicting demand, optimizing inventory, personalizing advertising campaigns, detecting fraudulent activity, assessing risks, and developing new products that the market needs. Let’s take a detailed look, with practical recommendations, at the key components of a Big Data strategy.

Optimizing Big Data Collection and Integration

A modern organization has numerous data sources:

- CRM systems

- Social media

- Call center conversation recordings

- IoT devices

- Transactional systems

- Various databases

- External sources, etc.

As a result, there are two main questions we face: "How can we automate and simplify this process?" and "How can we standardize all the data we receive?"

Each of these types of data requires a specific approach to collection, storage, and processing. For example: semi-structured data is often handled using NoSQL solutions. Real-time data needs to be processed with minimal latency. Geospatial data requires specialized spatial databases, and so on.

Optimization begins with an audit. You need to inventory the sources, assess their quality (relevance and consistency), and prioritize them. Identify the critical data for the business, assess the integration cost of each source, and create an integration roadmap.

When it comes to technical recommendations, our best Big Data consultants advise starting by creating templates for new sources and developing validation protocols. Then, set up automation for data collection: use APIs instead of manual exports, implement data collection monitoring systems, and establish automatic notifications for issues.

For performance optimization: use incremental loading, implement parallel processing, and optimize queries to sources.
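To make incremental loading and parallel processing more concrete, here is a minimal sketch. The in-memory `SOURCE_ROWS` list, the `updated_at` field, and the function names are illustrative assumptions; in a real pipeline the rows would come from an API or a database query, and the watermark would be persisted between runs.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime

# Hypothetical source rows; a real source would be an API or a database table.
SOURCE_ROWS = [
    {"id": 1, "updated_at": datetime(2024, 1, 1), "amount": 10.0},
    {"id": 2, "updated_at": datetime(2024, 1, 5), "amount": 25.5},
    {"id": 3, "updated_at": datetime(2024, 1, 9), "amount": 7.25},
]


def fetch_since(rows, watermark):
    """Incremental loading: return only rows changed after the last load."""
    return [r for r in rows if r["updated_at"] > watermark]


def transform(row):
    """Per-row transformation; rows are independent, so they can run in parallel."""
    return {"id": row["id"], "amount_cents": round(row["amount"] * 100)}


def incremental_load(rows, watermark, workers=4):
    new_rows = fetch_since(rows, watermark)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        loaded = list(pool.map(transform, new_rows))  # map preserves input order
    # Advance the watermark only as far as the data that was actually loaded.
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return loaded, new_watermark


loaded, watermark = incremental_load(SOURCE_ROWS, datetime(2024, 1, 3))
print(loaded, watermark)
```

Running the load a second time with the returned watermark picks up nothing new, which is the point of the technique: each run touches only the rows that changed since the previous one.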

Tip! Choose a scalable microservice architecture. Form a team of data specialists (define roles and levels of responsibility). Monitor success metrics: reduce report preparation time, improve the quality of analytics, and measure ROI from data projects.

Ensuring Data Governance and Security in Big Data Strategies

As the volume of data increases, so do the risks: leaks, theft, or improper use. Data management includes:

- Setting up access controls

- Encryption

- Compliance with legal standards, such as GDPR or CCPA

By confidentiality level, data is divided into public, internal, confidential, and strictly confidential categories. It is crucial to allocate roles within teams appropriately, so that each participant receives the level of access they need and no more; for example, most employees should not be able to reach databases holding sensitive information intended for senior management.
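The classification levels and role-based access described above could be sketched roughly as follows. The role names and the clearance assigned to each role are hypothetical and would differ in every organization.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """The four confidentiality levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    STRICTLY_CONFIDENTIAL = 3


# Hypothetical mapping of roles to the highest level each role may read.
ROLE_CLEARANCE = {
    "contractor": Sensitivity.PUBLIC,
    "analyst": Sensitivity.INTERNAL,
    "data_engineer": Sensitivity.CONFIDENTIAL,
    "executive": Sensitivity.STRICTLY_CONFIDENTIAL,
}


def can_access(role, dataset_level):
    """Allow access only if the role's clearance covers the dataset's level."""
    clearance = ROLE_CLEARANCE.get(role)
    # Unknown roles are denied by default.
    return clearance is not None and clearance >= dataset_level


print(can_access("analyst", Sensitivity.INTERNAL))      # True
print(can_access("analyst", Sensitivity.CONFIDENTIAL))  # False
```

Denying unknown roles by default is a deliberate choice in this sketch: a missing entry in the mapping should never translate into access.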

From a technical standpoint, it is essential to encrypt data both at rest and in transit. For example, using TLS 1.3 for network communications, end-to-end encryption for critical data, and AES-256 for stored data provides robust protection across the entire data lifecycle.
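As an illustration of the in-transit part, the sketch below configures a client-side TLS context that refuses anything older than TLS 1.3, using Python's standard `ssl` module. The function name is our own; encryption at rest with AES-256 is not shown here because the standard library does not ship an AES cipher, so it would require a third-party cryptography library.

```python
import ssl


def make_tls13_client_context():
    """Build a client context that verifies certificates and requires TLS 1.3."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


ctx = make_tls13_client_context()
print(ctx.minimum_version)
```

A context like this can be passed to standard networking calls that accept an `ssl.SSLContext`, so the minimum protocol version is enforced in one place.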

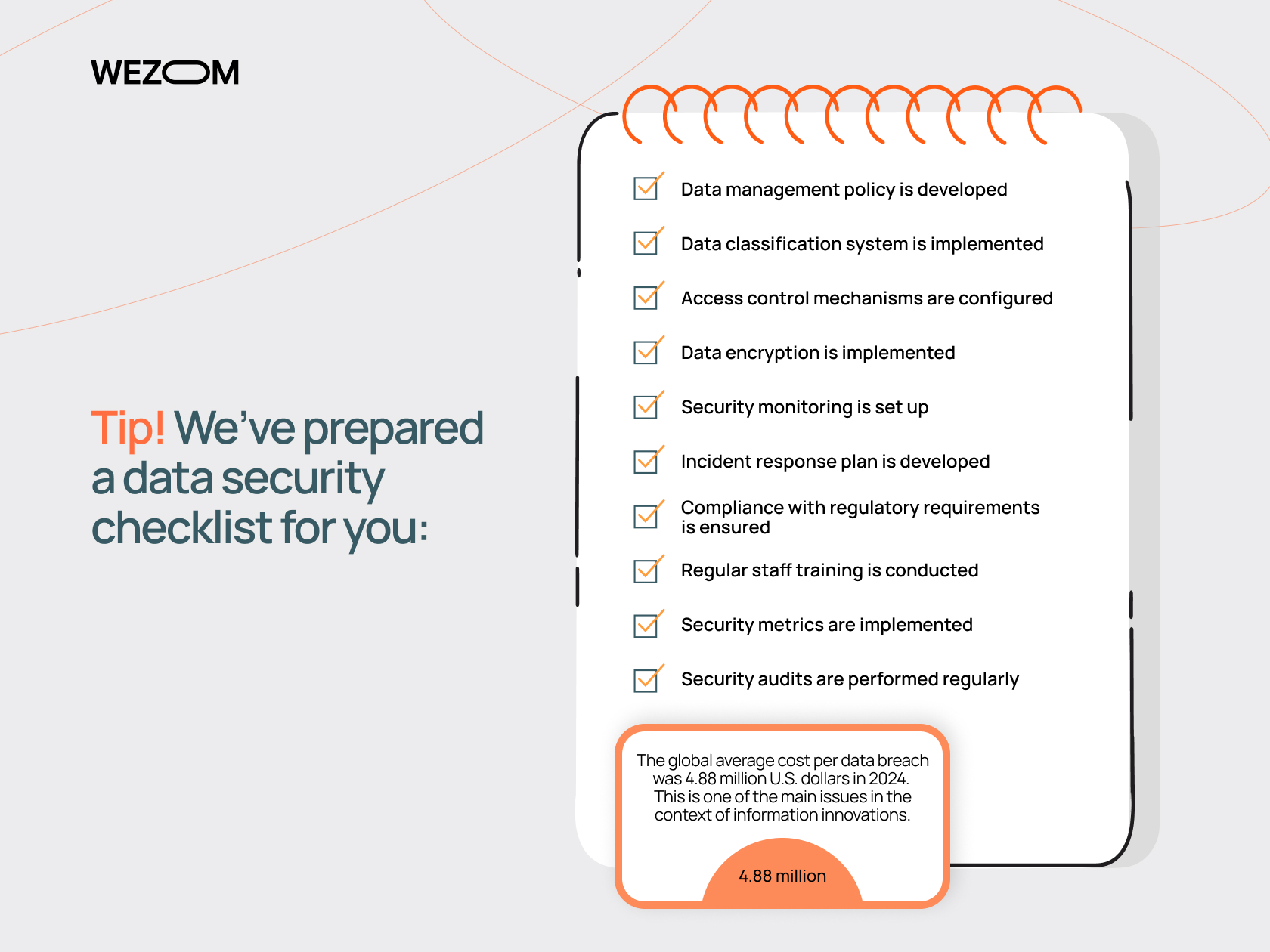

Tip! We’ve prepared a data security checklist for you:

✓ Data management policy is developed

✓ Data classification system is implemented

✓ Access control mechanisms are configured

✓ Data encryption is implemented

✓ Security monitoring is set up

✓ Incident response plan is developed

✓ Compliance with regulatory requirements is ensured

✓ Regular staff training is conducted

✓ Security metrics are implemented

✓ Security audits are performed regularly

The global average cost of a data breach was 4.88 million U.S. dollars in 2024, which makes security one of the main concerns in any data initiative.

Leveraging Big Data Analytics for Actionable Insights

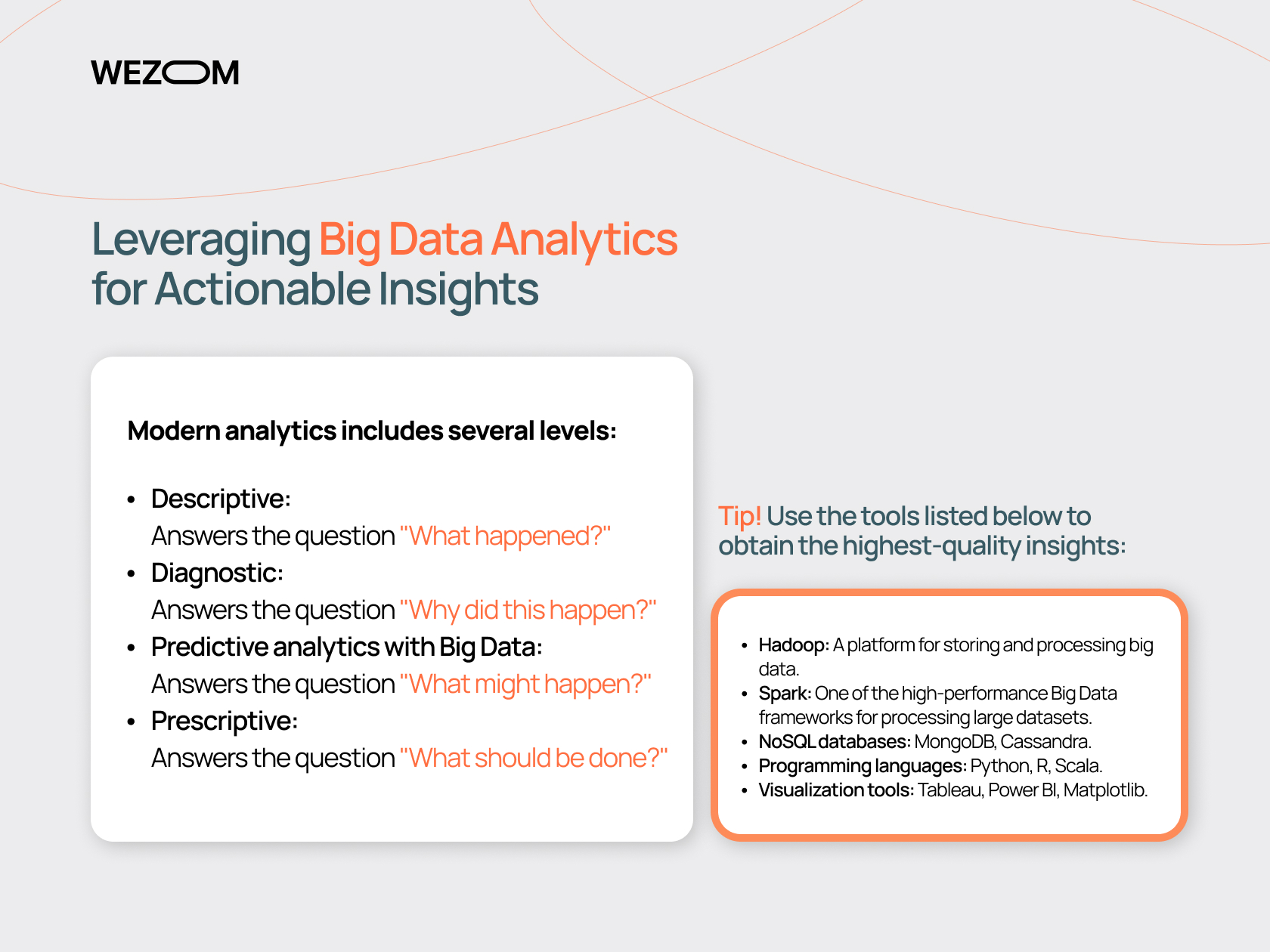

Analytics is the process of transforming raw data into actionable insights. It's like a distillation process: from a large volume of raw material, a concentrated product is obtained that contains the most important information.

Modern analytics includes several levels:

- Descriptive: Answers the question "What happened?"

- Diagnostic: Answers the question "Why did this happen?"

- Predictive: Answers the question "What might happen?"

- Prescriptive: Answers the question "What should be done?"
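To show how the four levels build on each other, here is a toy sketch on invented monthly sales figures. The numbers, the promotion flag, and the decision rule are all assumptions made for illustration; real projects would use far richer data and models.

```python
from statistics import mean

# Hypothetical monthly data: units sold and whether a promotion ran that month.
sales = [100, 120, 90, 150, 160, 170]
promo = [False, True, False, True, True, True]

# Descriptive: what happened?
average_sales = mean(sales)

# Diagnostic: why did it happen? Compare months with and without a promotion.
with_promo = mean(s for s, p in zip(sales, promo) if p)
without_promo = mean(s for s, p in zip(sales, promo) if not p)
promo_lift = with_promo - without_promo

# Predictive: what might happen? Fit a least-squares trend line by hand.
n = len(sales)
xs = range(n)
x_bar, y_bar = mean(xs), mean(sales)
slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, sales)) / sum(
    (x - x_bar) ** 2 for x in xs
)
intercept = y_bar - slope * x_bar
forecast_next = intercept + slope * n  # extrapolate one month ahead

# Prescriptive: what should be done? A deliberately simple decision rule.
recommendation = "run promotion" if promo_lift > 0 else "skip promotion"

print(average_sales, promo_lift, round(forecast_next, 1), recommendation)
```

Each step consumes the output of the previous one, which is why the levels are usually adopted in this order.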

Tip! Use the tools listed below to obtain the highest-quality insights:

- Hadoop: A platform for storing and processing big data.

- Spark: One of the high-performance Big Data frameworks for processing large datasets.

- NoSQL databases: MongoDB, Cassandra.

- Programming languages: Python, R, Scala.

- Visualization tools: Tableau, Power BI, Matplotlib.

Building Strong Data Architecture and Infrastructure for Big Data

A well-designed architecture ensures efficient storage, processing, and analysis of large volumes of data, and keeps that data available and secure.

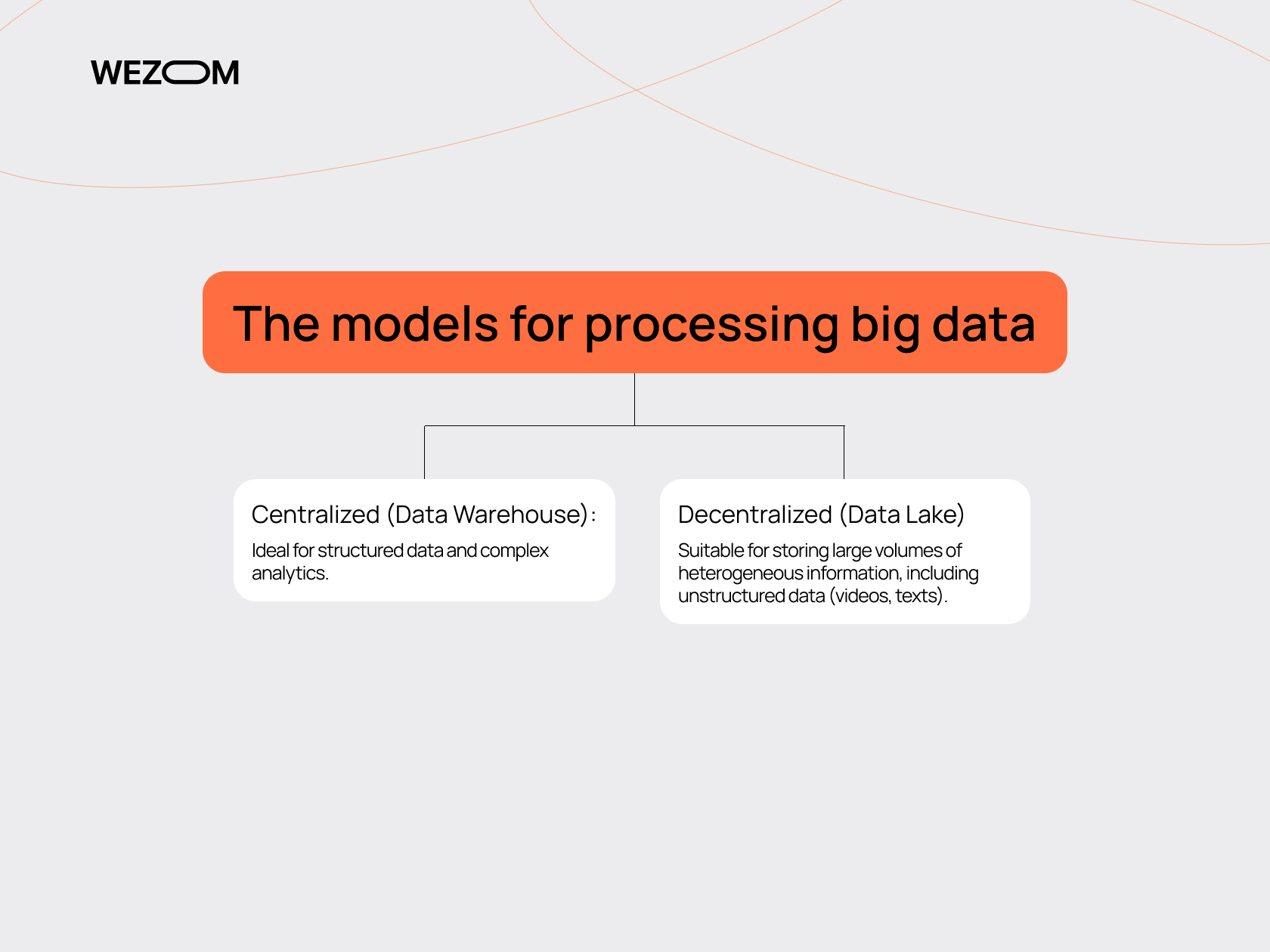

The two most commonly used models for processing big data are:

- Data Warehouse: A centralized store with a predefined schema, ideal for structured data and complex analytics.

- Data Lake: A repository for raw data in its original format, suitable for storing large volumes of heterogeneous information, including unstructured data (videos, texts).

Tip! You don't have to choose just one approach. Many companies use a hybrid strategy, combining both Data Warehouse and Data Lake. This allows for flexible processing of different types of information and adapting the architecture to various business goals.

Additionally, working with big data requires specialized tools. Data storage solutions (such as Apache Hadoop or Google BigQuery), as well as processing, analytics, and security tools, are essential.

For reliable infrastructure operation, you'll need monitoring tools like Prometheus or Nagios to track server performance.

And it's worth repeating how important it is to ensure scalability. Cloud platforms such as AWS, Google Cloud, or Azure can help with this. Containers (for example, Docker) and orchestrators like Kubernetes are also helpful for distributing workloads across servers.

Overcoming Obstacles in Implementing Strategies

Big Data strategy implementation comes with many challenges that can slow down or even derail the project. Let's take a look at the most common obstacles and ways to overcome them.

Managing the Three V’s: Volume, Variety, and Velocity in Big Data

The three V's are the fundamental characteristics of Big Data, and managing them is one of the most challenging obstacles. Start with volume: data accumulates every second and rapidly grows into massive arrays that outstrip the available storage capacity. Furthermore, any increase in volume either leads to higher storage costs or creates difficulties in subsequent processing.

The simplest, most scalable, and cost-effective solution is to use cloud-based Big Data solutions (storage). Additionally, regular optimization is essential: removing duplicates and archiving outdated information.
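The regular optimization mentioned above, removing duplicates and archiving outdated records, might look like this in its simplest form. The record layout (`id`, `updated`) and the one-year retention window are assumptions chosen for the example.

```python
from datetime import date, timedelta


def deduplicate(records, key="id"):
    """Keep only the most recently updated record for each key."""
    latest = {}
    for rec in records:
        current = latest.get(rec[key])
        if current is None or rec["updated"] > current["updated"]:
            latest[rec[key]] = rec
    return list(latest.values())


def split_for_archive(records, today, max_age_days=365):
    """Separate records into those kept in hot storage and those to archive."""
    cutoff = today - timedelta(days=max_age_days)
    active = [r for r in records if r["updated"] >= cutoff]
    archived = [r for r in records if r["updated"] < cutoff]
    return active, archived


records = [
    {"id": "a", "updated": date(2022, 3, 1)},
    {"id": "b", "updated": date(2024, 5, 1)},
    {"id": "b", "updated": date(2024, 6, 1)},  # newer duplicate of "b"
]
unique = deduplicate(records)
active, archived = split_for_archive(unique, today=date(2024, 7, 1))
print(active, archived)
```

Archived records would typically move to cheaper cold storage rather than being deleted, so they remain available for audits or retraining.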

Our solution: distributed storage technologies. Apache Hadoop and other systems distribute data across servers, reducing load and improving performance.

To manage variety, it’s important to configure the various sources of information, select the right Big Data tools and techniques, and automate integration. ETL (Extract, Transform, Load) and data integration tools like Talend or Apache NiFi will assist you with this.

Our solution: modern algorithms for processing unstructured data. For example, NLP (Natural Language Processing) for texts and computer vision for images.

The problem of velocity is characterized by the high cost of processing data streams and the need to keep latency to a minimum. In some cases, there is even a risk of data loss due to errors.

The most obvious solution to this problem is to use streaming platforms that allow real-time data processing. Additionally, set up caching systems. For example, Redis or Memcached speed up access to frequently used data sets.
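The caching idea can be illustrated without any external service. The sketch below is a small in-process cache with a time-to-live, used here only as a stand-in for a system like Redis or Memcached; the class, its method names, and the `slow_report` function are hypothetical.

```python
import time


class TTLCache:
    """A tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}    # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive call
        value = compute()    # cache miss or expired entry
        self._store[key] = (now + self.ttl, value)
        return value


calls = []


def slow_report():
    calls.append(1)  # record each time the expensive path actually runs
    return {"total_orders": 1234}


cache = TTLCache(ttl_seconds=60)
first = cache.get_or_compute("daily_report", slow_report)
second = cache.get_or_compute("daily_report", slow_report)
print(first, len(calls))
```

The second lookup is served from memory, so the expensive function runs only once within the time-to-live window. A shared cache service applies the same pattern across many application servers.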

Our solution: edge computing. This approach processes data on devices close to where it is generated, reducing latency.

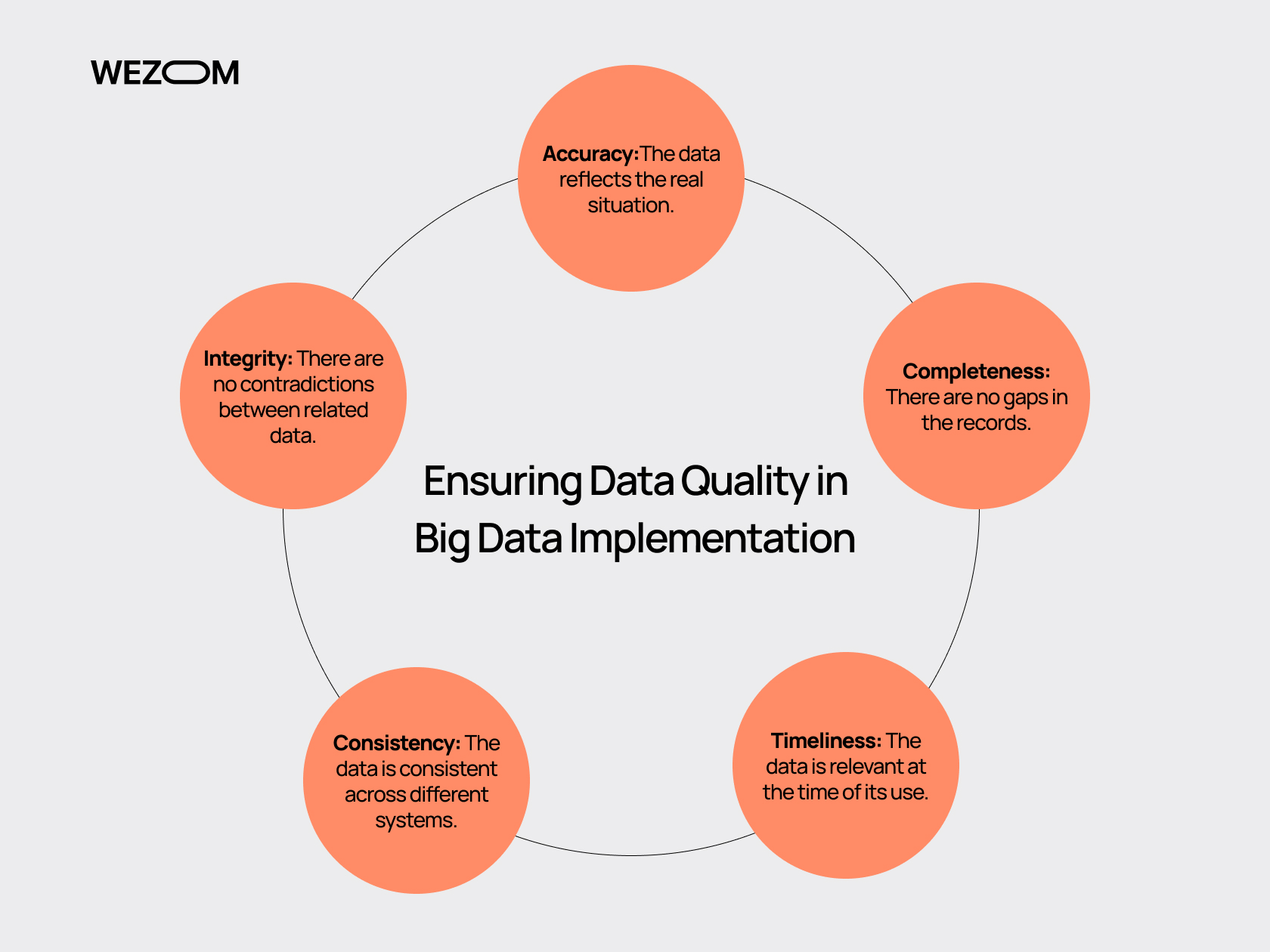

Ensuring Data Quality in Big Data Implementation

There are 5 key characteristics of quality data that you should adhere to:

- Accuracy: The data reflects the real situation.

- Completeness: There are no gaps in the records.

- Timeliness: The data is relevant at the time of its use.

- Consistency: The data is consistent across different systems.

- Integrity: There are no contradictions between related data.

How to achieve this? Through a well-designed Big Data strategy. Start by ensuring data quality at the input level: set up form validation (e.g., email or phone verification). Implement Data Governance (a structure that defines who is responsible for data quality within the company). Create a metadata management system.
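Input-level validation of the kind mentioned above (email or phone verification) can be sketched as follows. The regular expressions are intentionally simple assumptions, not complete validators: they only catch obviously malformed values, and the field names `email` and `phone` are illustrative.

```python
import re

# Deliberately loose patterns: they reject obvious garbage, not every invalid value.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
PHONE_RE = re.compile(r"^\+?\d{7,15}$")


def normalize_phone(raw):
    """Strip common separators so that equivalent numbers compare equal."""
    return re.sub(r"[\s\-().]", "", raw)


def validate_contact(record):
    """Return the names of the fields that failed validation."""
    errors = []
    if not EMAIL_RE.match(record.get("email", "")):
        errors.append("email")
    if not PHONE_RE.match(normalize_phone(record.get("phone", ""))):
        errors.append("phone")
    return errors


print(validate_contact({"email": "john@example.com", "phone": "+1 (555) 010-9999"}))
print(validate_contact({"email": "john@", "phone": "12"}))
```

Rejecting or flagging bad records at the point of entry is usually cheaper than cleaning them up after they have spread to downstream systems.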

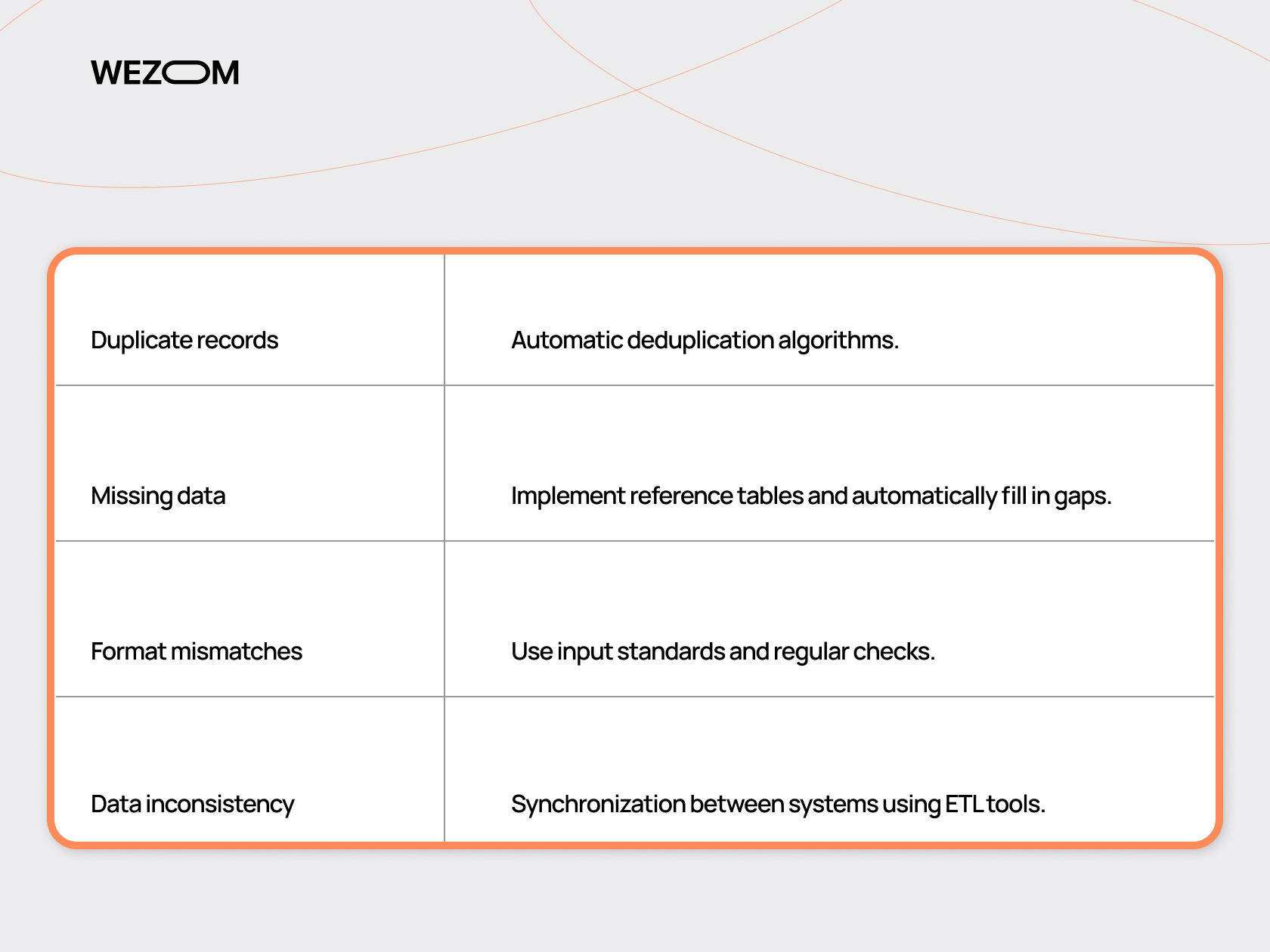

Typical data quality issues and solutions:

Achieving Scalability and Performance in Big Data Strategies

One of the main challenges in this area is the limited computing resources. As data volumes increase, traditional infrastructures start to "struggle" because there is not enough memory, processing power, or disk space.

On the other hand, many companies need real-time data processing (for example, for predictive analytics or for responding to user behavior). This requires high performance, which is difficult to achieve with growing workloads.

Old monolithic systems are often not capable of horizontal scaling (by adding servers), which creates a bottleneck as the business grows. Furthermore, it becomes necessary to integrate new systems and tools, which can create additional complexities.

Our solution: implementing flexible technologies and optimizing processes with modern tools and machine learning.

Navigating Change Management in Big Data Initiatives

Data strategy planning is often accompanied by significant changes in processes, technologies, and corporate culture. These changes can cause employee resistance, challenges in Big Data integration, and a mismatch between business goals and technical capabilities. Change management becomes a key factor for the company's successful transition to working with big data.

Only a systematic approach and involving the team will help your company successfully adapt to new technologies and extract the maximum benefit from big data. It is necessary not only to develop a clear strategy and engage employees but also to ensure transparency in communication and motivate them to embrace the changes. Continuous monitoring and adjustment are recommended.

Our solution: To manage changes in a structured manner, it is essential to apply sound approaches, such as ADKAR (Awareness, Desire, Knowledge, Ability, Reinforcement) or Kotter’s Change Model.

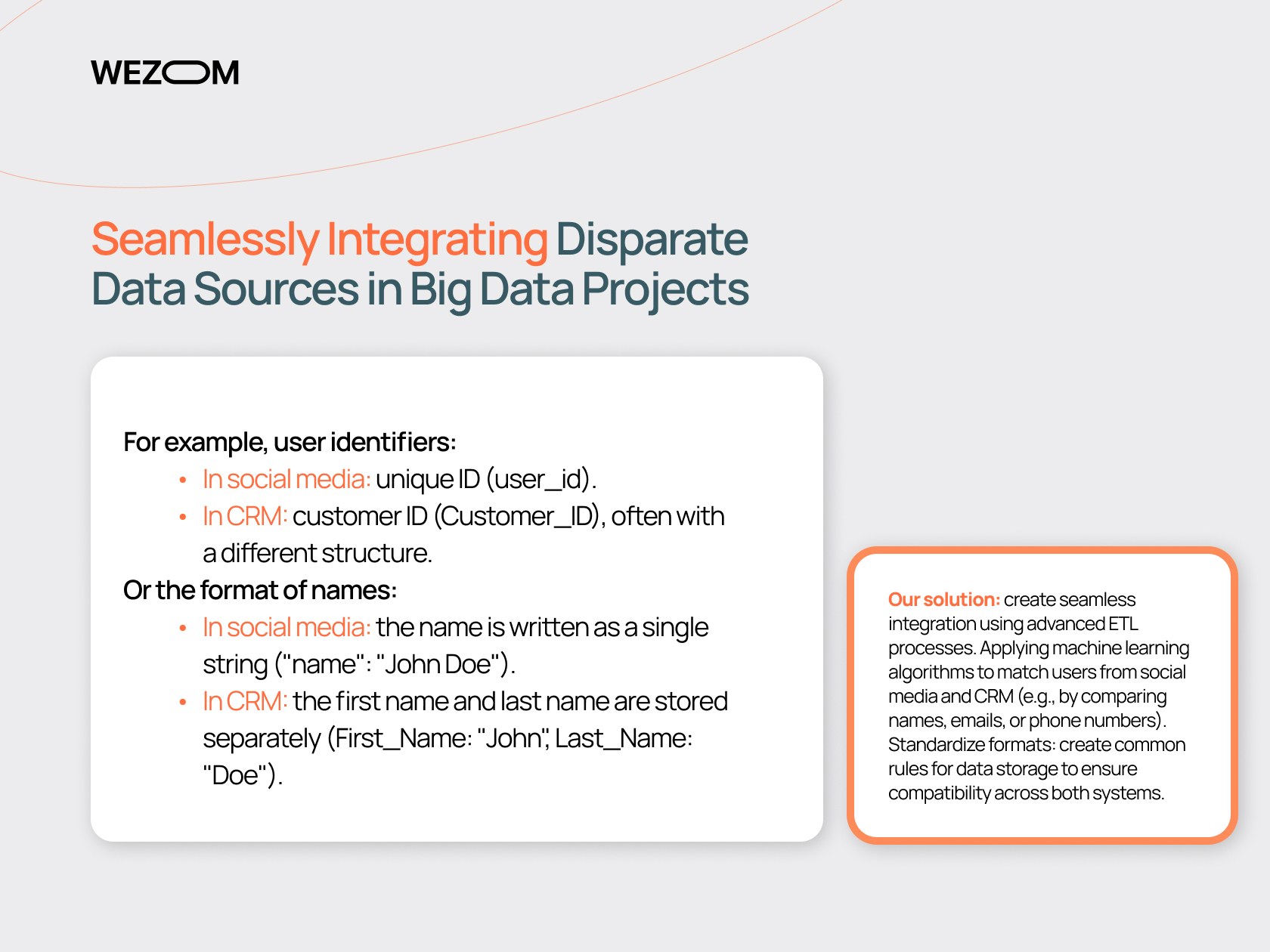

Seamlessly Integrating Disparate Data Sources in Big Data Projects

The problem is that to get a holistic view of the business, all data needs to be unified. Where? How?

Let's take a simple example: information coming from social media often has an unstructured or semi-structured format, while data in CRM systems is usually structured. This can lead to mismatches that require data transformation before integration.

For example, user identifiers:

- In social media: unique ID (user_id).

- In CRM: customer ID (Customer_ID), often with a different structure.

Or the format of names:

- In social media: the name is written as a single string ("name": "John Doe").

- In CRM: the first name and last name are stored separately (First_Name: "John", Last_Name: "Doe").

Our solution: create seamless integration using advanced ETL processes. Apply machine learning algorithms to match users from social media and CRM (e.g., by comparing names, emails, or phone numbers). Standardize formats: create common rules for data storage to ensure compatibility across both systems.
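The standardization and matching steps can be sketched on the example records above. Note that this sketch swaps in plain string similarity from Python's `difflib` in place of a machine learning model, and the `email`/`Email` fields, the common record layout, and the 0.85 threshold are assumptions made for illustration.

```python
from difflib import SequenceMatcher


def normalize_social(profile):
    """Map a social media profile to a common layout, splitting the single name string."""
    first, _, last = profile["name"].strip().partition(" ")
    return {
        "source_id": profile["user_id"],
        "first_name": first,
        "last_name": last,
        "email": profile.get("email", "").lower(),
    }


def normalize_crm(row):
    """Map a CRM row to the same common layout."""
    return {
        "source_id": row["Customer_ID"],
        "first_name": row["First_Name"],
        "last_name": row["Last_Name"],
        "email": row.get("Email", "").lower(),
    }


def name_similarity(a, b):
    full_a = f"{a['first_name']} {a['last_name']}".lower()
    full_b = f"{b['first_name']} {b['last_name']}".lower()
    return SequenceMatcher(None, full_a, full_b).ratio()


def is_same_person(a, b, threshold=0.85):
    # An exact email match is treated as decisive; otherwise fall back to names.
    if a["email"] and a["email"] == b["email"]:
        return True
    return name_similarity(a, b) >= threshold


social = normalize_social({"user_id": "u42", "name": "John Doe"})
crm = normalize_crm({"Customer_ID": "C-0001", "First_Name": "John", "Last_Name": "Doe"})
print(is_same_person(social, crm))
```

Normalizing both sources into one layout first keeps the matching logic independent of where a record came from, which makes it easier to add further sources later.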

Concluding Insights on Implementing a Successful Big Data Strategy

Successfully implementing a Big Data strategy requires:

- A clear understanding of business goals

- Proper selection of technologies and tools

- Creating a data-driven culture

- Continuous learning and team development

- A flexible approach to change management

As practice shows, organizations that use Big Data for competitive advantage truly achieve their goals. According to our statistics, these companies:

- Are 19% more profitable

- Are 23% more successful in attracting new customers

- Retain 26% more existing customers


Ultimately, success in working with Big Data is a marathon, not a sprint. It is an ongoing process of improvement and adaptation to changing market conditions and technological advancements.

If your business is ready to take the next step and become truly "data-driven," seek professional support. Remember, success in working with data starts small: with the right questions and the right decisions.