Do you urgently need to integrate Generative AI into your business processes, but your IT department is overwhelmed by the choice between GPT-4, Gemini, and Claude? Beyond the specifics of the models themselves, it's important to consider the nuances of their APIs. We often see companies spend months on pilot projects that then fail at scale because of unacceptable latency or inflexible APIs. The point is, choosing an API isn't about choosing the smartest model; it's about choosing a tech partner whose infrastructure can handle millions of requests under a reliable SLA and integrate seamlessly with your legacy software.

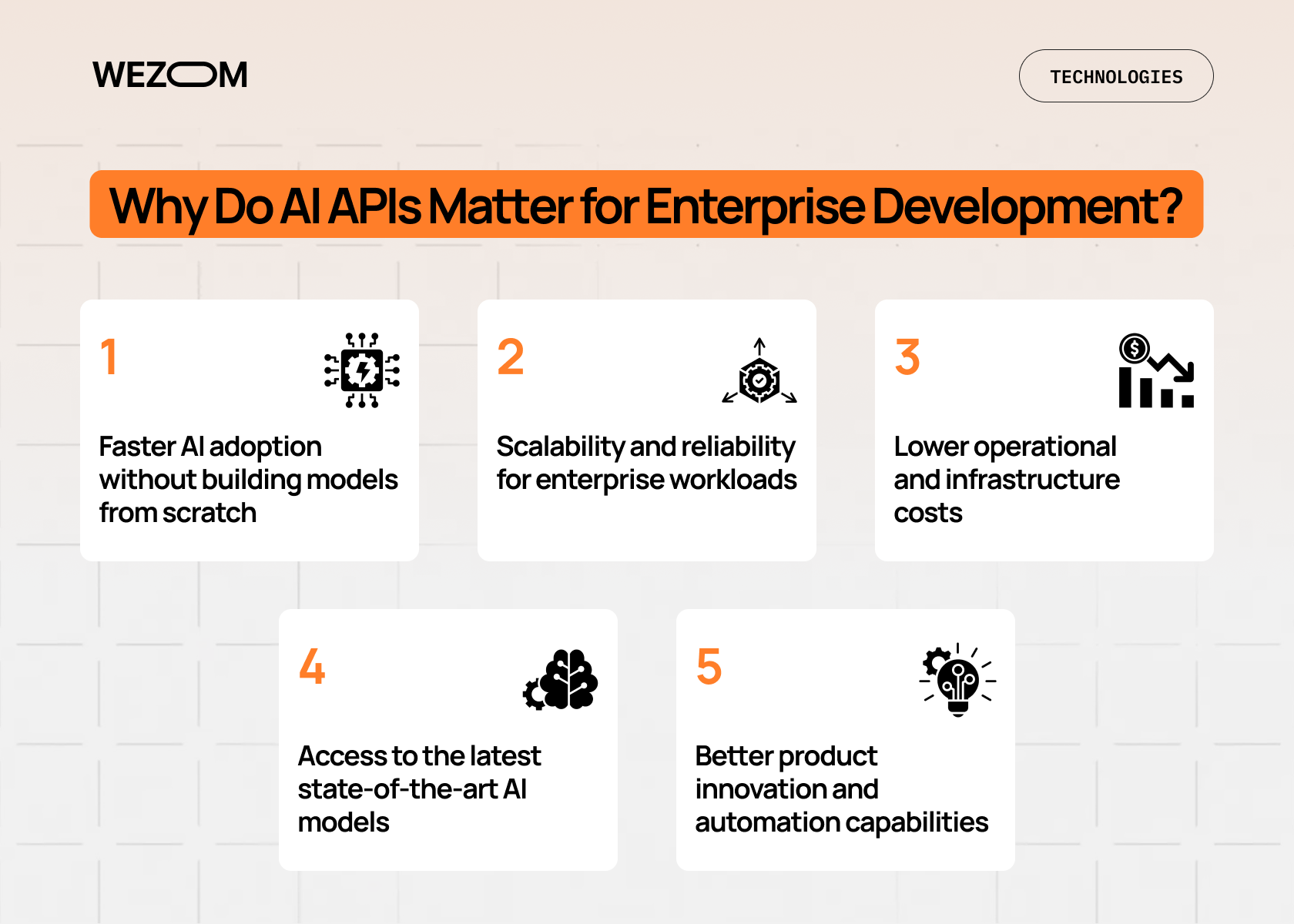

Why Do AI APIs Matter for Enterprise Development?

For the enterprise segment, ready-made AI APIs are a more cost- and time-efficient alternative to developing custom AI models from scratch. Before moving on to our AI platform comparison, we’d like to highlight the following advantages.

Faster AI adoption without building models from scratch

Using ready-made models via API is currently the fastest way to launch AI-driven functionality: it takes weeks rather than the years that custom development can require. In our experience, ready-made models like those provided by Google, OpenAI, and AWS cover about 95% of enterprise-level business cases, so building your own models, or even fine-tuning, is often an unnecessary expense that slows down your digital transformation.

Scalability and reliability for enterprise workloads

Scalability means the ability to serve more users than planned, stay stable under peak loads, and adhere to strict SLAs. All the providers discussed below handle throttling effectively and offer higher quotas for large enterprise accounts. Reliability, in turn, is typically measured by uptime (a 99.9% target is common) and predictable tail latency (P99), which is critical for real-time applications.
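To make the 99.9% figure concrete, here is a small Python sketch that converts an availability target into a monthly downtime budget. It assumes a 30-day month; real SLAs define their own measurement windows and exclusions.

```python
# Convert an availability target (e.g. 99.9%) into an allowed-downtime budget.
# Assumption: a 30-day month; actual SLAs specify their own measurement window.

def downtime_budget_minutes(availability_pct: float, days: int = 30) -> float:
    """Return the minutes of downtime permitted by an availability target."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)

for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} min/month")
```

At 99.9%, that works out to roughly 43 minutes of permitted downtime per month.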

Lower operational and infrastructure costs

Switching to a pay-as-you-go pricing model via an AI API turns fixed costs into variable ones. For example, according to our calculations, for a mid-sized company, operating an in-house GPU cluster to serve a Llama 3 70B-class model is 3-4.5 times more expensive than using comparably powerful API models, because with an API you don't pay for idle hardware, data center electricity, or the labor of MLOps specialists.
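The fixed-versus-variable trade-off can be sketched as a simple monthly comparison. Every figure below (hardware amortization, power, salaries, token volumes, and prices) is a hypothetical placeholder for illustration, not a quote from any provider or the basis of our calculation above.

```python
# Hypothetical monthly cost comparison: self-hosted GPU cluster vs pay-per-token API.
# All figures are illustrative assumptions; substitute your own.

def self_hosted_monthly(gpu_amortization: float, power: float, mlops_salaries: float) -> float:
    # Fixed costs: paid whether the cluster is busy or idle.
    return gpu_amortization + power + mlops_salaries

def api_monthly(input_tokens_m: float, output_tokens_m: float,
                price_in: float, price_out: float) -> float:
    # Variable costs: token volumes (in millions) times price per 1M tokens.
    return input_tokens_m * price_in + output_tokens_m * price_out

fixed = self_hosted_monthly(gpu_amortization=25_000, power=4_000, mlops_salaries=30_000)
variable = api_monthly(input_tokens_m=2_000, output_tokens_m=500, price_in=3.0, price_out=15.0)
print(f"self-hosted: ${fixed:,.0f}, API: ${variable:,.0f}, ratio: {fixed / variable:.1f}x")
```

Because the self-hosted figure stays the same regardless of traffic, the ratio shifts as usage changes, so it is worth rerunning the comparison with your projected volumes.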

Access to the latest state-of-the-art AI models

Giants like Google and AWS invest billions of dollars to ensure their APIs provide access to state-of-the-art models, which minimizes the risk of technological obsolescence for your business. A new version of GPT or Gemini can instantly devalue all your previous efforts if you're tied to a custom solution; with APIs, by contrast, your business keeps access to cutting-edge models without having to rewrite proprietary code.

Better product innovation and automation capabilities

AI APIs allow businesses to implement functions that previously required large technical departments. In particular, well-known enterprise platforms let businesses create intelligent agents, which makes it possible to build largely autonomous support departments and launch intelligent decision-making systems that operate 24/7 with consistent accuracy.

Overview of Each Platform

Our experts ran a series of comparative tests to understand where the hype ends and the real business value of each platform (OpenAI, Google Vertex AI, and AWS Bedrock) begins.

OpenAI API

OpenAI is considered a leader in complex reasoning and natural language understanding. For many enterprises, it’s the default choice, primarily due to the maturity of its ecosystem and the predictability of its results. Today, OpenAI focuses on developing multimodal and autonomous reasoning models:

- GPT-4o (Omni) – a flagship model that handles text, audio, and vision within a single neural network;

- The o1 family (o1-preview and o1-mini) – a new generation of models trained with reinforcement learning to reason through a problem before answering; they make on average 35% fewer errors than previous versions, in part because they hallucinate less;

- GPT-5 (in development at the time of writing) – expected to focus on long-term planning and autonomous task execution, making it a step toward agentic AI.

OpenAI's strengths include:

- Reasoning. OpenAI is a leader in tasks requiring deep text analysis and sophisticated instructions;

- Assistants API ecosystem. This ecosystem enables the creation of autonomous agents with persistent memory (conversation threads) in just a few lines of code;

- Tooling and function calling. OpenAI offers one of the most mature implementations of external function calling on the market, which is especially important for integrating AI with ERP and CRM systems.
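On the application side, function calling comes down to a dispatch step: the model names a tool and supplies its arguments, and your code runs the matching function and returns the result. The Python sketch below mocks the model's output. It assumes a tool call shaped as `{"name": ..., "arguments": "<JSON string>"}`, loosely mirroring OpenAI's format, where arguments arrive as a JSON string. `get_order_status` and its fake ERP data are hypothetical.

```python
import json

# Hypothetical local function the model is allowed to call (e.g. an ERP lookup).
def get_order_status(order_id: str) -> dict:
    fake_erp = {"A-1001": "shipped", "A-1002": "processing"}
    return {"order_id": order_id, "status": fake_erp.get(order_id, "unknown")}

# Registry of callable tools, keyed by the name the model uses.
TOOLS = {"get_order_status": get_order_status}

def dispatch_tool_call(tool_call: dict) -> str:
    """Run one model-requested tool call and return its result as a JSON string."""
    func = TOOLS.get(tool_call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {tool_call['name']}"})
    # In production, validate these arguments against the tool's schema before calling.
    args = json.loads(tool_call["arguments"])
    return json.dumps(func(**args))

# A mocked tool call, as a model might emit it:
result = dispatch_tool_call({"name": "get_order_status", "arguments": '{"order_id": "A-1001"}'})
print(result)  # {"order_id": "A-1001", "status": "shipped"}
```

The JSON result is then sent back to the model as the tool's output, so it can phrase the final answer for the user.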

As for limitations, the first is the high price per token (especially for the o1 series), which can make large-scale projects unprofitable without end-to-end optimization. The second is strong vendor lock-in: because of OpenAI-specific API formats, switching to another platform often requires rewriting prompts and data processing logic from scratch.

Google Vertex AI

Google Vertex AI is an enterprise platform integrated into Google Cloud that focuses on working with very large datasets. Let's briefly review the two Gemini models that are most suitable for the enterprise sector:

- Gemini 1.5 Pro – a model with a very long context window of up to 2 million tokens;

- Gemini 1.5 Flash – a faster, lower-cost model optimized for real-time workloads.

Google Vertex AI's strengths include:

- Native multimodality. Gemini was initially trained on video, audio, and text data simultaneously, making it ideal for analyzing video streams or call recordings;

- Google Cloud Ecosystem. This ecosystem provides seamless integration with BigQuery and Google Drive, so if your business already relies heavily on Google Cloud, implementing Vertex AI is a logical step forward.

- Long context window. With this vendor, you can load an entire knowledge base of moderate size (within the 2-million-token limit) into the model without chunking.
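Before you skip chunking, it is worth checking whether your knowledge base actually fits. The Python sketch below gives a rough estimate. It assumes about 4 characters per token, a common rule of thumb for English text; for exact counts, use the provider's token-counting tools. The documents are hypothetical.

```python
# Rough check: does a knowledge base fit into a 2M-token context window?
# Assumption: ~4 characters per token (English-text rule of thumb, not exact).

CHARS_PER_TOKEN = 4
CONTEXT_WINDOW = 2_000_000  # Gemini 1.5 Pro's maximum context, in tokens

def estimated_tokens(documents: list[str]) -> int:
    return sum(len(doc) for doc in documents) // CHARS_PER_TOKEN

def fits_in_context(documents: list[str], reserve_for_output: int = 8_192) -> bool:
    # Leave room for instructions and the model's answer.
    return estimated_tokens(documents) + reserve_for_output <= CONTEXT_WINDOW

kb = ["x" * 3_000_000, "y" * 4_000_000]  # ~7M characters of hypothetical documents
print(estimated_tokens(kb), fits_in_context(kb))
```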

Now, a few words about the limitations. The first is pricing complexity: costs depend on the region, the deployment type, and any additional security features you need. The second is integration effort, as using Vertex AI from outside Google Cloud infrastructure can be cumbersome due to strict authorization and network privacy requirements.

AWS Bedrock

Amazon created Bedrock to provide access to top models from the world's leading developers through a single interface. It’s a strong choice for those seeking flexibility without compromising business security. What makes AWS Bedrock stand out today is the "mix" of leading models it offers:

- Anthropic Claude 3.5 Sonnet and Claude 3 Opus – direct competitors to GPT-4 with a more natural writing style and strong coding performance;

- Meta Llama 3.1 – powerful open-weight models for those who want to retain control over the architecture;

- Amazon Titan – Amazon's proprietary models optimized for enterprise search workloads.

Bedrock's unique advantages include:

- Security and compliance. Bedrock supports compliance with HIPAA, GDPR, and SOC standards, which is critical for highly regulated sectors such as healthcare, finance, and government;

- Multi-model flexibility. You can combine different models (for example, Claude for text and Llama for other tasks) in the same application;

- Convenient fine-tuning. AWS offers advanced tools for fine-tuning models on your private data.
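Combining models in one application is easiest when every model is called through the same request shape. The Python sketch below builds keyword arguments for the Bedrock Runtime Converse API and picks a model per task type. The task-to-model mapping is a hypothetical example, and the model IDs should be checked against the models enabled in your account and region.

```python
# Sketch: route different task types to different Bedrock models through one interface.
# Assumption: these model IDs are enabled in your account/region; verify before use.
MODEL_BY_TASK = {
    "text": "anthropic.claude-3-5-sonnet-20240620-v1:0",
    "bulk": "meta.llama3-1-70b-instruct-v1:0",
}

def build_converse_request(task_type: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": MODEL_BY_TASK[task_type],
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }

request = build_converse_request("bulk", "Classify this support ticket: printer offline")
print(request["modelId"])

# With AWS credentials configured, the same request shape works for either model:
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-east-1")
# response = client.converse(**request)
# print(response["output"]["message"]["content"][0]["text"])
```

Because only `modelId` changes between tasks, swapping Claude for Llama (or vice versa) becomes a configuration change rather than a code change.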

As for disadvantages, Bedrock is considered an engineering-heavy solution because it requires significant expertise in AWS architecture (in other words, it's not an API you can connect in minutes). It's also worth noting that some advanced model features (for example, specific Claude modes) may reach Bedrock later than they appear in the model vendor's native API.

AI Platform Comparison: Key Capabilities

The gap between a demo and a production-ready solution often lies in the way the API is implemented – which is why it's so important to evaluate each of these enterprise machine learning platforms using stress tests in real-world conditions.

Model quality and reasoning performance

Although all three vendors offer GPT-4-level models, their cognitive profiles differ. The o1/GPT-4o models excel at complex chains of reasoning; Anthropic's Claude, available through AWS, shows superior results in programming and in adherence to safety guidelines; and Gemini is strongest at processing massive amounts of unstructured data.

Fine-tuning capabilities

If you need your enterprise AI solutions to have a brand-specific tone of voice or understand the peculiarities of your niche, fine-tuning is a must. Bedrock provides the most in-depth fine-tuning tools, with full data isolation, while Google Vertex AI is known for flexible training-parameter tuning via a user-friendly UI. As for OpenAI, its API offers a simplified fine-tuning process for GPT-4o, although hyperparameter tuning options are limited.
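Most of the effort in fine-tuning goes into preparing training examples. The Python sketch below converts question/answer pairs into the chat-style JSONL format that OpenAI's fine-tuning expects, with one `{"messages": [...]}` object per line. Vertex AI and Bedrock use their own schemas, so check their documentation before reusing this format. "Acme" and the sample data are hypothetical.

```python
import json

# Sketch: build chat-format JSONL training data (one {"messages": [...]} per line),
# the layout used by OpenAI fine-tuning. Other platforms expect different schemas.

def to_jsonl(examples: list[tuple[str, str]], system_prompt: str) -> str:
    lines = []
    for user_text, ideal_answer in examples:
        record = {"messages": [
            {"role": "system", "content": system_prompt},     # brand voice / instructions
            {"role": "user", "content": user_text},           # typical customer input
            {"role": "assistant", "content": ideal_answer},   # the answer the model should learn
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)

data = to_jsonl(
    [("What is your return window?", "You can return any item within 30 days."),
     ("Do you ship abroad?", "Yes, we ship to over 40 countries.")],
    system_prompt="You are Acme's support assistant. Answer in a friendly, concise tone.",
)
print(data)
```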

Embeddings and vector search

For RAG systems, the quality of vector representations (embeddings) is a crucial selection criterion. Google offers the text-embedding-004 model, which is natively integrated with Vertex AI Search. AWS offers Cohere and Titan embedding models, which allow flexible selection of vector size based on budget. OpenAI's text-embedding-3-small and text-embedding-3-large models, meanwhile, are considered to offer an excellent price-to-performance ratio.
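Whichever embedding model you choose, retrieval in a RAG system usually comes down to finding the stored vectors most similar to the query vector. The Python sketch below ranks documents by cosine similarity. The three-dimensional vectors and document IDs are toy placeholders; real embeddings have hundreds to thousands of dimensions and would live in a vector database.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the IDs of the k stored vectors most similar to the query."""
    ranked = sorted(index, key=lambda doc_id: cosine_similarity(query, index[doc_id]), reverse=True)
    return ranked[:k]

# Toy 3-dimensional "embeddings" for illustration only
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "warranty-terms": [0.7, 0.2, 0.3],
}
print(top_k([1.0, 0.0, 0.1], index))  # ['refund-policy', 'warranty-terms']
```

A vector database performs the same ranking with approximate-nearest-neighbor indexes so it stays fast at millions of vectors.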

Multimodal features

Today, enterprise solutions must be able not only to read, but also to see and hear. Google Gemini 1.5 Pro is the clear leader in this area, thanks to its ability to analyze hours of video or audio without prior transcription. OpenAI GPT-4o is also noteworthy, as it performs very well on static images and documents. As for AWS Bedrock (specifically, Claude 3.5 and Amazon's proprietary Titan models), it is quickly catching up with its competitors, offering highly accurate OCR and visual data analysis.

Developer experience and tooling

Ease of development directly affects how fast you can release new business features, and OpenAI leads in this regard, primarily thanks to its clear, simple documentation. Its Playground is essentially the gold standard for quickly testing prompts and parameters. Google Vertex AI, meanwhile, provides Vertex AI Studio, a Google Cloud component and a powerful MLOps tool that lets you manage model lifecycles, run side-by-side tests, and configure security in a single interface. Finally, AWS Bedrock's interface is rich but takes some getting used to; on the plus side, it has plug-and-play builders for automating workflows without writing hundreds of lines of code.

Security, compliance, and data privacy

In all three of these respects, AWS provides the most control, since traffic can stay inside your VPC through private endpoints. Google, for its part, guarantees that your data isn't used to retrain its global models and provides powerful access management tools via IAM. Finally, OpenAI models accessed through Azure come with enterprise-grade security that meets Microsoft standards.

Latency and scalability

In the enterprise segment, it's crucial to use a model with truly high performance, which is why we tested time to first token (TTFT) and overall throughput. Google, with its own global fiber network and TPU accelerators, demonstrates impressive speeds for the Gemini 1.5 Flash model, making it ideal for real-time chats. AWS's strong point is Provisioned Throughput, which lets you reserve dedicated model capacity to keep latency stable even during global peak loads. Despite OpenAI's enormous capacity, users sometimes face latency fluctuations during periods of high server load, especially on Tier 1-2 accounts.
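Time to first token and throughput are measured around a streaming response: start a timer, record when the first chunk arrives, and count chunks until the stream ends. The Python sketch below uses a simulated stream with hypothetical delays in place of a real provider SDK. Note that it counts chunks, not tokens; for real token throughput, use the token counts reported in the provider's usage metadata.

```python
import time
from typing import Iterable, Iterator

def fake_stream(chunks: list[str], first_delay: float, per_chunk: float) -> Iterator[str]:
    """Stand-in for a provider's streaming response (hypothetical timings)."""
    time.sleep(first_delay)
    for i, chunk in enumerate(chunks):
        if i:
            time.sleep(per_chunk)
        yield chunk

def measure_stream(stream: Iterable[str]) -> dict:
    start = time.perf_counter()
    ttft = None
    n_chunks = 0
    for _ in stream:
        if ttft is None:
            ttft = time.perf_counter() - start  # time to first chunk
        n_chunks += 1
    total = time.perf_counter() - start
    return {"ttft_s": ttft, "total_s": total, "chunks_per_s": n_chunks / total}

stats = measure_stream(fake_stream(["Hel", "lo", ", ", "world"], first_delay=0.2, per_chunk=0.05))
print(stats)
```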

Integration flexibility (SDKs, ecosystem tools)

Integration flexibility determines how easily AI fits into your existing IT infrastructure. OpenAI's SDKs for Python and Node.js have become the de facto industry standard, and many open-source tools are now developed with OpenAI support first. Google's platform suits companies whose stack is already built on Google tools: for example, native integration with BigQuery lets AI models analyze data directly inside the data warehouse, while integration with Firebase simplifies building mobile apps. Finally, AWS offers the deepest integration with enterprise infrastructure via S3 for data storage, Lambda for serverless computing, and Step Functions for process orchestration, and its boto3 SDK is a powerful tool for DevOps engineers.

Pricing Comparison

Let’s check our AI API comparison table, which shows approximate per-token list prices (at the time of writing) and the main hidden expense for each provider.

| Provider | Input price (per 1M tokens) | Output price (per 1M tokens) | Extra costs |
|---|---|---|---|
| OpenAI (GPT-4o) | ~$5.00 | ~$15.00 | API rate limits |
| Google (Gemini 1.5 Pro) | ~$3.50 | ~$10.50 | Vertex AI storage |
| AWS Bedrock (Claude 3.5 Sonnet) | ~$3.00 | ~$15.00 | Provisioned Throughput |

Ultimately, the main factors determining the total cost of ownership of a specific API include:

- Compute costs (essentially the cost of infrastructure for query processing and caching);

- Storage (necessary for storing vector databases and context);

- Engineering hours (i.e., the time needed to implement an API integration; in our experience, AWS requires approximately 20% more time for initial security configuration than OpenAI).
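These three factors can be combined into a rough monthly TCO figure by spreading the one-off integration effort over an amortization period. In the Python sketch below, every number (token spend, storage, hours, hourly rate, and the 12-month period) is a placeholder assumption. The only figure taken from the text is the roughly 20% extra setup time for AWS.

```python
# Sketch of a monthly TCO estimate from the three factors above.
# All inputs are placeholder assumptions; plug in your own figures.

def monthly_tco(token_cost: float, storage_cost: float,
                engineering_hours: float, hourly_rate: float,
                amortize_months: int = 12) -> float:
    # One-off integration effort is spread evenly over the amortization period.
    return token_cost + storage_cost + engineering_hours * hourly_rate / amortize_months

base_hours = 400
openai_tco = monthly_tco(800, 150, base_hours, 100)
aws_tco = monthly_tco(600, 150, base_hours * 1.2, 100)  # ~20% more setup time
print(round(openai_tco, 2), round(aws_tco, 2))
```

With these inputs, the extra engineering time outweighs AWS's lower token spend in the first year. A longer amortization period or larger traffic volumes can reverse that result.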

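Overall, at these list prices Gemini 1.5 Pro is about 30% cheaper per token than GPT-4o, and according to our estimates, moving low- and medium-complexity tasks to the Gemini family (including the lighter Gemini 1.5 Flash) can reduce your AI operating costs by 50-60% without a noticeable loss in quality compared to GPT-4.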
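To run a comparison like this for your own traffic, multiply monthly token volumes by the list prices. The Python sketch below uses the approximate prices from the table and a hypothetical volume of 100M input and 20M output tokens per month.

```python
# Monthly token spend at the table's approximate list prices (USD per 1M tokens).
# The traffic volume is a hypothetical example.
PRICES = {
    "OpenAI GPT-4o": (5.00, 15.00),
    "Google Gemini 1.5 Pro": (3.50, 10.50),
    "AWS Bedrock Claude 3.5 Sonnet": (3.00, 15.00),
}

def monthly_cost(input_m: float, output_m: float, prices: tuple[float, float]) -> float:
    price_in, price_out = prices
    return input_m * price_in + output_m * price_out

volume = (100, 20)  # millions of input and output tokens per month
costs = {name: monthly_cost(*volume, p) for name, p in PRICES.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f}")

saving = 1 - costs["Google Gemini 1.5 Pro"] / costs["OpenAI GPT-4o"]
print(f"Gemini 1.5 Pro vs GPT-4o: {saving:.0%} cheaper")
```

Input-heavy workloads such as RAG are most sensitive to the input price, while output-heavy ones such as content generation depend more on the output price. It is therefore worth running the calculation with your real input/output ratio.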

Best Use Cases for Each Provider

Now, let's look at specific use cases where each of the above platforms shines:

- OpenAI offers the optimal combination of intelligence and speed. This is precisely why it's often chosen for complex chatbots, content-creation automation, and analytics that require deep reasoning. Overall, it offers the gentlest learning curve for developers and the best cognitive capabilities available out of the box.

- Google Vertex AI is the best solution for working with large data volumes. This makes it an excellent choice for implementing AI-powered search across huge corporate knowledge bases, analyzing long videos, and multimodal applications. All this is made possible by a giant context window and native integration with Google's data ecosystem.

- AWS Bedrock is a top choice if the security of your corporate data is paramount. Its main use cases are therefore in fintech, healthcare, government, and other highly regulated industries, as well as complex backend systems with hybrid cloud architectures. Bedrock's appeal here rests on its regulatory compliance and its flexibility in choosing models.

Unsure which of the solutions described above is best suited for your business needs? Or do you need a reliable IT partner who can implement the API integration? In that case, write or call us!

Key Factors for Enterprises When Choosing an AI API

In this section, we invite you to consider the factors that determine the success of AI implementation over a 3-5 year period.

Security and compliance

We divide this factor into three levels: data residency (the ability to store and process data in a specific region in accordance with local regulations), zero data retention (a mode in which the provider commits not to store your prompts at all once a response has been generated), and certification (most often SOC 2 Type II and FedRAMP High, plus HIPAA compliance).
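Data residency is often enforced in code as well as in contracts, through a guard that only lets requests go to approved regions. The Python sketch below shows one way to do this. The policy table, the jurisdiction labels, and `ResidencyViolation` are hypothetical; the region names follow AWS conventions purely as an example.

```python
# Sketch of a data-residency guard: requests tagged with a jurisdiction may only
# be sent to regions on an allow-list. The policy below is a hypothetical example.
RESIDENCY_POLICY = {
    "EU": ["eu-central-1", "eu-west-1"],
    "US": ["us-east-1", "us-west-2"],
}

class ResidencyViolation(Exception):
    pass

def choose_region(jurisdiction: str, preferred: str) -> str:
    allowed = RESIDENCY_POLICY.get(jurisdiction)
    if not allowed:
        raise ResidencyViolation(f"no approved regions for {jurisdiction!r}")
    # Honor the preferred region only if the policy allows it; otherwise use the first approved one.
    return preferred if preferred in allowed else allowed[0]

print(choose_region("EU", preferred="us-east-1"))  # eu-central-1
```

Failing loudly on unknown jurisdictions is deliberate: it is safer to reject a request than to send regulated data to an unapproved region.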

Model performance and reliability

Reliability in the enterprise sector means both minimal hallucinations and stable, consistent responses. Specifically, our consistency audit, in which the same technical query was executed 1,000 times, showed that OpenAI's models (GPT-4.1 and the o1 series) had the lowest response drift: less than 2%.
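One simple way to express this kind of consistency is the share of responses that differ from the most common answer. The Python sketch below uses that metric as an illustrative assumption; it is not necessarily the exact definition used in our audit. The sample responses are hypothetical and stand in for 1,000 real calls.

```python
from collections import Counter

def normalize(answer: str) -> str:
    # Ignore superficial differences such as case and extra whitespace.
    return " ".join(answer.lower().split())

def inconsistency_rate(responses: list[str]) -> float:
    """Share of responses that differ from the most common (normalized) answer."""
    counts = Counter(normalize(r) for r in responses)
    _, majority = counts.most_common(1)[0]
    return 1 - majority / len(responses)

# In a real audit these would come from sending the same prompt N times (e.g. N = 1,000).
sample = ["Port 443", "port 443", "Port 443 ", "Port 8443", "Port 443"]
print(f"{inconsistency_rate(sample):.0%}")  # 20%
```

For free-form answers, exact matching is too strict. Teams often compare embeddings or use a grading model instead, but the majority-vote idea stays the same.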

Vendor lock-in vs model portability

The main risk for businesses scaling their IT infrastructure today is lock-in to proprietary features (for example, OpenAI's Assistants API). If you depend on such features because your provider offers capabilities its competitors lack, implement an abstraction layer, known as an AI gateway (a specialized API gateway), which will allow you to switch to a different model in minutes.
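At its core, an AI gateway is a single interface that application code calls, with one adapter per vendor behind it. The Python sketch below shows that structure. The adapters are hypothetical stubs that echo the prompt; in practice, each would wrap a vendor SDK call and translate request and response formats.

```python
from typing import Protocol

class ChatProvider(Protocol):
    def complete(self, prompt: str) -> str: ...

# Hypothetical adapters; in practice each would wrap a vendor SDK call.
class OpenAIAdapter:
    def complete(self, prompt: str) -> str:
        return f"[openai] {prompt}"

class BedrockAdapter:
    def complete(self, prompt: str) -> str:
        return f"[bedrock] {prompt}"

class AIGateway:
    """Single entry point; application code never imports a vendor SDK directly."""

    def __init__(self, providers: dict[str, ChatProvider], active: str):
        self.providers = providers
        self.active = active

    def switch(self, name: str) -> None:
        if name not in self.providers:
            raise KeyError(f"unknown provider {name!r}")
        self.active = name

    def complete(self, prompt: str) -> str:
        return self.providers[self.active].complete(prompt)

gateway = AIGateway({"openai": OpenAIAdapter(), "bedrock": BedrockAdapter()}, active="openai")
print(gateway.complete("Summarize Q3 sales"))  # [openai] Summarize Q3 sales
gateway.switch("bedrock")                       # a configuration change, not a code rewrite
print(gateway.complete("Summarize Q3 sales"))  # [bedrock] Summarize Q3 sales
```

The more provider-specific features your application relies on, the more logic each adapter must emulate. That is why keeping the gateway interface small pays off.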

Infrastructure load and cost

Enterprise solutions require accounting for hidden costs, including egress fees (the cost of transferring data out of a cloud provider's network) and vector database storage (used to store vectors for RAG systems). From this perspective, consider Google Vertex AI in combination with BigQuery: according to our estimates, this can reduce the cost of transferring data between the analytical warehouse and the AI model by 30-40%.

SLA requirements

Standard 99.9% uptime isn't enough today; it's important to understand the latency SLA as well. For example, in our tests AWS Bedrock with Provisioned Throughput kept time to first token under 200 ms even during peak load periods in the US, something we couldn't achieve on OpenAI's and Google's basic plans.
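A latency SLA is usually checked against a percentile rather than an average, because a few slow outliers are exactly what users notice. The Python sketch below computes a nearest-rank P99 over time-to-first-token samples and compares it with a 200 ms threshold. The samples are hypothetical.

```python
import math

def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100)."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def meets_latency_slo(ttft_ms: list[float], threshold_ms: float = 200, pct: float = 99) -> bool:
    return percentile(ttft_ms, pct) < threshold_ms

# Hypothetical TTFT measurements: 98 fast requests and 2 slow outliers
samples = [120.0] * 98 + [180.0, 450.0]
print(percentile(samples, 99), meets_latency_slo(samples))
```

Here the single 450 ms outlier falls outside the P99 window, so the SLO holds. One more slow request would break it, which is why tail latency needs continuous monitoring.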

Data governance

Finally, it's important to understand who has access to the data and how the origin of each response is tracked. For maximum transparency, we recommend implementing an audit system that logs the prompt and the model metadata, including the exact model version. This is critical for highly regulated industries, where every AI decision must be explainable.
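An audit trail of this kind can be as simple as one structured log line per model call. The Python sketch below builds such a record; the field names and sample values are illustrative assumptions. It stores SHA-256 hashes of the prompt and response, so the log can prove what was sent without copying sensitive text. If regulators require the full text, keep it in separate access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(user_id: str, prompt: str, response: str,
                 model_id: str, model_version: str, prompt_template_version: str) -> str:
    """Build one JSON audit-log line (field names are illustrative)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model_id": model_id,
        "model_version": model_version,
        "prompt_template_version": prompt_template_version,
        # Hashes identify exactly what was sent and returned without storing the raw text here.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
    }
    return json.dumps(record)

line = audit_record("analyst-42", "Approve loan #123?", "Declined: DTI above 45%.",
                    model_id="claude-3-5-sonnet", model_version="20240620",
                    prompt_template_version="v7")
print(line)
```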

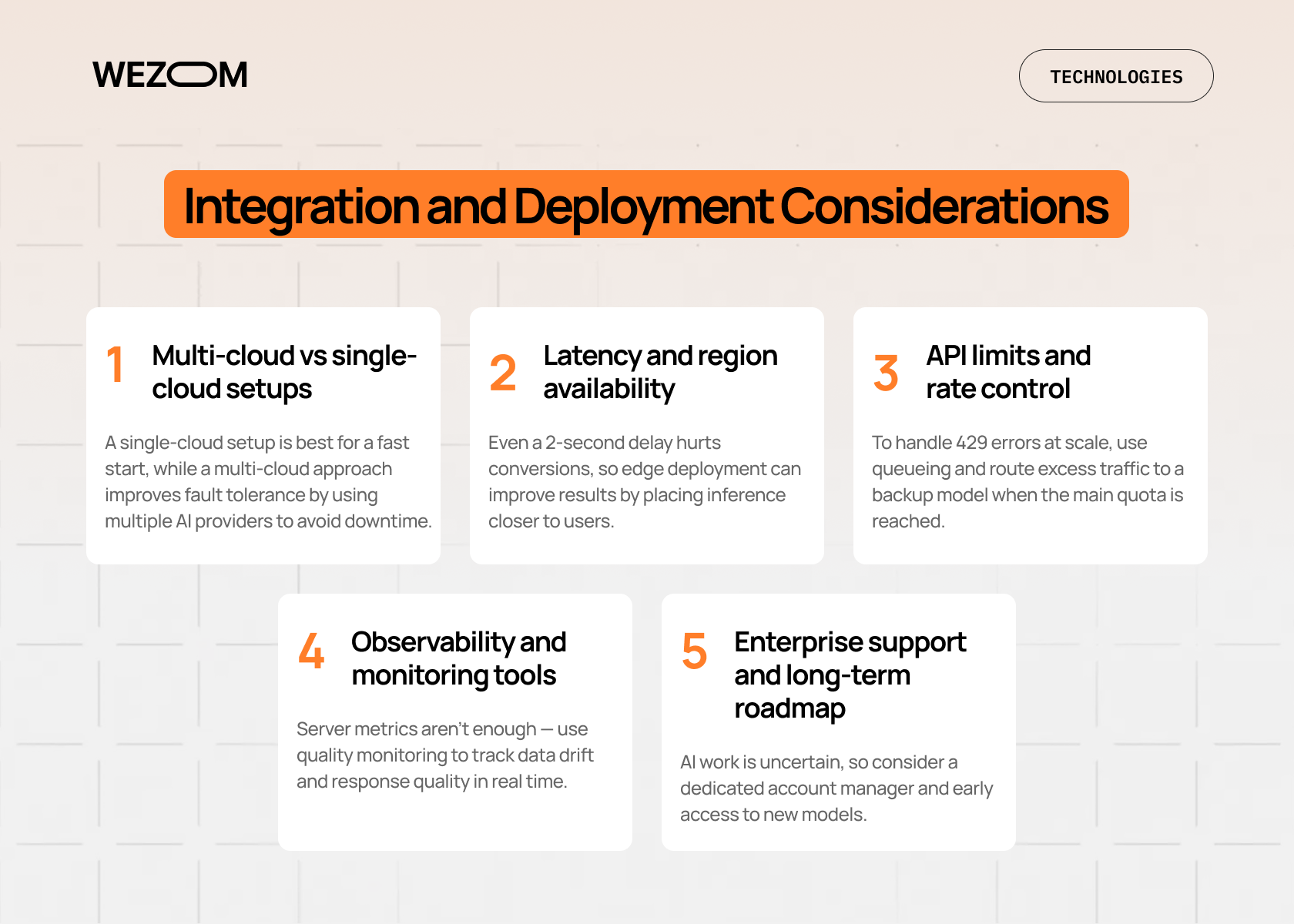

Integration and Deployment Considerations

Finally, it’s time to consider the most important integration and deployment aspects you must keep in mind.

- Multi-cloud vs single-cloud setups. A single-cloud setup is ideal for a quick start, since you rely on native tools from start to finish. A multi-cloud setup makes sense when you need maximum fault tolerance: companies typically use at least two different AI providers to avoid business interruption if one fails, as during the large-scale API outages we saw in 2024.

- Latency and region availability. A 2-second delay can kill conversion rates in a chatbot, for example. That's why it's important to deploy in regions close to your users where the models you need are available, and sometimes to use edge deployment to bring model inference as close as possible to end users, wherever they are.

- API limits and rate control. One of the main challenges when scaling is the 429 (Too Many Requests) error. A practical solution is a fallback strategy: retry with exponential backoff and, once the primary quota is exhausted, automatically redirect excess traffic to a backup model (for example, from GPT-4 to the cheaper and faster Gemini Flash).

- Observability and monitoring tools. Traditional server monitoring isn't enough; you also need to monitor output quality. In particular, we recommend combining Datadog LLM Observability with Monte Carlo to track data drift and response quality in real time.

- Enterprise support and long-term roadmap. The AI market changes constantly, so it's worth securing a dedicated account manager from your provider and negotiating access to closed beta tests of new models (for example, GPT-5 or future iterations of Claude).
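The rate-limit fallback and the multi-provider setup described above can be combined in a few lines. The Python sketch below retries the primary model with exponential backoff and jitter on a 429 and then routes the request to a backup model. `RateLimitError` and both model functions are hypothetical stand-ins for real SDK calls and their exceptions.

```python
import random
import time

class RateLimitError(Exception):
    """Stand-in for a provider's HTTP 429 (Too Many Requests) error."""

def call_with_fallback(primary, backup, prompt: str, max_retries: int = 3, base_delay: float = 0.5) -> str:
    """Retry the primary model with exponential backoff, then overflow to the backup."""
    for attempt in range(max_retries):
        try:
            return primary(prompt)
        except RateLimitError:
            if attempt < max_retries - 1:
                # Wait base_delay * 2**attempt (0.5 s, 1 s, ...) plus up to 100 ms of jitter.
                time.sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
    return backup(prompt)  # primary quota still exhausted: route to the backup model

# Hypothetical model callables for illustration
def gpt4_primary(prompt: str) -> str:
    raise RateLimitError("429 Too Many Requests")

def gemini_flash_backup(prompt: str) -> str:
    return f"flash: {prompt}"

print(call_with_fallback(gpt4_primary, gemini_flash_backup, "Hi", base_delay=0.01))  # flash: Hi
```

The jitter keeps many clients that hit the limit at the same moment from retrying in lockstep. In production, you would also log each fallback so you can see when quotas need raising.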

If you need a reliable technology partner who will handle all the challenges associated with the Generative AI API – from choosing the right model to its integration, customization, and support – feel free to contact us.

FAQ

Do AI APIs guarantee data privacy?

On enterprise plans, largely yes: the providers discussed above contractually commit that your data won’t leave the secure environment and won’t be used to retrain their general models.

Which AI API is best for enterprise-scale applications?

AWS Bedrock excels at handling ultra-high loads with predictable performance thanks to its Provisioned Throughput option; however, if you plan to work with massive data volumes, Google Vertex AI is the best choice.

Which platform provides stronger security and compliance options?

AWS Bedrock and OpenAI (especially through Azure) offer the most comprehensive access control and network isolation tools, which are used by the world's leading corporations.

Which AI API provides the best value for long-term enterprise projects?

The best total cost of ownership today comes from lightweight models. Models such as OpenAI's GPT-4o mini and Google's Gemini 1.5 Flash are inexpensive and can always be combined with more powerful models for solving complex problems.
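A common way to combine a lightweight model with a more powerful one is a cascade: try the cheap model first and escalate only when its answer looks unreliable. In the Python sketch below, both model functions and the confidence score they return are hypothetical placeholders. In practice, that signal might come from a classifier, a validation check, or a grading model.

```python
from typing import Callable, Tuple

# A model here returns (answer, confidence between 0 and 1); both models are hypothetical.
Model = Callable[[str], Tuple[str, float]]

def cascade(prompt: str, light: Model, heavy: Model, min_confidence: float = 0.8) -> str:
    answer, confidence = light(prompt)
    if confidence >= min_confidence:
        return answer  # cheap path: the expensive model is never called
    heavy_answer, _ = heavy(prompt)
    return heavy_answer  # escalate only the hard cases

def light_model(prompt: str) -> Tuple[str, float]:
    # Placeholder heuristic: pretend short prompts are easy.
    return ("short answer", 0.9 if len(prompt) < 50 else 0.4)

def heavy_model(prompt: str) -> Tuple[str, float]:
    return ("detailed answer", 0.95)

print(cascade("What are your opening hours?", light_model, heavy_model))  # short answer
print(cascade("Compare the tax implications of three cross-border restructuring options",
              light_model, heavy_model))  # detailed answer
```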

Which provider has the best multimodal capabilities?

Google is currently considered the leader, as its Gemini 1.5 Pro model can analyze hours-long video and audio streams with high accuracy out of the box.