Giving a digital product a strong competitive advantage is a complex, ongoing process, and it depends largely on applying QA methods and practices effectively.

In a nutshell, quality assurance (QA) testing is an integral stage of software development. It verifies that the software solution meets a list of technical and non-technical requirements describing its visual design, functionality, integrations, compatibility with specific operating systems and user devices, deadlines, budget, scalability, accessibility, fault tolerance, compliance with standards, and more.

Since this stage, like any other, is time-constrained, development teams must choose an approach that covers as much of the code base as possible and leaves no high-priority bugs unresolved. Below, we look at the specifics of the QA software testing process and share the best practices that help us achieve excellent results from one project to the next.

What Is QA Testing?

Quality assurance, or QA testing, is a set of activities that spans every stage of the software life cycle: development, release, and post-deployment. Their purpose is to ensure that the launched product reaches the required level of quality.

The key people in the software quality assurance process are testers (or QA engineers), specialists who test software to identify errors and defects. They perform various types of testing, such as functional, integration, system, and performance testing, writing test cases and checking the software against its specifications. They also report and document the bugs they find and verify that those bugs are fixed before the product is released.

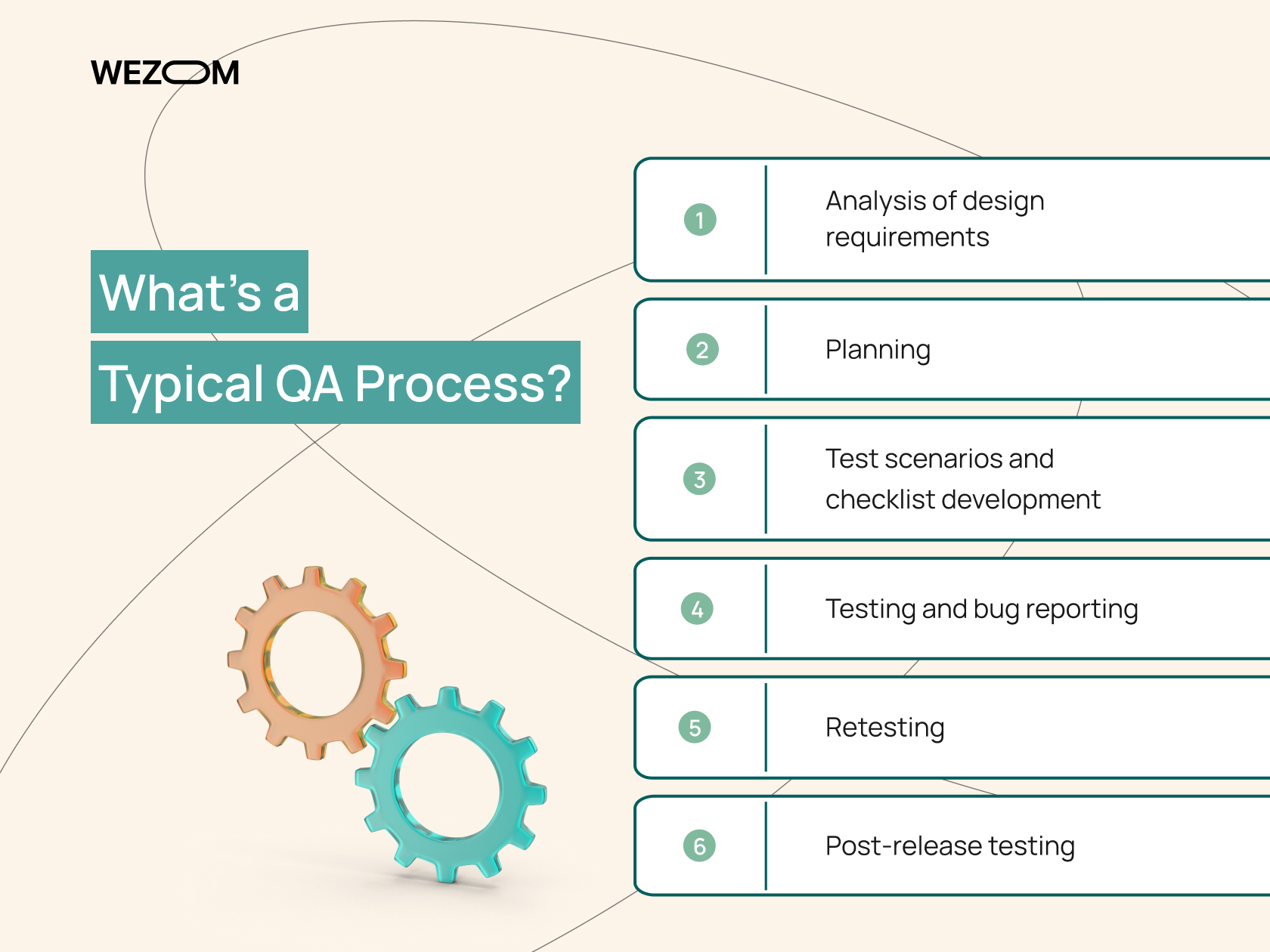

What's a Typical QA Process?

Let's look at the six key steps of a typical software quality assurance process.

Analysis of design requirements

First, testers carefully analyze the product specifications. This makes it possible to catch defects early, before they significantly increase the project budget or delay the launch. It also lets the team start writing test cases early, long before any part of the project is ready to be tested.

Planning

Now that they have a detailed picture of the final solution, testers can plan the testing process. In addition to choosing testing approaches, levels, and tools and prioritizing individual features and modules, they determine the budget and deadlines. Tasks are also assigned to individual testers at this stage.

Test scenarios and checklist development

At this stage, the testing team develops test scenarios and checklists. When the tests are run, testers compare the expected results with the actual ones.
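
To make the comparison of expected and actual results concrete, here is a minimal Python sketch of a checklist runner. The feature under test (`apply_discount`) and the checklist items are hypothetical; in a real project each item would exercise the actual product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ChecklistItem:
    description: str
    run: Callable[[], object]  # produces the actual result
    expected: object


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical feature under test: returns the discounted price, rounded to cents."""
    return round(price * (1 - percent / 100), 2)


def run_checklist(items: list[ChecklistItem]) -> list[tuple[str, bool]]:
    """Execute each item and compare its actual result with the expected one."""
    results = []
    for item in items:
        actual = item.run()
        results.append((item.description, actual == item.expected))
    return results


checklist = [
    ChecklistItem("10% off 50.00", lambda: apply_discount(50.0, 10), 45.0),
    ChecklistItem("0% leaves price unchanged", lambda: apply_discount(19.99, 0), 19.99),
    ChecklistItem("100% makes it free", lambda: apply_discount(80.0, 100), 0.0),
]

for description, passed in run_checklist(checklist):
    print(f"{'PASS' if passed else 'FAIL'}: {description}")
```

Keeping the checklist as data makes it easy to review with stakeholders and to extend without touching the runner.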

If automated tests are planned, QA engineers must also prepare a test environment that is as close as possible to the one in which the final solution will run.

"When developing test scenarios and checklists, we prioritize thoroughness to ensure that our testing processes are not only streamlined but also highly accurate. The consistency of the test environment, especially when employing automated testing, is crucial. This approach allows us to align our tests closely with production settings, yielding results that are predictive and relevant, thus minimizing deployment surprises. This meticulous preparation is key to the reliability of our testing outcomes." (c) Quality Assurance Lead, Functionize
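
One simple way to catch differences between the test and production environments is to compare their settings before an automated run. The sketch below assumes each environment can be described as a flat dictionary of settings (for example, exported from deployment configuration); the setting names and versions are hypothetical.

```python
def find_env_drift(production: dict[str, str], test: dict[str, str]) -> dict[str, tuple]:
    """Return every setting whose value differs between the environments or exists in only one."""
    keys = production.keys() | test.keys()
    return {
        key: (production.get(key), test.get(key))
        for key in sorted(keys)
        if production.get(key) != test.get(key)
    }


# Hypothetical environment descriptions.
production = {"python": "3.12", "postgres": "16.2", "browser": "chromium", "locale": "en-US"}
test_env = {"python": "3.12", "postgres": "15.6", "browser": "chromium"}

for key, (prod_value, test_value) in find_env_drift(production, test_env).items():
    print(f"{key}: production={prod_value!r}, test={test_value!r}")
```

An empty result means the two descriptions match; any entry is a candidate cause of results that differ between test and production.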

Testing and bug reporting

The testing itself begins at the level of individual code components (module or unit testing). QA engineers then test at the API and UI levels. All detected bugs are recorded in the bug-tracking system.
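
At the module level, a test exercises one component in isolation. Here is a minimal sketch using Python's standard `unittest` module; `normalize_email` is a hypothetical component, and the file can be run with a test runner such as `python -m unittest`.

```python
import unittest


def normalize_email(raw: str) -> str:
    """Hypothetical component under test: trims whitespace and lower-cases an e-mail address."""
    return raw.strip().lower()


class NormalizeEmailTest(unittest.TestCase):
    def test_strips_whitespace(self):
        self.assertEqual(normalize_email("  user@example.com "), "user@example.com")

    def test_lowercases(self):
        self.assertEqual(normalize_email("User@Example.COM"), "user@example.com")
```

Tests like these run in seconds, so they catch defects in a single component before API- and UI-level tests, which are slower and touch many components at once.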

Retesting

The results of the initial testing are passed to the developers, who fix the detected bugs. Testers then test the project again, covering both the features where bugs were found and the components connected to them, to make sure the fixes did not introduce new bugs (the latter check is known as regression testing).
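
Deciding which connected components to retest can be framed as walking a dependency graph: start from the fixed components and collect everything that depends on them, directly or transitively. A minimal sketch, assuming a hypothetical map from each component to the components that use it:

```python
from collections import deque


def components_to_retest(fixed: set[str], dependents: dict[str, set[str]]) -> set[str]:
    """Return the fixed components plus everything that (transitively) depends on them."""
    to_retest = set(fixed)
    queue = deque(fixed)
    while queue:  # breadth-first walk over "is used by" edges
        component = queue.popleft()
        for dependent in dependents.get(component, set()):
            if dependent not in to_retest:
                to_retest.add(dependent)
                queue.append(dependent)
    return to_retest


# Hypothetical project: maps each component to the components that use it.
dependents = {
    "auth": {"checkout", "profile"},
    "checkout": {"order_history"},
    "catalog": {"checkout", "search"},
}

print(sorted(components_to_retest({"auth"}, dependents)))
```

A fix in `auth` here pulls in `checkout`, `profile`, and, through `checkout`, `order_history`, while `catalog` and `search` can be skipped.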

Post-release testing

Once retesting of a given part of the project is complete, the testing and retesting steps (steps 4 and 5) are repeated for the next components. After release, the testing team also runs smoke tests to check the project's stability. Once they pass, a test report is generated.
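
A smoke suite is a short list of critical checks run once, with the outcome summarized in a report. The sketch below assumes each check is a function returning `True` on success; the check names are hypothetical stand-ins for calls to the deployed application, and an exception counts as a failure.

```python
from typing import Callable


def run_smoke_suite(checks: dict[str, Callable[[], bool]]) -> dict:
    """Run each critical check once and summarize the outcome as a simple report."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:  # a crashing check counts as a failure, not a runner error
            ok = False
        if not ok:
            failed.append(name)
    return {
        "total": len(checks),
        "passed": len(checks) - len(failed),
        "failed": failed,
        "stable": not failed,
    }


# Hypothetical checks; real ones would call the deployed application.
def home_page_responds() -> bool:
    return True


def user_can_log_in() -> bool:
    return True


def payment_gateway_reachable() -> bool:
    raise ConnectionError("simulated timeout")


report = run_smoke_suite({
    "home page responds": home_page_responds,
    "user can log in": user_can_log_in,
    "payment gateway reachable": payment_gateway_reachable,
})
print(report)
```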

Our Best Practices for QA Testing

Now let's look at the QA testing practices that help us create high-quality products.

Identification of potential areas for improvement

We analyze the product and its development processes to identify potential weaknesses or areas for improvement. We also hold meetings with the development team and other specialists to discuss ideas and suggestions for improving the product, and similar meetings within the QA team to look for ways to optimize our own work.

Hypotheses formulation

Next, we formulate hypotheses based on the identified needs and opportunities for improvement. This helps us act more deliberately at each new stage of the project and improves the quality of our decisions.

Research planning

Our testing plan always specifies the testing methods, tools, and resources needed to test the hypotheses. At this stage, we also set success criteria for evaluating the test results.
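
Success criteria are easiest to apply when they are written down as measurable thresholds. A minimal sketch, assuming hypothetical metric names and values for a hypothetical hypothesis:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    metric: str
    threshold: float
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        return value >= self.threshold if self.higher_is_better else value <= self.threshold


def evaluate(criteria: list[SuccessCriterion], measured: dict[str, float]) -> dict[str, bool]:
    """Check each measured metric against its criterion; a missing metric counts as not met."""
    return {
        c.metric: c.metric in measured and c.is_met(measured[c.metric])
        for c in criteria
    }


# Hypothetical hypothesis: "caching the catalog page makes it faster without hurting stability".
criteria = [
    SuccessCriterion("p95_load_time_ms", 800, higher_is_better=False),
    SuccessCriterion("test_pass_rate", 0.98),
]
measured = {"p95_load_time_ms": 640.0, "test_pass_rate": 0.97}
print(evaluate(criteria, measured))
```

Fixing the thresholds before testing starts keeps the later evaluation from being bent to fit the results.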

Testing

Testing follows the plan. Depending on the nature of the hypothesis, we use different testing methods, such as functional, performance, and user experience testing.

Data collection and analysis

At this stage, we collect data on the test results and analyze it to evaluate what we have achieved and to identify possible problems or areas for improvement.
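
One common analysis is to group test outcomes by module and compute failure rates to see where problems cluster. The sketch assumes results arrive as `(module, passed)` pairs; the data and the 25% threshold are hypothetical.

```python
from collections import defaultdict


def failure_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Group (module, passed) outcomes by module and return each module's failure rate."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for module, passed in results:
        totals[module] += 1
        if not passed:
            failures[module] += 1
    return {module: failures[module] / totals[module] for module in totals}


results = [
    ("checkout", True), ("checkout", False), ("checkout", False), ("checkout", True),
    ("search", True), ("search", True),
    ("profile", True), ("profile", False),
]
rates = failure_rates(results)
problem_areas = sorted(module for module, rate in rates.items() if rate > 0.25)
print(rates)
print(problem_areas)
```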

Conclusions and recommendations

We draw conclusions from the results and discuss them with the team, after which each team member can offer specific recommendations for further action.

Iteration

Finally, we apply the findings to further improve software quality assurance processes and the product itself. The research and testing cycle is repeated as many times as the project deadlines allow.

We would also like to draw special attention to the choice of QA testing tools. We initially used Selenium for test automation, but after analyzing Playwright's capabilities, we decided to switch to this library. We prefer Playwright for the following reasons:

- Multitasking and asynchrony. Playwright provides asynchronous methods for interacting with the browser, which improves performance and lets us work efficiently with multiple browser tabs or windows at once. Selenium's official bindings for languages such as Python and Java are synchronous, so parallel work usually relies on the test runner or Selenium Grid.

- Support for all major browser engines. Each Playwright release is tied to tested builds of Chromium, Firefox, and WebKit, enabling extensive cross-browser testing on multiple platforms. With Selenium, keeping browser drivers in step with new browser versions has historically taken extra effort.

- Mobile device emulation. Playwright provides convenient APIs for emulating mobile devices with different screen resolutions, user agents, touch support, and other parameters, which simplifies responsive design testing. Selenium also supports mobile emulation but may require more configuration.

- Screenshots and video recording. Playwright makes it easy to take screenshots and record videos of test runs, which simplifies debugging and analyzing results. Selenium can take screenshots, but recording video requires additional tools.

- Browser event handling. Playwright lets tests subscribe to browser events such as dialogs, downloads, and console messages, and it dismisses dialogs automatically when no handler is registered, so tests do not stall. Selenium's classic WebDriver protocol sends each command as a separate HTTP request, which can make it less performant.

- Built-in test debugging. The library offers built-in debugging tools, such as the Playwright Inspector and Trace Viewer, including the ability to pause execution and inspect the browser state at specific steps. Selenium has debugging options too, but they are less extensive.

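- Flexible control over network requests. Playwright provides an API for intercepting, modifying, and mocking network requests, which lets us test various server interaction scenarios effectively. Selenium's classic WebDriver API has no built-in equivalent, so this usually requires a proxy or browser-specific DevTools features.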

- Active community and regular updates. The library is actively maintained by Microsoft and has an active community. Regular releases with new functionality keep the tool relevant for modern projects. Selenium, as a mature and widely adopted project, receives major changes less frequently.

The library also offers many other useful features, such as built-in HTTP/HTTPS proxy support, default handling of browser events, support for several programming languages (JavaScript/TypeScript, Python, Java, and .NET), structured access to page elements through locators, headless mode, and integration with popular continuous integration and delivery tools such as Jenkins, CircleCI, and GitLab CI.

Overall, Playwright's advantages in performance, browser support, usability, and community activity justify choosing it over Selenium for our projects.

Conclusion

We hope this article has helped you understand what quality assurance is and introduced you to the QA best practices we follow at WEZOM. If you would like to entrust the quality assurance of your software (or the entire project) to us, write or call us right now.