Model architecture and training approach

We built an NLP-based tool for analyzing sentiment on Twitter. It relies on two transformer models: BART-large-MNLI for topic classification and DeBERTa-v3 for sentiment analysis. We fine-tuned both models on a manually labeled sample of more than 3,000 English-language tweets collected by key hashtags related to the war in Ukraine, including #ukrainewar, #standwithukraine, #donbas, #nato, #invasion, #refugees, #putin, and #zelensky.

Data processing and preparation

We collected data through the Twitter API using a scalable pool of accounts. At the preprocessing stage, we filtered out irrelevant messages by several criteria: we removed spam, bot activity, and duplicates (using heuristics and Pandas), excluded multimedia attachments and external links, and restricted the analysis to English-language content via automatic language detection.
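The filtering steps above can be sketched roughly as follows. This is a minimal standard-library illustration, not the project's actual Pandas code: the `text` and `lang` keys, the `max_hashtags` threshold, and the choice to strip links from the text (rather than drop tweets that contain them) are all assumptions made for the example.

```python
import re

URL_RE = re.compile(r"https?://\S+")


def clean_tweets(tweets, max_hashtags=8):
    """Keep English tweets, strip links, and drop empties, hashtag floods, and duplicates.

    `tweets` is an iterable of dicts with hypothetical keys "text" and "lang";
    "lang" is assumed to have been filled in by a language detector upstream.
    """
    seen = set()
    kept = []
    for tweet in tweets:
        if tweet.get("lang") != "en":
            continue  # restrict the analysis to English-language content
        # Remove external links, then collapse runs of whitespace.
        text = " ".join(URL_RE.sub("", tweet["text"]).split())
        if not text:
            continue  # nothing left once links are removed
        if text.count("#") > max_hashtags:
            continue  # crude spam heuristic (assumed threshold)
        key = text.lower()
        if key in seen:
            continue  # case-insensitive duplicate
        seen.add(key)
        kept.append({**tweet, "text": text})
    return kept


if __name__ == "__main__":
    sample = [
        {"text": "Stand with Ukraine https://t.co/abc", "lang": "en"},
        {"text": "stand with  ukraine", "lang": "en"},
    ]
    print(clean_tweets(sample))
```

Real bot detection usually needs account-level signals (posting frequency, account age), which this sketch does not attempt.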

Pipeline setup and training

The analytical pipeline was built in Python using the HuggingFace Transformers, Pandas, and Scikit-learn libraries. To assess model quality, we tracked Accuracy, Precision, Recall, and F1-score on validation and test subsamples. We also used cross-validation, followed by error analysis on independent data.
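To make the metrics concrete, here is a small sketch of how accuracy and per-class precision, recall, and F1 are computed for a three-class sentiment task. It is written with the standard library only so the arithmetic is visible; in a Scikit-learn pipeline the same numbers would normally come from `accuracy_score` and `precision_recall_fscore_support`. The function name `evaluate` and the toy labels are illustrative, not taken from the project.

```python
from collections import Counter


def evaluate(y_true, y_pred):
    """Return accuracy, macro-averaged F1, and per-label precision/recall/F1."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this label, but it was wrong
            fn[truth] += 1  # missed the true label

    per_label = {}
    for label in labels:
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_label[label] = {"precision": precision, "recall": recall, "f1": f1}

    accuracy = sum(tp.values()) / len(y_true)
    macro_f1 = sum(m["f1"] for m in per_label.values()) / len(labels)
    return {"accuracy": accuracy, "macro_f1": macro_f1, "per_label": per_label}


if __name__ == "__main__":
    truth = ["positive", "negative", "neutral", "negative"]
    preds = ["positive", "negative", "negative", "negative"]
    print(evaluate(truth, preds))
```

Macro averaging weights every class equally, which is often preferred when one sentiment class dominates the sample; whether the project reported macro, micro, or weighted averages is not stated in the source.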

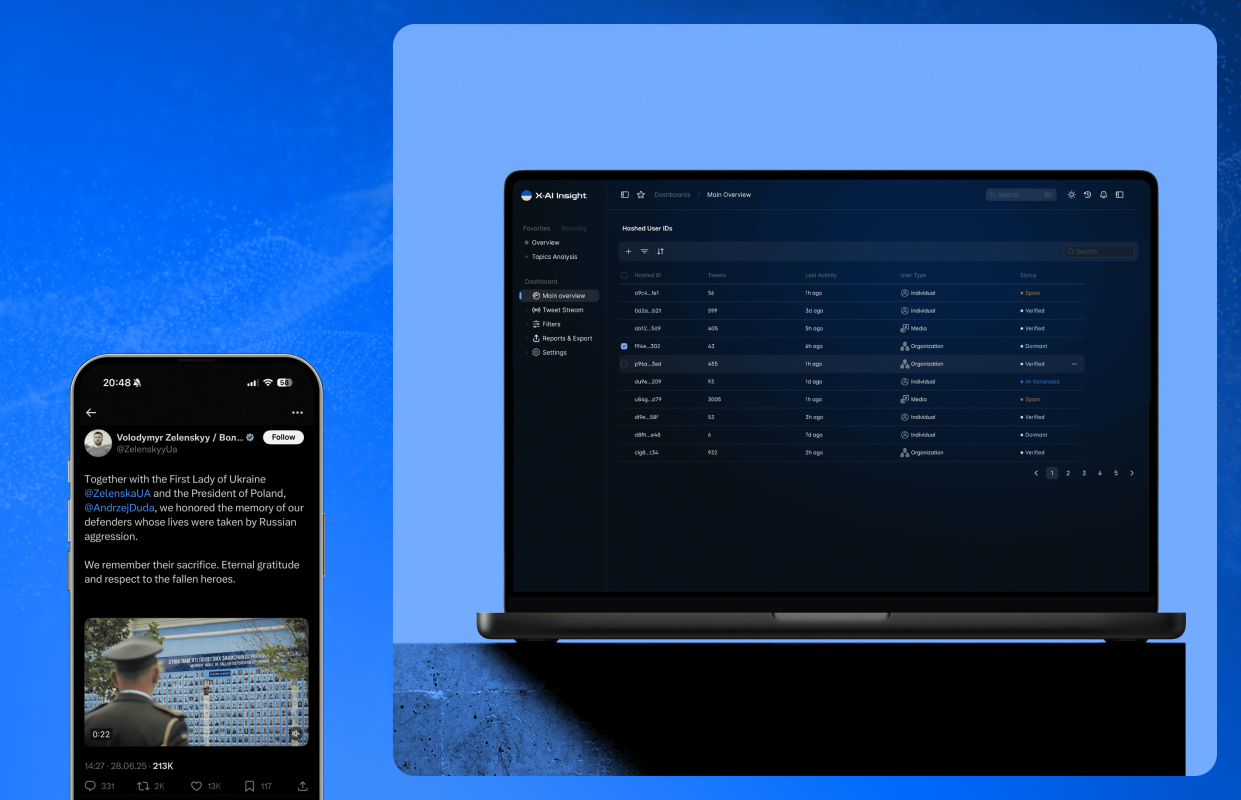

Data output and visualization

The sentiment analysis system stores only tweet text and hashed user IDs, which allows us to meet GDPR requirements. A dashboard visualizes topic and sentiment analytics in real time. The solution is currently used by the client's analytics department.

Python

- Python – we chose this language for its versatility and strong support for NLP, API, and data analysis tooling. Its flexibility let us combine all project components, from tweet collection to analytics visualization, in a single architecture.

BART-large-MNLI and DeBERTa-v3 models

- BART-large-MNLI and DeBERTa-v3 models – BART-large-MNLI classified tweets by topic (e.g., refugees, sanctions, war) based on multi-topic entropy features, while DeBERTa-v3 determined the sentiment of messages (positive, negative, or neutral), taking into account context and the informal, distorted wording typical of social networks. Both models were fine-tuned on a manually labeled set of more than 3,000 tweets to improve accuracy and adapt them to the topic of the conflict.
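As an illustration of topic tagging with BART-large-MNLI, the sketch below uses the HuggingFace zero-shot classification pipeline with the public `facebook/bart-large-mnli` checkpoint. The source does not say how the model was invoked or fine-tuned, so treat this as one common usage pattern, not the project's implementation; the helper names, the topic list, and the `0.5` threshold are assumptions.

```python
def load_zero_shot_classifier():
    """Build a zero-shot pipeline; downloads the model on first use."""
    # Imported lazily: requires the `transformers` package and a backend such as PyTorch.
    from transformers import pipeline

    return pipeline("zero-shot-classification", model="facebook/bart-large-mnli")


def tag_topics(text, topics, classifier, threshold=0.5):
    """Return every topic scoring at least `threshold`, highest score first.

    `classifier` is any callable with the zero-shot pipeline interface: for a
    single string it returns a dict whose "labels" and "scores" lists are
    aligned and sorted by descending score.
    """
    result = classifier(text, candidate_labels=topics, multi_label=True)
    return [
        label
        for label, score in zip(result["labels"], result["scores"])
        if score >= threshold
    ]


if __name__ == "__main__":
    classifier = load_zero_shot_classifier()
    topics = ["refugees", "sanctions", "war"]  # illustrative topic list
    print(tag_topics("Thousands of families crossed the border today.", topics, classifier))
```

With `multi_label=True` each topic is scored independently, so a tweet can carry several topics at once. Passing the classifier in as an argument also makes it easy to swap in a fine-tuned checkpoint later.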

Pandas, Scikit-learn, Matplotlib, and Tweepy libraries

- Pandas, Scikit-learn, Matplotlib, and Tweepy libraries – we used Pandas for data preprocessing, cleaning, and aggregation (including filtering out duplicates, flooding, and bots); Scikit-learn for calculating metrics (Precision, Recall, and F1) and building auxiliary models for cross-validation; Matplotlib for generating offline charts and auxiliary visualizations; and Tweepy for accessing the Twitter API and collecting English-language messages by hashtags and keywords at scale.

GDPR compliance

- GDPR compliance – we hashed all user IDs and stored only message texts. We also excluded the processing of personal data, which makes the platform compliant with GDPR requirements and applicable in strictly regulated environments.
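The ID hashing described above could look roughly like this. The source does not name a hash function, so the keyed SHA-256 (HMAC) used here is an assumption, as are the function names and the `user_id` and `text` keys. A keyed hash is shown because plain hashes of short numeric IDs can be reversed by brute force.

```python
import hashlib
import hmac


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Return a stable keyed SHA-256 digest of a user ID as a hex string."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_stored_record(tweet: dict, secret_key: bytes) -> dict:
    """Keep only the hashed user ID and the tweet text; drop every other field."""
    return {
        "user": pseudonymize_user_id(str(tweet["user_id"]), secret_key),
        "text": tweet["text"],
    }


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-your-vault"  # placeholder, not a real key
    raw = {"user_id": 12345, "text": "Example tweet", "location": "Kyiv"}
    print(to_stored_record(raw, key))
```

The same input and key always produce the same digest, so per-user aggregation still works without storing the original ID.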

User-friendly interface

- User-friendly interface – we developed a web dashboard with interactive charts that analysts can monitor in real time. In particular, users can track the distribution of tweets by topic and tone, sentiment dynamics by day or week, and key activity spikes. The service is fully integrated with the client’s internal analytics systems and is already in regular use.
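A dashboard view such as "sentiment dynamics by day" comes down to a grouped count. The sketch below shows that aggregation with the standard library; the record keys `created_at` (an ISO 8601 timestamp without a timezone suffix) and `sentiment` are hypothetical, and the real dashboard may aggregate differently.

```python
from collections import Counter, defaultdict
from datetime import datetime


def sentiment_by_day(records):
    """Count tweets per sentiment label for each calendar day, in date order."""
    counts = defaultdict(Counter)
    for record in records:
        day = datetime.fromisoformat(record["created_at"]).date().isoformat()
        counts[day][record["sentiment"]] += 1
    # ISO dates sort chronologically as plain strings.
    return {day: dict(counter) for day, counter in sorted(counts.items())}


if __name__ == "__main__":
    rows = [
        {"created_at": "2022-03-02T09:00:00", "sentiment": "negative"},
        {"created_at": "2022-03-01T10:15:00", "sentiment": "positive"},
        {"created_at": "2022-03-01T18:40:00", "sentiment": "negative"},
    ]
    print(sentiment_by_day(rows))
```

Weekly series or spike detection can be layered on top of these daily counts, for example by comparing each day with a trailing average.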

Real-time analysis of public opinion in wartime

The sentiment analysis platform we created allows the client to automatically classify tens of thousands of war-related messages by topic and tone, helping analysts track sentiment and trends on social networks. A tool like this, built on BART and DeBERTa for English-language tweets, can also be valuable for other newsrooms, media outlets, and think tanks.

Reliability, scalability, and extensibility

Thanks to its flexible architecture, the solution can be adapted to new platforms (for example, Reddit, Telegram, or YouTube) without radical changes to its core. High-quality filtering of bots and fakes makes it especially useful in the context of information warfare. The dashboard is already used for analytical tasks related to X (formerly Twitter).