Model architecture and training approach

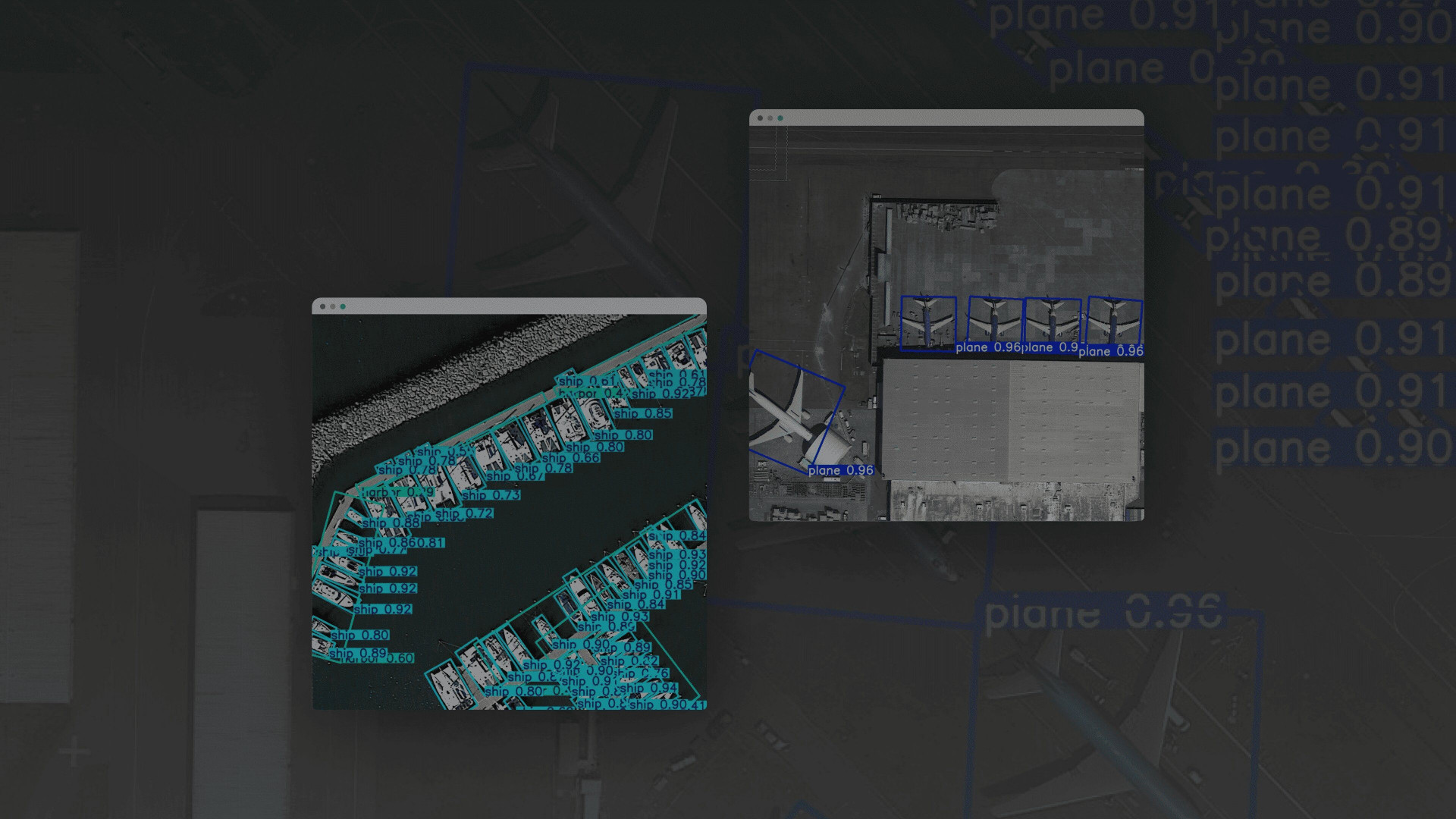

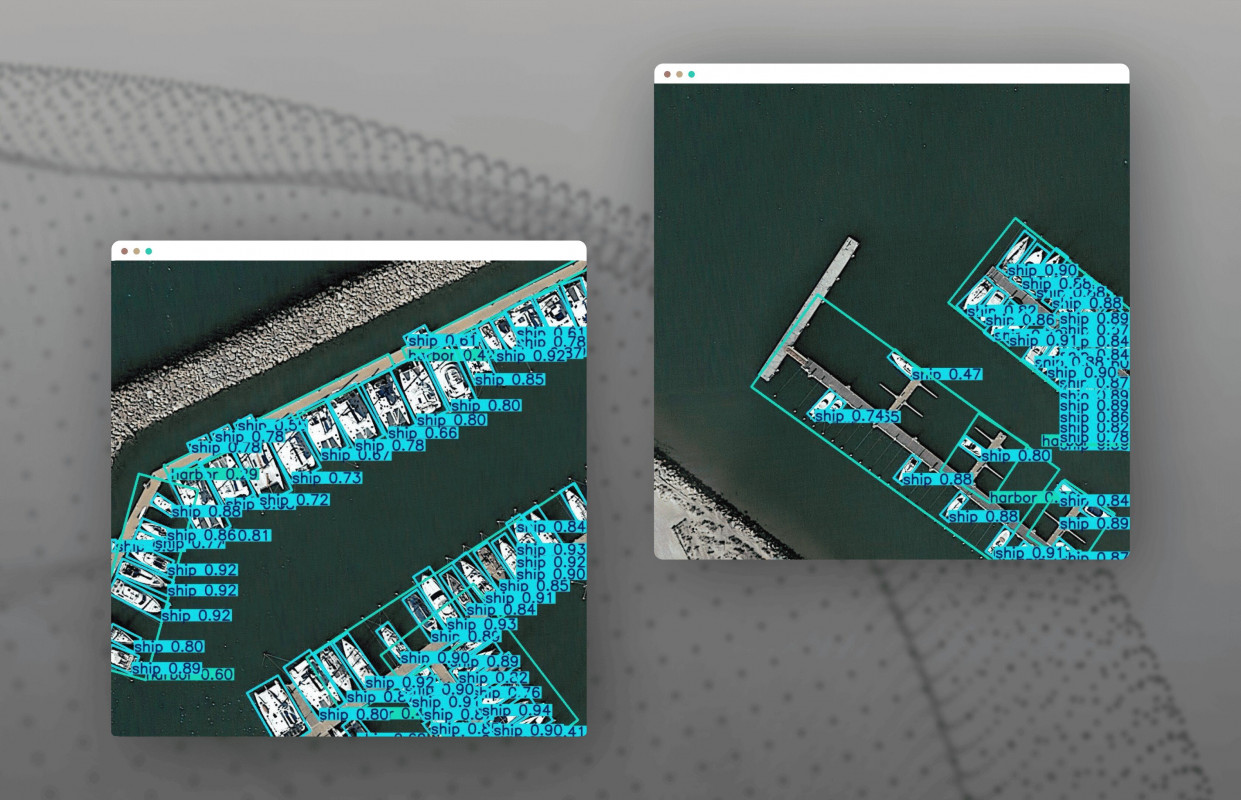

We based this solution, intended for strategic object detection in drone imagery, on the YOLOv8 architecture. We applied transfer learning: starting from a pre-trained YOLOv8 model, we further trained it on the DOTA dataset, which was designed specifically for object detection in remote sensing imagery.
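Fine-tuning on DOTA starts with reading its annotations. DOTA stores one object per line as four corner points (x1 y1 x2 y2 x3 y3 x4 y4), followed by a category name and a difficulty flag, and some releases prepend metadata lines. The sketch below parses that format in plain Python. `DotaObject` and `parse_dota_labels` are illustrative names, not part of DOTA's tooling or of the original training code, and mapping DOTA categories onto the project's own target classes would be a separate step that is not shown.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class DotaObject:
    """One annotated object: four corner points, a class name and a difficulty flag."""

    corners: List[Point]
    category: str
    difficult: bool


def parse_dota_labels(text: str) -> List[DotaObject]:
    """Parse DOTA-style annotation text (one object per line).

    Each object line is 'x1 y1 x2 y2 x3 y3 x4 y4 category difficult'.
    Lines with a different number of fields, such as metadata headers
    ('imagesource:...', 'gsd:...'), are skipped.
    """
    objects: List[DotaObject] = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 10:
            continue  # metadata header, blank or malformed line
        coords = [float(v) for v in fields[:8]]
        # Pair up the eight numbers into four (x, y) corners.
        corners = [(coords[i], coords[i + 1]) for i in range(0, 8, 2)]
        objects.append(DotaObject(corners, fields[8], fields[9] == "1"))
    return objects
```

Skipping any line that does not have exactly ten fields keeps the parser tolerant of header lines without hard-coding their names.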

Model customization and target classes

To achieve high accuracy, we customized the AI model by redesigning the detection head and reconfiguring the classifier for the target classes: aircraft, helicopters, tankers, refineries, trucks, ports, and others. We also added support for oriented bounding boxes (OBB), which was essential for identifying objects that appear rotated at arbitrary angles in the image.
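An oriented bounding box is commonly parameterized by its center, width, height and rotation angle. The sketch below, assuming that parameterization with the angle in radians, converts such a box into the four corner points used for drawing, evaluation and output. The function name is hypothetical and is not taken from the project's code.

```python
import math
from typing import List, Tuple


def obb_to_corners(cx: float, cy: float, w: float, h: float,
                   theta: float) -> List[Tuple[float, float]]:
    """Corners of an oriented bounding box, listed in order around its perimeter.

    (cx, cy) is the box center, (w, h) its side lengths and theta its
    rotation in radians (theta = 0 gives an axis-aligned box).
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets from the center before rotation.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    corners = []
    for dx, dy in offsets:
        # Rotate each offset by theta, then translate it to the center.
        corners.append((cx + dx * cos_t - dy * sin_t,
                        cy + dx * sin_t + dy * cos_t))
    return corners
```

In OpenCV, `cv2.boxPoints(((cx, cy), (w, h), angle))` describes the same rotated rectangle, though it takes the angle in degrees and may list the corners in a different order.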


Tech stack and pipeline implementation

Our PyTorch-based YOLOv8 object detection implementation was built as a pipeline on the PyTorch stack (ML core), OpenCV (aerial image loading and preprocessing), and FastAPI (API layer). Image processing runs as batch inference with REST access, and inference results are saved automatically to the database for further analysis and visualization.
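The batch-inference flow can be sketched as a loop that splits incoming images into fixed-size batches, runs the detector once per batch, and persists each image's results. In this sketch `detect_batch` (which would wrap the YOLOv8 model) and `save` (which would write to the database) are hypothetical callables supplied by the caller, and the default batch size of 8 is arbitrary.

```python
from typing import Callable, Dict, Iterator, List, Sequence

Detection = Dict[str, object]


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def run_batch_inference(
    image_paths: Sequence[str],
    detect_batch: Callable[[Sequence[str]], List[List[Detection]]],
    save: Callable[[str, List[Detection]], None],
    batch_size: int = 8,
) -> int:
    """Run detect_batch over image_paths in fixed-size batches and save every
    image's detections. Returns the number of images processed."""
    processed = 0
    for batch in batched(image_paths, batch_size):
        results = detect_batch(batch)  # expected: one list of detections per image
        if len(results) != len(batch):
            raise RuntimeError("detector returned a result count that does not match the batch")
        for path, detections in zip(batch, results):
            save(path, detections)
            processed += 1
    return processed
```

Passing the detector and the storage function in as parameters keeps the loop testable with stubs and lets the same code serve both REST-triggered jobs and offline archive processing.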

Specifics of aerial photograph processing and output format

Since aerial photographs vary in scale, source, and shooting angle, the input data requires a dedicated approach to normalization and scaling. At the output, the system returns the exact bounding box coordinates together with object class labels, so the results can be used for analytics and reconnaissance with minimal manual processing.
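One common way to feed images of different sizes to a fixed-size detector is letterboxing: resize uniformly so the image fits, then pad the remainder. Reporting exact coordinates then requires inverting that transform. The sketch below assumes a square 1024-pixel model input and centered padding; both are assumptions, not details confirmed by the project.

```python
from typing import Tuple


def letterbox_params(src_w: int, src_h: int, dst: int = 1024) -> Tuple[float, float, float]:
    """Scale and padding that fit a src_w x src_h image into a dst x dst input.

    The image is resized uniformly (aspect ratio preserved) and centered,
    with the leftover space split evenly as padding on both sides.
    """
    scale = min(dst / src_w, dst / src_h)
    pad_x = (dst - src_w * scale) / 2
    pad_y = (dst - src_h * scale) / 2
    return scale, pad_x, pad_y


def to_original(x: float, y: float, scale: float, pad_x: float, pad_y: float) -> Tuple[float, float]:
    """Map a point predicted on the model input back to original-image pixels."""
    return (x - pad_x) / scale, (y - pad_y) / scale
```

For a 4000 x 2000 image, the scale is 0.256 with 256 pixels of vertical padding, and the model-input center (512, 512) maps back to (2000, 1000). Each OBB corner would be mapped the same way. Ultralytics performs a comparable letterbox-and-rescale step internally, so this sketch mainly makes the arithmetic explicit.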

PyTorch

- PyTorch – we chose it as the main ML framework due to its flexibility, well-developed ecosystem, and native support for YOLOv8 models. It allowed us to effectively implement transfer learning and model customization.

YOLOv8

- YOLOv8 – we chose YOLOv8 as the detection model because it combines high accuracy with the low latency needed to automate real-time aerial imagery analysis for defense.

OpenCV

- OpenCV – we used this library for preprocessing input images and visualizing results because of its speed and extensive image processing capabilities, including support for oriented bounding boxes.

NumPy

- NumPy – we chose this foundational numerical library to process image arrays, normalize the input object detection datasets for aerial imagery, and transform object coordinates.
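Coordinate normalization for training data is a typical task of this kind. Ultralytics documents an OBB label format with a class index followed by eight corner coordinates scaled to the range 0 to 1. The sketch below converts pixel corners into such a line. It is written in plain Python to stay self-contained; with NumPy the same step becomes a single vectorized `np.clip(points / [w, h], 0, 1)`. The function name is hypothetical.

```python
from typing import Sequence, Tuple


def to_normalized_obb_line(class_index: int, corners: Sequence[Tuple[float, float]],
                           img_w: int, img_h: int) -> str:
    """Format one object as 'class x1 y1 x2 y2 x3 y3 x4 y4' with coordinates in [0, 1]."""
    if len(corners) != 4:
        raise ValueError("expected four corner points")
    values = []
    for x, y in corners:
        # Divide by the image size and clamp, since annotations can spill past the border.
        values.append(min(max(x / img_w, 0.0), 1.0))
        values.append(min(max(y / img_h, 0.0), 1.0))
    return " ".join([str(class_index)] + [f"{v:.6f}" for v in values])
```

Normalized coordinates make labels independent of image resolution, which matters when the training data mixes sources of different sizes.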

FastAPI

- FastAPI – our software engineers chose this lightweight, asynchronous framework to build a real-time object detection API that serves inference from the custom AI model for satellite and aerial imagery. It allowed quick integration into the existing infrastructure and leaves ample room for scaling.

PostgreSQL

- PostgreSQL – we chose this relational DBMS to store inference results, processing logs, and metadata. It ensured easy integration with BI tools and external analytical platforms.
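A single detections table is enough to make inference results queryable for BI-style aggregation. In the sketch below, Python's built-in `sqlite3` stands in for PostgreSQL so that the snippet runs anywhere. The table and column names are hypothetical. With a PostgreSQL driver such as psycopg, the SQL stays largely the same, except that placeholders are written `%s` and the key would typically be an identity column.

```python
import sqlite3
from typing import Dict, List

SCHEMA = """
CREATE TABLE IF NOT EXISTS detections (
    id         INTEGER PRIMARY KEY,
    image_path TEXT NOT NULL,
    label      TEXT NOT NULL,
    score      REAL NOT NULL,
    corners    TEXT NOT NULL  -- eight pixel coordinates, space-separated
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store(conn: sqlite3.Connection, image_path: str, detections: List[dict]) -> None:
    """Insert one row per detection for the given image."""
    rows = [
        (image_path, d["label"], d["score"], " ".join(str(v) for v in d["corners"]))
        for d in detections
    ]
    conn.executemany(
        "INSERT INTO detections (image_path, label, score, corners) VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()


def counts_by_label(conn: sqlite3.Connection, min_score: float = 0.5) -> Dict[str, int]:
    """Number of detections per class at or above a confidence threshold."""
    cur = conn.execute(
        "SELECT label, COUNT(*) FROM detections WHERE score >= ? GROUP BY label ORDER BY label",
        (min_score,),
    )
    return dict(cur.fetchall())
```

Keeping the confidence score in its own column lets dashboards choose their own threshold at query time instead of baking one into the stored results.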

JSON

- JSON – we return the resulting bounding boxes with their object classes in a structured JSON format, which makes the output easy to parse and integrate with other systems (e.g., dashboards, GIS interfaces, and situational centers).
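The shape of such a response can be sketched with standard-library dataclasses serialized through `json`. The field names (`image_id`, `label`, `score`, `corners`) are a hypothetical schema for illustration; the project's actual JSON layout is not given.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple


@dataclass
class Detection:
    label: str
    score: float
    corners: List[Tuple[float, float]]  # four (x, y) points in original-image pixels


@dataclass
class ImageResult:
    image_id: str
    width: int
    height: int
    detections: List[Detection] = field(default_factory=list)


def to_json(result: ImageResult) -> str:
    """Serialize one image's detections; asdict() recurses into nested dataclasses."""
    return json.dumps(asdict(result), indent=2)
```

Returning corner points rather than a center and angle spares consumers such as GIS tools from having to know the model's rotation convention.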

During the development of the FastAPI-based image processing pipeline, we evaluated the model on validation and test sets, where it achieved mAP@0.5 = 0.80, precision = 0.82, and recall = 0.80. These are strong results for multi-class object detection on satellite and aerial images, and such metrics are crucial in situations where recognition accuracy is paramount and false positives must be minimized. Inference took 0.3 seconds per image on average, which makes the solution suitable for real-time or near-real-time batch analysis of large datasets.
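Precision and recall at an IoU threshold of 0.5 follow from matching predictions to ground-truth boxes. The sketch below uses greedy matching by descending confidence. It simplifies in two ways: it uses axis-aligned IoU, whereas OBB evaluation uses the overlap of rotated polygons, and it does not compute mAP, which additionally averages precision over recall levels and classes. It illustrates the metric definitions only and does not reproduce the reported figures.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Tuple[str, float, Box]],
                     gts: List[Tuple[str, Box]],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy matching: higher-scoring predictions claim the best unmatched
    ground-truth box of the same class with IoU >= iou_thr."""
    matched = set()
    tp = 0
    for label, _score, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best, best_iou = None, iou_thr
        for i, (gt_label, gt_box) in enumerate(gts):
            if i in matched or gt_label != label:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best, best_iou = i, overlap
        if best is not None:
            matched.add(best)
            tp += 1
    fp = len(preds) - tp  # unmatched predictions
    fn = len(gts) - tp    # missed ground-truth objects
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```

As a worked check on the reported figures, precision 0.82 and recall 0.80 give an F1 score of 2 · 0.82 · 0.80 / (0.82 + 0.80) ≈ 0.81. At 0.3 seconds per image, sequential processing handles roughly 3 images per second, or about 12,000 images per hour.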

Flexibility, scalability, and readiness for further growth

On a test sample of 1,000 images containing more than 200,000 labeled objects, the system demonstrated stable real-time object detection in aerial photos without quality degradation. Thanks to the transfer learning approach, the solution does not require labeling large new datasets: additional training on a small number of labeled examples is sufficient. Support for oriented bounding boxes allows the system to handle images in which objects are tilted or rotated at an angle, which is typical of real-life aerial photography.
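A short calculation shows why oriented boxes matter for elongated objects such as trucks or aircraft. When a rectangle is rotated, the smallest axis-aligned box around it grows, so much of that box is background. The sketch below computes this. The 60 x 10 pixel object size is purely illustrative.

```python
import math


def aabb_area_of_rotated_rect(w: float, h: float, theta: float) -> float:
    """Area of the axis-aligned box enclosing a w x h rectangle rotated by theta radians."""
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    return (w * c + h * s) * (w * s + h * c)


def background_fraction(w: float, h: float, theta: float) -> float:
    """Share of the axis-aligned box that is not covered by the object itself."""
    return 1.0 - (w * h) / aabb_area_of_rotated_rect(w, h, theta)
```

For a 60 x 10 object rotated by 45°, the axis-aligned box covers 2,450 square pixels against the object's 600, so about 76% of it is background. Axis-aligned boxes of neighboring parked objects can therefore overlap heavily, which can lead non-maximum suppression to discard valid detections.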

What's next

As the solution evolves further, we are working in the following directions:

- Implementation of active learning mechanisms – the custom AI model for remote sensing will be retrained on errors identified during operation, without the need for full manual annotation, which will lead to higher accuracy;

- Integration with GIS platforms and situational centers – to use this image recognition software for aerial mapping in real-life operational scenarios in both the civilian and military sectors;

- Addition of new object classes – the architecture will be able to adapt to new types of targets, which is crucial as tasks keep changing;

- Scaling to GPU clusters – this will allow the AI-powered image annotation and detection service to process large archives of aerial photographs in real time or with minimal latency, as required when monitoring dynamic events.
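The article does not specify which active learning strategy is planned. A common option is uncertainty sampling, which sends the images the model is least sure about to annotators first. The sketch below ranks images by how many detections fall into an ambiguous confidence band. The 0.3 to 0.7 band and the function name are hypothetical choices.

```python
from typing import Dict, List


def select_for_review(scores_by_image: Dict[str, List[float]],
                      budget: int,
                      low: float = 0.3,
                      high: float = 0.7) -> List[str]:
    """Pick up to `budget` images with the most ambiguous detections.

    A detection counts as ambiguous when its confidence lies in [low, high];
    images without any ambiguous detection are never selected.
    """
    ranked = []
    for image, scores in scores_by_image.items():
        ambiguous = sum(1 for s in scores if low <= s <= high)
        if ambiguous:
            ranked.append((ambiguous, image))
    # Most ambiguous first; ties broken by image name for a stable order.
    ranked.sort(key=lambda item: (-item[0], item[1]))
    return [image for _, image in ranked[:budget]]
```

Corrected labels for the selected images would then feed the next fine-tuning round, so annotation effort is concentrated where the model is weakest.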